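Storage

ConfigMap

The ConfigMap feature was introduced in Kubernetes 1.2. Many applications read their configuration from configuration files, command-line arguments, or environment variables.

Creating a ConfigMap

Creating from a directory

Create the configuration files and place them in the directory /data/app/k8s/configMap/configs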

    [root@master configMap]# cat /data/app/k8s/configMap/configs/myConfig1.properties
    enemies=aliens
    lives=3
    enemies.cheat=true
    enemies.cheat.level=noGoodRotten
    secret.code.passphrase=UUDDLRLRBABAS
    secret.code.allowed=true
    secret.code.lives=30
    [root@master configMap]# cat /data/app/k8s/configMap/configs/myConfig2.properties
    color.good=purple
    color.bad=yellow
    allow.textmode=true
    how.nice.to.look=fairlyNice

Create the ConfigMap

    kubectl create configmap my-configs --from-file=/data/app/k8s/configMap/configs

    [root@master configMap]# kubectl create configmap my-configs --from-file=/data/app/k8s/configMap/configs
    configmap/my-configs created
    [root@master configMap]# kubectl get configmaps
    NAME               DATA   AGE
    kube-root-ca.crt   1      45d
    my-configs         2      66s
    [root@master configMap]# kubectl describe configmaps my-configs
    Name:         my-configs
    Namespace:    default
    Labels:       <none>
    Annotations:  <none>

    Data
    ====
    myConfig1.properties:
    ----
    enemies=aliens
    lives=3
    enemies.cheat=true
    enemies.cheat.level=noGoodRotten
    secret.code.passphrase=UUDDLRLRBABAS
    secret.code.allowed=true
    secret.code.lives=30

    myConfig2.properties:
    ----
    color.good=purple
    color.bad=yellow
    allow.textmode=true
    how.nice.to.look=fairlyNice

    BinaryData
    ====

    Events:  <none>
    [root@master configMap]#

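When --from-file points at a directory, every file in that directory becomes one key-value pair in the ConfigMap: the key is the file name and the value is the file's content.

Creating from a file

Point --from-file at a single file to create a ConfigMap from just that file.

    kubectl create configmap my-fill-config --from-file=/data/app/k8s/configMap/configs/myConfig1.properties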

    [root@master configMap]# kubectl create configmap my-fill-config --from-file=/data/app/k8s/configMap/configs/myConfig1.properties
    configmap/my-fill-config created
    [root@master configMap]# kubectl get configmaps
    NAME               DATA   AGE
    kube-root-ca.crt   1      45d
    my-configs         2      3m38s
    my-fill-config     1      5s
    [root@master configMap]# kubectl describe configmaps my-fill-config
    Name:         my-fill-config
    Namespace:    default
    Labels:       <none>
    Annotations:  <none>

    Data
    ====
    myConfig1.properties:
    ----
    enemies=aliens
    lives=3
    enemies.cheat=true
    enemies.cheat.level=noGoodRotten
    secret.code.passphrase=UUDDLRLRBABAS
    secret.code.allowed=true
    secret.code.lives=30

    BinaryData
    ====

    Events:  <none>
    [root@master configMap]#

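The --from-file parameter can be given more than once. Passing it twice, once for each of the two configuration files from the previous example, has the same effect as specifying the whole directory.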
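As a rough illustration of that equivalence, the sketch below builds one --from-file flag per regular file in a directory and prints the resulting command instead of running it, so it works without a cluster. The scratch directory and shortened file contents are stand-ins for /data/app/k8s/configMap/configs, not the author's actual setup.

```shell
set -eu

# Hypothetical scratch directory standing in for /data/app/k8s/configMap/configs.
cfg_dir="$(mktemp -d)"
printf 'enemies=aliens\nlives=3\n' > "$cfg_dir/myConfig1.properties"
printf 'color.good=purple\ncolor.bad=yellow\n' > "$cfg_dir/myConfig2.properties"

# One --from-file flag per regular file; each file name becomes a ConfigMap key.
flags=""
keys=""
for f in "$cfg_dir"/*; do
  if [ -f "$f" ]; then
    flags="$flags --from-file=$f"
    keys="$keys $(basename "$f")"
  fi
done

# Print the command rather than executing it; run it yourself on a real cluster.
echo "kubectl create configmap my-configs$flags"
echo "keys:$keys"
```

The printed command should create the same two keys as passing the directory itself.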

Creating from literal values

Use the --from-literal parameter to pass configuration values directly. It can be given more than once, in the following format:

    kubectl create configmap k-v-config --from-literal=special.how=very --from-literal=special.type=charm

    [root@master configMap]# kubectl create configmap k-v-config --from-literal=special.how=very --from-literal=special.type=charm
    configmap/k-v-config created
    [root@master configMap]# kubectl get configmaps
    NAME               DATA   AGE
    k-v-config         2      3s
    kube-root-ca.crt   1      45d
    my-configs         2      5m49s
    my-fill-config     1      2m16s
    [root@master configMap]# kubectl describe configmaps k-v-config
    Name:         k-v-config
    Namespace:    default
    Labels:       <none>
    Annotations:  <none>

    Data
    ====
    special.type:
    ----
    charm
    special.how:
    ----
    very

    BinaryData
    ====

    Events:  <none>
    [root@master configMap]#

Exporting a ConfigMap as YAML

An existing ConfigMap can be printed in YAML format with the following command:

    kubectl get configmaps my-configs -o yaml

    [root@master configMap]# kubectl get configmaps my-configs -o yaml
    apiVersion: v1
    data:
      myConfig1.properties: |
        enemies=aliens
        lives=3
        enemies.cheat=true
        enemies.cheat.level=noGoodRotten
        secret.code.passphrase=UUDDLRLRBABAS
        secret.code.allowed=true
        secret.code.lives=30
      myConfig2.properties: |
        color.good=purple
        color.bad=yellow
        allow.textmode=true
        how.nice.to.look=fairlyNice
    kind: ConfigMap
    metadata:
      creationTimestamp: "2024-12-24T12:25:14Z"
      name: my-configs
      namespace: default
      resourceVersion: "189544"
      uid: b80b50f8-3b48-4982-a963-8b790fde20d5
    [root@master configMap]#

Using a ConfigMap in a Pod

Using a ConfigMap to supply environment variables

Create the manifest configMapEnv.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: special-config
      namespace: default
    data:
      special.how: very
      special.type: charm
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: env-config
      namespace: default
    data:
      log_level: INFO
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: dapi-test-pod
    spec:
      containers:
        - name: test-container
          image: 192.168.16.110:20080/stady/myapp:v1
          command: ["/bin/sh", "-c", "env"]
          env:
            - name: SPECIAL_LEVEL_KEY
              valueFrom:
                configMapKeyRef:
                  name: special-config
                  key: special.how
            - name: SPECIAL_TYPE_KEY
              valueFrom:
                configMapKeyRef:
                  name: special-config
                  key: special.type
          envFrom:
            - configMapRef:
                name: env-config
      restartPolicy: Never

The entries of special-config are imported one key at a time (configMapKeyRef), while env-config is imported in full (envFrom).

    [root@master configMap]# kubectl apply -f configMapEnv.yaml
    configmap/special-config unchanged
    configmap/env-config unchanged
    pod/dapi-test-pod created
    [root@master configMap]# kubectl get pod
    NAME            READY   STATUS      RESTARTS   AGE
    dapi-test-pod   0/1     Completed   0          38s
    ....
    [root@master configMap]# kubectl logs dapi-test-pod
    MYAPP_SVC_PORT_80_TCP_ADDR=10.98.57.156
    NGINX_SVC_SERVICE_HOST=10.104.90.73
    KUBERNETES_PORT=tcp://10.96.0.1:443
    MYAPP_SERVICE_PORT_HTTP=80
    KUBERNETES_SERVICE_PORT=443
    MYAPP_SVC_PORT_80_TCP_PORT=80
    HOSTNAME=dapi-test-pod
    SHLVL=1
    MYAPP_SVC_PORT_80_TCP_PROTO=tcp
    HOME=/root
    MYAPP_NODEPORT_PORT_80_TCP=tcp://10.99.67.70:80
    NGINX_SVC_PORT=tcp://10.104.90.73:80
    MYAPP_SERVICE_HOST=10.97.220.149
    NGINX_SVC_SERVICE_PORT=80
    SPECIAL_TYPE_KEY=charm
    MYAPP_SVC_PORT_80_TCP=tcp://10.98.57.156:80
    MYAPP_NODEPORT_SERVICE_PORT_HTTP=80
    NGINX_SVC_PORT_80_TCP_ADDR=10.104.90.73
    MYAPP_SERVICE_PORT=80
    MYAPP_PORT=tcp://10.97.220.149:80
    NGINX_SVC_PORT_80_TCP_PORT=80
    NGINX_SVC_PORT_80_TCP_PROTO=tcp
    NGINX_VERSION=1.12.2
    KUBERNETES_PORT_443_TCP_ADDR=10.96.0.1
    MYAPP_NODEPORT_SERVICE_HOST=10.99.67.70
    MYAPP_PORT_80_TCP_ADDR=10.97.220.149
    PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
    KUBERNETES_PORT_443_TCP_PORT=443
    KUBERNETES_PORT_443_TCP_PROTO=tcp
    MYAPP_PORT_80_TCP_PORT=80
    MYAPP_PORT_80_TCP_PROTO=tcp
    NGINX_SVC_PORT_80_TCP=tcp://10.104.90.73:80
    MYAPP_SVC_SERVICE_HOST=10.98.57.156
    MYAPP_NODEPORT_SERVICE_PORT=80
    MYAPP_NODEPORT_PORT=tcp://10.99.67.70:80
    SPECIAL_LEVEL_KEY=very
    log_level=INFO
    KUBERNETES_SERVICE_PORT_HTTPS=443
    KUBERNETES_PORT_443_TCP=tcp://10.96.0.1:443
    PWD=/
    MYAPP_NODEPORT_PORT_80_TCP_ADDR=10.99.67.70
    MYAPP_PORT_80_TCP=tcp://10.97.220.149:80
    KUBERNETES_SERVICE_HOST=10.96.0.1
    MYAPP_SVC_SERVICE_PORT=80
    MYAPP_SVC_PORT=tcp://10.98.57.156:80
    MYAPP_NODEPORT_PORT_80_TCP_PORT=80
    MYAPP_NODEPORT_PORT_80_TCP_PROTO=tcp
    [root@master configMap]#

Using a ConfigMap to set command-line arguments

Create the manifest configMapCmd.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: special-config
      namespace: default
    data:
      special.how: very
      special.type: charm
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: dapi-test-pod
    spec:
      containers:
        - name: test-container
          image: 192.168.16.110:20080/stady/myapp:v1
          command: ["/bin/sh", "-c", "echo $(SPECIAL_LEVEL_KEY) $(SPECIAL_TYPE_KEY)"]
          env:
            - name: SPECIAL_LEVEL_KEY
              valueFrom:
                configMapKeyRef:
                  name: special-config
                  key: special.how
            - name: SPECIAL_TYPE_KEY
              valueFrom:
                configMapKeyRef:
                  name: special-config
                  key: special.type
      restartPolicy: Never

    [root@master configMap]# kubectl apply -f configMapCmd.yaml
    configmap/special-config created
    pod/dapi-test-pod created
    [root@master configMap]#
    [root@master configMap]# kubectl get pod
    NAME            READY   STATUS      RESTARTS   AGE
    dapi-test-pod   0/1     Completed   0          6s
    ....
    [root@master configMap]#
    [root@master configMap]# kubectl logs dapi-test-pod
    very charm
    [root@master configMap]#

Using a ConfigMap through a volume

Create the manifest configMapFile.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: special-config
      namespace: default
    data:
      special.how: very
      special.type: charm
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: dapi-test-pod
    spec:
      containers:
        - name: test-container
          image: 192.168.16.110:20080/stady/myapp:v1
          command: ["/bin/sh", "-c", "cat /etc/config/special.how"]
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
      volumes:
        - name: config-volume
          configMap:
            name: special-config
      restartPolicy: Never

    [root@master configMap]# kubectl apply -f configMapFile.yaml
    configmap/special-config created
    pod/dapi-test-pod created
    [root@master configMap]# kubectl get pod
    NAME            READY   STATUS      RESTARTS   AGE
    dapi-test-pod   0/1     Completed   0          6s
    ...
    [root@master configMap]#
    [root@master configMap]# kubectl logs dapi-test-pod
    very[root@master configMap]#

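There are several options for consuming a ConfigMap through a volume. The most basic is to populate the volume with files, where each key becomes a file name and the key's value becomes that file's content.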
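One of the other options is the `items` field of a configMap volume, which projects only selected keys and lets you choose the file name for each. The sketch below writes such a manifest to a temporary file. The Pod name, the `busybox:1.36` image, and the `how.txt` file name are illustrative assumptions, not part of the original setup.

```shell
set -eu

manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: dapi-items-pod            # hypothetical Pod name
spec:
  containers:
    - name: test-container
      image: busybox:1.36         # assumed image; substitute your own
      command: ["/bin/sh", "-c", "cat /etc/config/how.txt"]
      volumeMounts:
        - name: config-volume
          mountPath: /etc/config
  volumes:
    - name: config-volume
      configMap:
        name: special-config
        items:                    # only the listed keys are projected
          - key: special.how
            path: how.txt         # file name under mountPath
  restartPolicy: Never
EOF
echo "manifest written to $manifest"

# On a real cluster (with special-config already created):
#   kubectl apply -f "$manifest" && kubectl logs dapi-items-pod
```

With this layout, special.type would not appear under /etc/config at all, since it is not listed in `items`.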

Hot-updating a ConfigMap

Create the manifest configMapHot.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: log-config
      namespace: default
    data:
      log_level: INFO
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: my-nginx
      template:
        metadata:
          labels:
            name: my-nginx
        spec:
          containers:
            - name: my-nginx
              image: 192.168.16.110:20080/stady/myapp:v1
              ports:
                - containerPort: 80
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/config
          volumes:
            - name: config-volume
              configMap:
                name: log-config

    [root@master configMap]# kubectl apply -f configMapHot.yaml
    configmap/log-config unchanged
    deployment.apps/my-nginx created
    [root@master configMap]# kubectl get pods -l name=my-nginx
    NAME                        READY   STATUS    RESTARTS   AGE
    my-nginx-599788c876-sfxt8   1/1     Running   0          2m17s
    [root@master configMap]# kubectl exec $(kubectl get pods -l name=my-nginx -o=name|cut -d "/" -f2) -- cat /etc/config/log_level
    INFO[root@master configMap]#

Edit the ConfigMap

    kubectl edit configmap log-config

Change the value of log_level to DEBUG, wait about 10 seconds, then check the content of the mounted file again.

The command opens the object in an editor, much like editing a file in vi. Make the change, then save and quit.

    # Please edit the object below. Lines beginning with a '#' will be ignored,
    # and an empty file will abort the edit. If an error occurs while saving this file will be
    # reopened with the relevant failures.
    #
    apiVersion: v1
    data:
      log_level: DEBUG
    kind: ConfigMap
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","data":{"log_level":"INFO"},"kind":"ConfigMap","metadata":{"annotations":{},"name":"log-config","namespace":"default"}}
      creationTimestamp: "2024-12-24T13:16:25Z"
      name: log-config
      namespace: default
      resourceVersion: "195129"
      uid: 4f221f29-8dfc-40e4-960e-cbed32609ede

Wait about 10 seconds, then query again

    [root@master configMap]# kubectl exec $(kubectl get pods -l name=my-nginx -o=name|cut -d "/" -f2) -- cat /etc/config/log_level
    DEBUG[root@master configMap]#
    [root@master configMap]#

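Rolling out Pods after a ConfigMap update

Updating a ConfigMap does not currently trigger a rolling update of the Pods that use it. A rolling update can be forced by changing the annotations in the Deployment's Pod template.

    kubectl patch deployment my-nginx --patch '{"spec": {"template": {"metadata": {"annotations": {"version/config": "20241224" }}}}}'

The Pod has been recreated, and the Pod template annotations now include version/config: 20241224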

    [root@master configMap]# kubectl patch deployment my-nginx --patch '{"spec": {"template": {"metadata": {"annotations": {"version/config": "20241224" }}}}}'
    deployment.apps/my-nginx patched
    [root@master configMap]# kubectl get pod
    NAME                        READY   STATUS    RESTARTS      AGE
    my-nginx-64987b9879-p2btx   1/1     Running   0             5s
    nginx-dm-56996c5fdc-tmjws   1/1     Running   1 (21h ago)   22h
    nginx-dm-56996c5fdc-wcs8g   1/1     Running   1 (21h ago)   22h
    [root@master configMap]# kubectl describe deployment my-nginx
    Name:                   my-nginx
    Namespace:              default
    CreationTimestamp:      Tue, 24 Dec 2024 21:16:25 +0800
    Labels:                 <none>
    Annotations:            deployment.kubernetes.io/revision: 2
    Selector:               name=my-nginx
    Replicas:               1 desired | 1 updated | 1 total | 1 available | 0 unavailable
    StrategyType:           RollingUpdate
    MinReadySeconds:        0
    RollingUpdateStrategy:  25% max unavailable, 25% max surge
    Pod Template:
      Labels:       name=my-nginx
      Annotations:  version/config: 20241224
      Containers:
       my-nginx:
        Image:        192.168.16.110:20080/stady/myapp:v1
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:
          /etc/config from config-volume (rw)
      Volumes:
       config-volume:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      log-config
        Optional:  false
    Conditions:
      Type           Status  Reason
      ----           ------  ------
      Available      True    MinimumReplicasAvailable
      Progressing    True    NewReplicaSetAvailable
    OldReplicaSets:  my-nginx-599788c876 (0/0 replicas created)
    NewReplicaSet:   my-nginx-64987b9879 (1/1 replicas created)
    Events:
      Type    Reason             Age    From                   Message
      ----    ------             ----   ----                   -------
      Normal  ScalingReplicaSet  9m22s  deployment-controller  Scaled up replica set my-nginx-599788c876 to 1
      Normal  ScalingReplicaSet  37s    deployment-controller  Scaled up replica set my-nginx-64987b9879 to 1
      Normal  ScalingReplicaSet  36s    deployment-controller  Scaled down replica set my-nginx-599788c876 to 0 from 1
    [root@master configMap]#
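Instead of a hand-picked date, the annotation value can be derived from the configuration itself, so the Pod template only changes when the content changes. The following is a minimal sketch under some assumptions: the annotation key `checksum/config` is just a naming convention chosen here, the temporary file stands in for the real ConfigMap data, and `sha256sum` is assumed to be available (GNU coreutils).

```shell
set -eu

# Hypothetical local copy of the ConfigMap data.
cm_file="$(mktemp)"
printf 'log_level: DEBUG\n' > "$cm_file"

# Hash the content; a different hash means the Pod template annotation changes.
checksum="$(sha256sum "$cm_file" | cut -d ' ' -f 1)"
patch="$(printf '{"spec":{"template":{"metadata":{"annotations":{"checksum/config":"%s"}}}}}' "$checksum")"
echo "$patch"

# On a real cluster, apply it with:
#   kubectl patch deployment my-nginx --patch "$patch"
```

If no content-based trigger is needed, `kubectl rollout restart deployment my-nginx` is another way to force the same kind of rolling replacement.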

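After a ConfigMap is updated:

Environment variables populated from the ConfigMap are not updated.

Data in volumes mounted from the ConfigMap is updated after a delay (about 10 seconds in this test).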

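Secret

A Secret handles the configuration of sensitive data such as passwords, tokens, and keys, without exposing that data in an image or in a Pod spec. A Secret can be consumed as a volume or as environment variables.

There are three types of Secret:

Service Account: used to access the Kubernetes API. It is created automatically by Kubernetes and mounted automatically into the Pod at /var/run/secrets/kubernetes.io/serviceaccount

Opaque: a Secret whose values are base64-encoded, used to store passwords, keys, and so on

kubernetes.io/dockerconfigjson: used to store the credentials for a private Docker registry

Service Account

A Service Account is used to access the Kubernetes API and is created automatically by Kubernetes.

Taking the flannel Pod as an example, the corresponding files can be found in the mounted directory.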

    [root@master configMap]# kubectl get pod -A | grep flan
    kube-flannel   kube-flannel-ds-c22v9   1/1   Running   14 (122m ago)   45d
    kube-flannel   kube-flannel-ds-vv4c8   1/1   Running   18 (122m ago)   45d
    kube-flannel   kube-flannel-ds-zqllr   1/1   Running   16 (122m ago)   45d
    [root@master configMap]#
    [root@master configMap]# kubectl describe pod kube-flannel-ds-c22v9 -n kube-flannel
    Name:                 kube-flannel-ds-c22v9
    Namespace:            kube-flannel
    Priority:             2000001000
    Priority Class Name:  system-node-critical
    Service Account:      flannel
    Node:                 master/192.168.16.200
    Start Time:           Sat, 09 Nov 2024 19:39:26 +0800
    Labels:               app=flannel
                          controller-revision-hash=b54d875dc
                          k8s-app=flannel
                          pod-template-generation=1
                          tier=node
    Annotations:          <none>
    Status:               Running
    IP:                   192.168.16.200
    IPs:
      IP:           192.168.16.200
    Controlled By:  DaemonSet/kube-flannel-ds
    Init Containers:
      install-cni-plugin:
        Container ID:  docker://bc78acea68d5c1b899240ef141065108a1b02f9a9140029257f9a7351fc9b240
        Image:         docker.io/flannel/flannel-cni-plugin:v1.2.0
        Image ID:      docker-pullable://192.168.16.110:20080/k8s/flannel-cni-plugin@sha256:2180bb74f60bea56da2e9be2004271baa6dccc0960b7aeaf43a97fc4de9b1ae0
        Port:          <none>
        Host Port:     <none>
        Command:
          cp
        Args:
          -f
          /flannel
          /opt/cni/bin/flannel
        State:          Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Tue, 24 Dec 2024 19:46:18 +0800
          Finished:     Tue, 24 Dec 2024 19:46:19 +0800
        Ready:          True
        Restart Count:  1
        Environment:    <none>
        Mounts:
          /opt/cni/bin from cni-plugin (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-5rp2q (ro)
      install-cni:
        Container ID:  docker://6249eaae0226fa95c9bd3ce9087a6f1833f110bd41a95fcbd4c0aa4d527d1bcf
        Image:         docker.io/flannel/flannel:v0.22.3
        Image ID:      docker-pullable://192.168.16.110:20080/k8s/flannel@sha256:b2bba065c46f3a54db41cd5181b87baa0fca64eda8b511838cdc147dfc59e76d
        Port:          <none>
        Host Port:     <none>
        Command:
          cp
        Args:
          -f
          /etc/kube-flannel/cni-conf.json
          /etc/cni/net.d/10-flannel.conflist
        State:          Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Tue, 24 Dec 2024 19:46:20 +0800
          Finished:     Tue, 24 Dec 2024 19:46:20 +0800
        Ready:          True
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /etc/cni/net.d from cni (rw)
          /etc/kube-flannel/ from flannel-cfg (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-5rp2q (ro)
    Containers:
      kube-flannel:
        Container ID:  docker://802dccfa129d6f489c133ccf817a925213928b366e121f09c999682851981480
        Image:         docker.io/flannel/flannel:v0.22.3
        Image ID:      docker-pullable://192.168.16.110:20080/k8s/flannel@sha256:b2bba065c46f3a54db41cd5181b87baa0fca64eda8b511838cdc147dfc59e76d
        Port:          <none>
        Host Port:     <none>
        Command:
          /opt/bin/flanneld
        Args:
          --ip-masq
          --kube-subnet-mgr
        State:          Running
          Started:      Tue, 24 Dec 2024 19:46:56 +0800
        Last State:     Terminated
          Reason:       Error
          Exit Code:    1
          Started:      Tue, 24 Dec 2024 19:46:21 +0800
          Finished:     Tue, 24 Dec 2024 19:46:43 +0800
        Ready:          True
        Restart Count:  14
        Requests:
          cpu:     100m
          memory:  50Mi
        Environment:
          POD_NAME:           kube-flannel-ds-c22v9 (v1:metadata.name)
          POD_NAMESPACE:      kube-flannel (v1:metadata.namespace)
          EVENT_QUEUE_DEPTH:  5000
        Mounts:
          /etc/kube-flannel/ from flannel-cfg (rw)
          /run/flannel from run (rw)
          /run/xtables.lock from xtables-lock (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-5rp2q (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      run:
        Type:          HostPath (bare host directory volume)
        Path:          /run/flannel
        HostPathType:
      cni-plugin:
        Type:          HostPath (bare host directory volume)
        Path:          /opt/cni/bin
        HostPathType:
      cni:
        Type:          HostPath (bare host directory volume)
        Path:          /etc/cni/net.d
        HostPathType:
      flannel-cfg:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      kube-flannel-cfg
        Optional:  false
      xtables-lock:
        Type:          HostPath (bare host directory volume)
        Path:          /run/xtables.lock
        HostPathType:  FileOrCreate
      kube-api-access-5rp2q:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   Burstable
    Node-Selectors:              <none>
    Tolerations:                 :NoSchedule op=Exists
                                 node.kubernetes.io/disk-pressure:NoSchedule op=Exists
                                 node.kubernetes.io/memory-pressure:NoSchedule op=Exists
                                 node.kubernetes.io/network-unavailable:NoSchedule op=Exists
                                 node.kubernetes.io/not-ready:NoExecute op=Exists
                                 node.kubernetes.io/pid-pressure:NoSchedule op=Exists
                                 node.kubernetes.io/unreachable:NoExecute op=Exists
                                 node.kubernetes.io/unschedulable:NoSchedule op=Exists
    Events:                      <none>
    [root@master configMap]#
    [root@master configMap]#
    [root@master configMap]# kubectl exec kube-flannel-ds-c22v9 -n kube-flannel -- ls /var/run/secrets/kubernetes.io/serviceaccount
    Defaulted container "kube-flannel" out of: kube-flannel, install-cni-plugin (init), install-cni (init)
    ca.crt
    namespace
    token
    [root@master configMap]#

Opaque Secret

Creation

The data of an Opaque Secret is a map, and every value must be base64-encoded:

    [root@master configMap]# echo -n "admin" | base64
    YWRtaW4=
    [root@master configMap]# echo -n "123456" | base64
    MTIzNDU2
    [root@master configMap]#
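The `-n` flag matters here: without it, `echo` appends a newline, and that newline becomes part of the encoded value. A small sketch of the difference, using `printf '%s'` as a portable way to emit the string with no trailing newline:

```shell
set -eu

# With a trailing newline (plain echo): the newline is encoded too.
with_newline="$(echo "admin" | base64)"

# Without a trailing newline: this is the value that belongs in the Secret.
without_newline="$(printf '%s' "admin" | base64)"

# Round trip to confirm the clean value decodes back to the original text.
decoded="$(printf '%s' "$without_newline" | base64 --decode)"

echo "with newline:    $with_newline"
echo "without newline: $without_newline"
echo "decoded:         $decoded"
```

A password encoded with the stray newline would decode to a different string than intended, which typically shows up later as a confusing authentication failure.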

Create the manifest opaqueSecret.yaml

    apiVersion: v1
    kind: Secret
    metadata:
      name: mysecret
    type: Opaque
    data:
      password: MTIzNDU2
      username: YWRtaW4=

Check the result

    [root@master secrets]# kubectl apply -f opaqueSecret.yaml
    secret/mysecret created
    [root@master secrets]#
    [root@master secrets]# kubectl get secrets
    NAME       TYPE     DATA   AGE
    mysecret   Opaque   2      32s
    [root@master secrets]# kubectl get secret mysecret
    NAME       TYPE     DATA   AGE
    mysecret   Opaque   2      48s
    [root@master secrets]# kubectl get secret mysecret -o yaml
    apiVersion: v1
    data:
      password: MTIzNDU2
      username: YWRtaW4=
    kind: Secret
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","data":{"password":"MTIzNDU2","username":"YWRtaW4="},"kind":"Secret","metadata":{"annotations":{},"name":"mysecret","namespace":"default"},"type":"Opaque"}
      creationTimestamp: "2024-12-24T13:58:37Z"
      name: mysecret
      namespace: default
      resourceVersion: "198953"
      uid: 9d744d72-34a2-448f-84d1-5955df366b24
    type: Opaque
    [root@master secrets]#
    [root@master secrets]# kubectl get secret mysecret -o jsonpath="{.data.password}" | base64 --decode
    123456[root@master secrets]# kubectl get secret mysecret -o jsonpath="{.data.username}" | base64 --decode
    admin[root@master secrets]#
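If encoding each value by hand is inconvenient, the Secret API also accepts a `stringData` field with plain-text values, which the API server encodes into `data` when the object is stored. The sketch below writes such a manifest to a temporary file; the Secret name `mysecret-plain` is a hypothetical name chosen so it does not collide with the example above.

```shell
set -eu

manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: mysecret-plain      # hypothetical name
type: Opaque
stringData:                 # plain text; stored base64-encoded under .data
  username: admin
  password: "123456"        # quoted so YAML treats it as a string
EOF
echo "manifest written to $manifest"

# On a real cluster:
#   kubectl apply -f "$manifest"
#   kubectl get secret mysecret-plain -o jsonpath="{.data.username}" | base64 --decode
```

Note that base64 is an encoding, not encryption, so either form of the manifest should be handled as sensitive.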

Usage

Mounting a Secret as a volume

Create the manifest secretVolume.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        name: secret-volume-test
      name: secret-volume-test
    spec:
      volumes:
        - name: secret-volume
          secret:
            secretName: mysecret
      containers:
        - image: 192.168.16.110:20080/stady/myapp:v1
          name: db-secret-volume
          volumeMounts:
            - name: secret-volume
              mountPath: '/etc/secrets'
              readOnly: true

Check

    [root@master secrets]# kubectl apply -f secretVolume.yaml
    pod/secret-volume-test created
    [root@master secrets]#
    [root@master secrets]# kubectl get pod
    NAME                        READY   STATUS    RESTARTS      AGE
    my-nginx-64987b9879-p2btx   1/1     Running   0             46m
    nginx-dm-56996c5fdc-tmjws   1/1     Running   1 (22h ago)   23h
    nginx-dm-56996c5fdc-wcs8g   1/1     Running   1 (22h ago)   23h
    secret-volume-test          1/1     Running   0             9s
    [root@master secrets]# kubectl exec secret-volume-test -- ls /etc/secrets
    password
    username
    [root@master secrets]# kubectl exec secret-volume-test -- cat /etc/secrets/password
    123456[root@master secrets]# kubectl exec secret-volume-test -- cat /etc/secrets/username
    admin[root@master secrets]#

Exposing a Secret through environment variables

Create the manifest secretEnv.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: secret-env-pod
    spec:
      replicas: 2
      selector:
        matchLabels:
          name: secret-env
      template:
        metadata:
          labels:
            name: secret-env
        spec:
          containers:
            - name: secret-env-pod
              image: 192.168.16.110:20080/stady/myapp:v1
              ports:
                - containerPort: 80
              env:
                - name: TEST_USER
                  valueFrom:
                    secretKeyRef:
                      name: mysecret
                      key: username
                - name: TEST_PASSWORD
                  valueFrom:
                    secretKeyRef:
                      name: mysecret
                      key: password

Check the Pod's environment variables

    [root@master secrets]# kubectl apply -f secretEnv.yaml
    deployment.apps/secret-env-pod created
    [root@master secrets]# kubectl get pod
    NAME                              READY   STATUS    RESTARTS      AGE
    my-nginx-64987b9879-p2btx         1/1     Running   0             76m
    nginx-dm-56996c5fdc-tmjws         1/1     Running   1 (22h ago)   23h
    nginx-dm-56996c5fdc-wcs8g         1/1     Running   1 (22h ago)   23h
    secret-env-pod-6469d4d977-9bbfh   1/1     Running   0             3s
    secret-env-pod-6469d4d977-hj58v   1/1     Running   0             3s
    secret-volume-test                1/1     Running   0             30m
    [root@master secrets]# kubectl exec secret-env-pod-6469d4d977-9bbfh -- env
    PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
    HOSTNAME=secret-env-pod-6469d4d977-9bbfh
    TEST_USER=admin
    TEST_PASSWORD=123456
    ....
    [root@master secrets]#

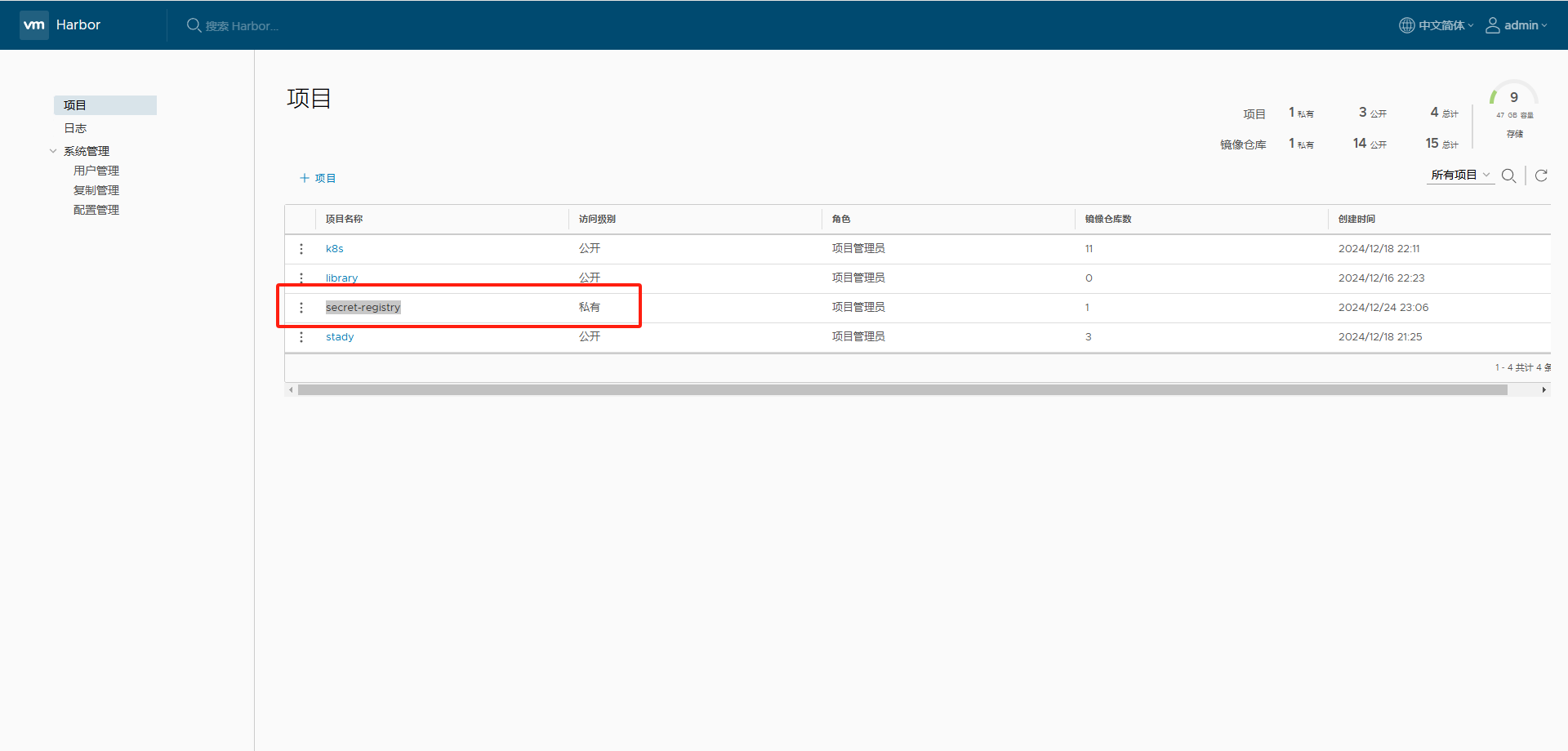

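kubernetes.io/dockerconfigjson

Harbor hosts a private project named secret-registry.

If the master or any node has previously logged in to the registry, run the logout command on each of them before continuing:

    docker logout 192.168.16.110:20080

Create the manifest secretRegistry1.yaml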

    apiVersion: v1
    kind: Pod
    metadata:
      name: myregistrykey-pod1
    spec:
      containers:
        - name: myregistrykey-con
          image: 192.168.16.110:20080/secret-registry/myapp:v1

The Pod fails to start because the image cannot be pulled.

    [root@master secrets]# kubectl apply -f secretRegistry1.yaml
    pod/myregistrykey-pod1 created
    [root@master secrets]# kubectl get pod
    NAME                 READY   STATUS             RESTARTS   AGE
    myregistrykey-pod1   0/1     ImagePullBackOff   0          4s
    ...
    [root@master secrets]# kubectl logs myregistrykey-pod1
    Error from server (BadRequest): container "myregistrykey-con" in pod "myregistrykey-pod1" is waiting to start: trying and failing to pull image
    [root@master secrets]# kubectl describe pod myregistrykey-pod1
    Name:             myregistrykey-pod1
    Namespace:        default
    Priority:         0
    Service Account:  default
    Node:             node1/192.168.16.201
    Start Time:       Tue, 24 Dec 2024 23:20:47 +0800
    Labels:           <none>
    Annotations:      <none>
    Status:           Pending
    IP:               10.244.1.75
    IPs:
      IP:  10.244.1.75
    Containers:
      myregistrykey-con:
        Container ID:
        Image:          192.168.16.110:20080/secret-registry/myapp:v1
        Image ID:
        Port:           <none>
        Host Port:      <none>
        State:          Waiting
          Reason:       ImagePullBackOff
        Ready:          False
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-b52vw (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      ContainersReady   False
      PodScheduled      True
    Volumes:
      kube-api-access-b52vw:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              <none>
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason     Age                From               Message
      ----     ------     ----               ----               -------
      Normal   Scheduled  25s                default-scheduler  Successfully assigned default/myregistrykey-pod1 to node1
      Normal   BackOff    22s (x2 over 23s)  kubelet            Back-off pulling image "192.168.16.110:20080/secret-registry/myapp:v1"
      Warning  Failed     22s (x2 over 23s)  kubelet            Error: ImagePullBackOff
      Normal   Pulling    11s (x2 over 24s)  kubelet            Pulling image "192.168.16.110:20080/secret-registry/myapp:v1"
      Warning  Failed     11s (x2 over 24s)  kubelet            Failed to pull image "192.168.16.110:20080/secret-registry/myapp:v1": Error response from daemon: pull access denied for 192.168.16.110:20080/secret-registry/myapp, repository does not exist or may require 'docker login': denied: requested access to the resource is denied
      Warning  Failed     11s (x2 over 24s)  kubelet            Error: ErrImagePull
    [root@master secrets]#

Use kubectl to create a Secret holding the Docker registry credentials

    kubectl create secret docker-registry myregistrykey --docker-server=192.168.16.110:20080 --docker-username=admin --docker-password=Harbor123456 --docker-email=test@test.com

Verify

    [root@master secrets]# kubectl create secret docker-registry myregistrykey --docker-server=192.168.16.110:20080 --docker-username=admin --docker-password=Harbor123456 --docker-email=test@test.com
    secret/myregistrykey created
    [root@master secrets]# kubectl get secret myregistrykey -o yaml
    apiVersion: v1
    data:
      .dockerconfigjson: eyJhdXRocyI6eyIxOTIuMTY4LjE2LjExMDoyMDA4MCI6eyJ1c2VybmFtZSI6ImFkbWluIiwicGFzc3dvcmQiOiJIYXJib3IxMjM0NTYiLCJlbWFpbCI6InRlc3RAdGVzdC5jb20iLCJhdXRoIjoiWVdSdGFXNDZTR0Z5WW05eU1USXpORFUyIn19fQ==
    kind: Secret
    metadata:
      creationTimestamp: "2024-12-24T15:24:15Z"
      name: myregistrykey
      namespace: default
      resourceVersion: "207451"
      uid: 403655ee-5087-426e-a853-b13b51b4331f
    type: kubernetes.io/dockerconfigjson
    [root@master secrets]# kubectl get secret myregistrykey -o jsonpath="{.data['\.dockerconfigjson']}" | base64 --decode
    {"auths":{"192.168.16.110:20080":{"username":"admin","password":"Harbor123456","email":"test@test.com","auth":"YWRtaW46SGFyYm9yMTIzNDU2"}}}[root@master secrets]#
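The `auth` field inside the decoded JSON is itself base64: it encodes `username:password`. As a small sketch, the value from the output above can be decoded and split like this (the variable names are illustrative only):

```shell
set -eu

# The "auth" value copied from the decoded .dockerconfigjson above.
auth_b64="YWRtaW46SGFyYm9yMTIzNDU2"

# Decode to "username:password", then split on the first colon.
creds="$(printf '%s' "$auth_b64" | base64 --decode)"
user="${creds%%:*}"
pass="${creds#*:}"

echo "user=$user"
echo "pass=$pass"
```

This shows that anyone who can read the Secret can recover the registry password, so access to it should be restricted accordingly.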

Create the manifest secretRegistry2.yaml

When creating the Pod, reference the Secret through imagePullSecrets

    apiVersion: v1
    kind: Pod
    metadata:
      name: myregistrykey-pod2
    spec:
      containers:
        - name: myregistrykey-con
          image: 192.168.16.110:20080/secret-registry/myapp:v1
      imagePullSecrets:
        - name: myregistrykey

Verify

The image is now pulled successfully and the Pod starts.

    [root@master secrets]# kubectl apply -f secretRegistry2.yaml
    pod/myregistrykey-pod2 created
    [root@master secrets]# kubectl get pod
    NAME                 READY   STATUS             RESTARTS   AGE
    myregistrykey-pod1   0/1     ImagePullBackOff   0          4m55s
    myregistrykey-pod2   1/1     Running            0          5s
    ...
    [root@master secrets]# kubectl describe pod myregistrykey-pod2
    Name:             myregistrykey-pod2
    Namespace:        default
    Priority:         0
    Service Account:  default
    Node:             node2/192.168.16.202
    Start Time:       Tue, 24 Dec 2024 23:25:37 +0800
    Labels:           <none>
    Annotations:      <none>
    Status:           Running
    IP:               10.244.2.63
    IPs:
      IP:  10.244.2.63
    Containers:
      myregistrykey-con:
        Container ID:   docker://008d9ba05b100f3a38ed3a0dac6d5445c25de75dea712012c72ecaa8644090bd
        Image:          192.168.16.110:20080/secret-registry/myapp:v1
        Image ID:       docker-pullable://192.168.16.110:20080/secret-registry/myapp@sha256:9eeca44ba2d410e54fccc54cbe9c021802aa8b9836a0bcf3d3229354e4c8870e
        Port:           <none>
        Host Port:      <none>
        State:          Running
          Started:      Tue, 24 Dec 2024 23:25:38 +0800
        Ready:          True
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-4lnxd (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      kube-api-access-4lnxd:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              <none>
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type    Reason     Age   From               Message
      ----    ------     ----  ----               -------
      Normal  Scheduled  18s   default-scheduler  Successfully assigned default/myregistrykey-pod2 to node2
      Normal  Pulling    17s   kubelet            Pulling image "192.168.16.110:20080/secret-registry/myapp:v1"
      Normal  Pulled     17s   kubelet            Successfully pulled image "192.168.16.110:20080/secret-registry/myapp:v1" in 93ms (93ms including waiting)
      Normal  Created    17s   kubelet            Created container myregistrykey-con
      Normal  Started    17s   kubelet            Started container myregistrykey-con
    [root@master secrets]#

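Volume

Files on a container's disk are ephemeral, which causes problems when important applications run in containers. First, when a container crashes, the kubelet restarts it, but the files in the container are lost: the container restarts in a clean state (the original state of the image). Second, when several containers run together in a Pod, they often need to share files. The Kubernetes Volume abstraction solves both problems.

A Kubernetes volume has an explicit lifetime, the same as the Pod that encloses it. A volume therefore outlives any container running in the Pod, and its data is preserved across container restarts. Of course, when the Pod ceases to exist, the volume ceases to exist as well. Perhaps more importantly, Kubernetes supports many types of volumes, and a Pod can use any number of them at the same time.

Volume types

Kubernetes supports the following types of volumes: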

awsElasticBlockStore azureDisk azureFile cephfs csi downwardAPI emptyDir

fc flocker gcePersistentDisk gitRepo glusterfs hostPath iscsi local nfs

persistentVolumeClaim projected portworxVolume quobyte rbd scaleIO secret

storageos vsphereVolume

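emptyDir

An emptyDir volume is first created when a Pod is assigned to a node, and it exists for as long as that Pod keeps running on that node. As the name says, it is initially empty. The containers in the Pod can all read and write the same files in the emptyDir volume.

Some uses for an emptyDir are:

Scratch space, for example for a disk-based merge sort

Checkpointing a long computation so that it can recover from crashes

Holding files that a content-manager container fetches while a web server container serves the data

Create the manifest emptyDir.yaml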

    apiVersion: v1
    kind: Pod
    metadata:
      name: empty-dir-pd
    spec:
      containers:
        - image: 192.168.16.110:20080/stady/myapp:v1
          name: empty-dir-container
          volumeMounts:
            - mountPath: /cache
              name: cache-volume
      volumes:
        - name: cache-volume
          emptyDir: {}
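The file-sharing use case is easier to see with two containers mounting the same emptyDir. The sketch below writes a manifest in which one container writes a file and the other reads it. The Pod name, container names, paths, and the `busybox:1.36` image are assumptions made for illustration.

```shell
set -eu

manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: empty-dir-shared            # hypothetical Pod name
spec:
  containers:
    - name: writer
      image: busybox:1.36           # assumed image; substitute your own
      command: ["/bin/sh", "-c", "echo hello > /cache/hello.txt; sleep 3600"]
      volumeMounts:
        - mountPath: /cache
          name: cache-volume
    - name: reader
      image: busybox:1.36
      # Reads the file the writer created; the mount path may differ per container.
      command: ["/bin/sh", "-c", "sleep 5; cat /data/hello.txt; sleep 3600"]
      volumeMounts:
        - mountPath: /data
          name: cache-volume
  volumes:
    - name: cache-volume
      emptyDir: {}
EOF
echo "manifest written to $manifest"

# On a real cluster:
#   kubectl apply -f "$manifest"
#   kubectl logs empty-dir-shared -c reader
```

Both containers see the same directory contents even though they mount the volume at different paths.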

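hostPath

A hostPath volume mounts a file or directory from the host node's filesystem into the Pod.

Some uses for a hostPath are:

Running a container that needs access to Docker internals: use a hostPath of /var/lib/docker

Running cAdvisor in a container: use a hostPath of /dev/cgroups

Allowing a Pod to specify whether a given hostPath should exist before the Pod runs, whether it should be created, and what it should exist as

In addition to the required path property, the user can optionally specify a type for a hostPath volume.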

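| Value | Meaning |
| --- | --- |
| (empty string) | The default, kept for backward compatibility: no checks are performed before the hostPath volume is mounted. |
| DirectoryOrCreate | If nothing exists at the given path, an empty directory is created there with permissions 0755, owned by the same group and user as the kubelet. |
| Directory | A directory must already exist at the given path. |
| FileOrCreate | If nothing exists at the given path, an empty file is created there with permissions 0644, owned by the same group and user as the kubelet. |
| File | A file must already exist at the given path. |
| Socket | A UNIX socket must already exist at the given path. |
| CharDevice | A character device must already exist at the given path. |
| BlockDevice | A block device must already exist at the given path. |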

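Take care when using this type of volume, because:

Pods with identical configuration (for example, created from a podTemplate) may behave differently on different nodes, since the files on each node differ

When Kubernetes adds resource-aware scheduling, as is planned, it will not be able to account for the resources used by a hostPath

Files or directories created on the underlying host are writable only by root. You either need to run your process as root in a privileged container, or change the file permissions on the host so that the hostPath volume can be written to

Create the manifest hostPath.yaml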

    apiVersion: v1
    kind: Pod
    metadata:
      name: host-path-pd
    spec:
      containers:
        - image: 192.168.16.110:20080/stady/myapp:v1
          name: host-path-container
          volumeMounts:
            - mountPath: /test-pd
              name: test-volume
      volumes:
        - name: test-volume
          hostPath:
            path: /data
            type: Directory

查看可以看到将node节点的/data目录 挂载到了pod容器中 /test-pd 位置

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 [root@master volume]# kubectl apply -f hostPath.yaml pod/host-path-pd created [root@master volume]# kubectl get pod NAME READY STATUS RESTARTS AGE empty-dir-pd 1/1 Running 0 13m host-path-pd 1/1 Running 0 8s nginx-dm-56996c5fdc-tmjws 1/1 Running 1 (23h ago) 25h nginx-dm-56996c5fdc-wcs8g 1/1 Running 1 (23h ago) 25h [root@master volume]# kubectl exec host-path-pd -- ls /test-pd/ DS Miniconda3-py39_23.9.0-0-Linux-x86_64.sh app bak docker k8s log pkg tmp [root@master volume]#

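PersistentVolume concepts

A PersistentVolume (PV) is a piece of storage provisioned by an administrator. It is part of the cluster: just as a node is a cluster resource, a PV is a cluster resource. PVs are volume plugins like Volumes, but their lifecycle is independent of any Pod that uses the PV. This API object captures the details of the storage implementation, be that NFS, iSCSI, or a cloud-provider-specific storage system.

A PersistentVolumeClaim (PVC) is a user's request for storage. It is similar to a Pod: Pods consume node resources, and PVCs consume PV resources. Pods can request specific levels of resources (CPU and memory); claims can request a specific size and access mode (for example, mounted once read/write or many times read-only).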
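A standalone claim makes the "size and access mode" part concrete. This is a minimal sketch: the claim name demo-claim is hypothetical, and the storage class name nfs is an assumption that must match the class of an available PV in your cluster.

```yaml
# Sketch only: the claim name is hypothetical.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim
spec:
  accessModes:
    - ReadWriteOnce          # requested access mode
  storageClassName: nfs      # assumed; only PVs of this class are candidates
  resources:
    requests:
      storage: 1Gi           # requested size; the bound PV may be larger
```

A Pod would then reference the claim under spec.volumes with persistentVolumeClaim.claimName: demo-claim.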

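Static PVs

A cluster administrator creates a number of PVs. They carry the details of the real storage that is available to cluster users. They exist in the Kubernetes API and are available for consumption.

Dynamic

When none of the static PVs created by the administrator match a user's PersistentVolumeClaim, the cluster may try to provision a volume dynamically for that PVC. This provisioning is based on StorageClasses: the PVC must request a storage class, and the administrator must have created and configured that class for dynamic provisioning to happen. A claim that requests the class "" effectively disables dynamic provisioning for itself.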
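The relationship between a StorageClass and the claims that request it can be sketched as follows. All names here are hypothetical, and in particular the provisioner example.com/nfs is a placeholder: dynamic provisioning only works if a provisioner with that name is actually deployed in the cluster.

```yaml
# Sketch only: class name, claim names, and provisioner are hypothetical.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-nfs
provisioner: example.com/nfs     # placeholder; must match a deployed provisioner
reclaimPolicy: Delete
---
# This claim asks the class above to provision a volume on demand.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dynamic-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: fast-nfs
  resources:
    requests:
      storage: 1Gi
---
# This claim opts out of dynamic provisioning: with the class set to "",
# it can only bind to a pre-created PV that has no storage class.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: static-only-claim
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ""
  resources:
    requests:
      storage: 1Gi
```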

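To enable dynamic provisioning based on storage class, the cluster administrator needs to enable the DefaultStorageClass admission controller on the API server. This can be done, for example, by making sure DefaultStorageClass appears in the comma-separated, ordered list of values passed to the API server's --admission-control flag (newer releases use --enable-admission-plugins instead).

Binding

A control loop in the master watches for new PVCs, finds a matching PV if possible, and binds them together. If a PV was dynamically provisioned for a new PVC, the loop always binds that PV to the PVC. Otherwise, the user always gets at least the storage they asked for, but the capacity may exceed what was requested. Once bound, a PersistentVolumeClaim binding is exclusive, regardless of how it was bound: a PVC-to-PV binding is a one-to-one mapping.

PVC protection

The purpose of PVC protection is to make sure that a PVC in active use by a Pod is not removed from the system, since removing it could cause data loss.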

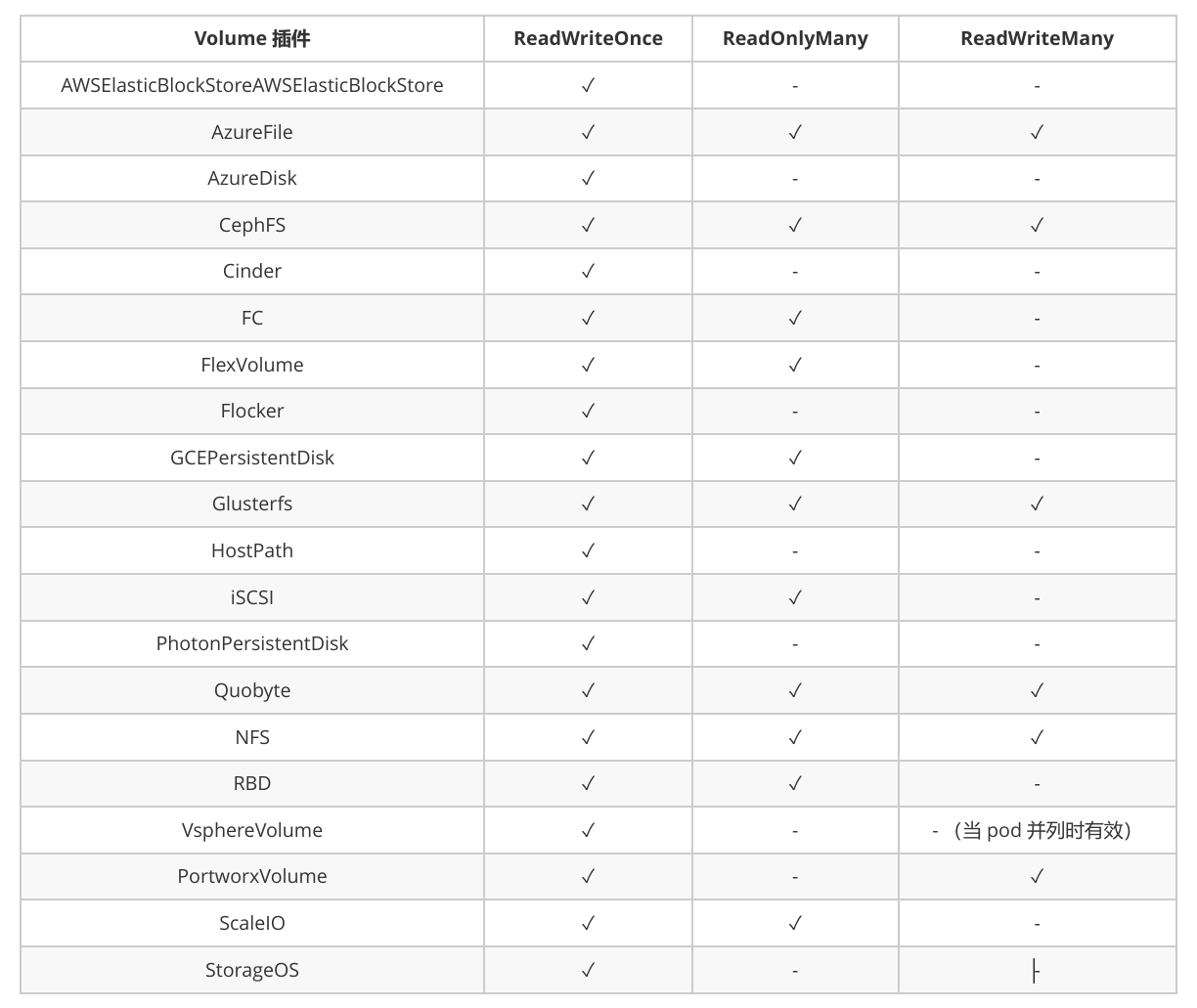

PersistentVolume types

PersistentVolume types are implemented as plugins. Kubernetes currently supports the following plugins:

GCEPersistentDisk AWSElasticBlockStore AzureFile AzureDisk FC (Fibre Channel)

FlexVolume Flocker NFS iSCSI RBD (Ceph Block Device) CephFS

Cinder (OpenStack block storage) Glusterfs VsphereVolume Quobyte Volumes

HostPath VMware Photon Portworx Volumes ScaleIO Volumes StorageOS

An example PersistentVolume manifest:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0003
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: slow
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    path: /tmp
    server: 172.17.0.2
```

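PV access modes

A PersistentVolume can be mounted on a host in any way the resource provider supports. Providers have different capabilities, and each PV's access modes are set to the specific modes that particular volume supports. For example, NFS can support multiple read/write clients, but a specific NFS PV might be exported on the server as read-only. Each PV has its own set of access modes describing that PV's capabilities.

ReadWriteOnce: the volume can be mounted read/write by a single node

ReadOnlyMany: the volume can be mounted read-only by many nodes

ReadWriteMany: the volume can be mounted read/write by many nodes

On the command line, the access modes are abbreviated as:

RWO - ReadWriteOnce

ROX - ReadOnlyMany

RWX - ReadWriteMany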
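A PV may list several access modes, while a claim asks for the one it needs. The sketch below assumes an NFS export at the hypothetical path /nfsdata/shared on a server at 192.168.16.200; the object names and the class name nfs are assumptions as well.

```yaml
# Sketch only: names and the export path are hypothetical.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-shared
spec:
  capacity:
    storage: 5Gi
  accessModes:               # every mode this volume is able to support
    - ReadWriteOnce
    - ReadOnlyMany
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata/shared
    server: 192.168.16.200
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-claim
spec:
  accessModes:
    - ReadWriteMany          # Pods on several nodes may mount it read/write
  storageClassName: nfs
  resources:
    requests:
      storage: 5Gi
```

In `kubectl get pv` output, such a volume would show its access modes as RWO,ROX,RWX.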

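Reclaim policies

Retain: manual reclamation

Recycle: basic scrub (rm -rf /thevolume/*)

Delete: the associated storage asset (such as an AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume) is deleted

Currently, only NFS and HostPath support the Recycle policy. AWS EBS, GCE PD, Azure Disk, and Cinder volumes support the Delete policy.

Phases

A volume is in one of the following phases:

Available: a free resource that is not yet bound to any claim

Bound: the volume is bound to a claim

Released: the claim has been deleted, but the resource has not yet been reclaimed by the cluster

Failed: automatic reclamation of the volume failed

The CLI shows the name of the PVC bound to each PV.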

Persistence demo: NFS

Install the NFS server

NFS packages need to be installed on every node.

The master host runs the NFS service:

```
yum install -y nfs-common nfs-utils rpcbind
```

The worker nodes use the NFS client:

```
yum install -y nfs-utils rpcbind
```

Configure the exported NFS directories:

```
[root@master ~]# mkdir /nfsdata
[root@master ~]# chmod 666 /nfsdata
[root@master ~]# chown nfsnobody /nfsdata
[root@master ~]# cat /etc/exports
[root@master ~]# cat > /etc/exports <<EOF
> /nfsdata/nfspv1 *(rw,no_root_squash,no_all_squash,sync)
> /nfsdata/nfspv2 *(rw,no_root_squash,no_all_squash,sync)
> /nfsdata/nfspv3 *(rw,no_root_squash,no_all_squash,sync)
> /nfsdata/nfspv4 *(rw,no_root_squash,no_all_squash,sync)
> /nfsdata/nfspv5 *(rw,no_root_squash,no_all_squash,sync)
> /nfsdata/nfspv6 *(rw,no_root_squash,no_all_squash,sync)
> EOF
[root@master ~]# systemctl start rpcbind
[root@master ~]# systemctl start nfs
[root@master ~]#
```

Check which directories the NFS server exports:

```
[root@master ~]# showmount -e 192.168.16.200
Export list for 192.168.16.200:
/nfsdata *
[root@master ~]#
```

Deploy a PV

Create a manifest named nfspv1.yaml:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv1
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata/nfspv1
    server: 192.168.16.200
```

```
[root@master pvc]# kubectl apply -f nfspv1.yaml
persistentvolume/nfspv1 created
[root@master pvc]#
[root@master pvc]# kubectl get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
nfspv1   10Gi       RWO            Retain           Available           nfs                     6m2s
[root@master pvc]#
```

Create a service that uses PVCs

The test uses 3 replicas, so at least three PVs are required. Create several more PVs with different sizes and reclaim policies:

```
mkdir /nfsdata/nfspv{2..6}
chmod 777 /nfsdata/nfspv{2..6}
```

Create a manifest named morePv.yaml that defines the additional PVs:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv2
spec:
  capacity:
    storage: 2Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata/nfspv2
    server: 192.168.16.200
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv3
spec:
  capacity:
    storage: 4Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata/nfspv3
    server: 192.168.16.200
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv4
spec:
  capacity:
    storage: 8Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: nfs
  nfs:
    path: /nfsdata/nfspv4
    server: 192.168.16.200
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv5
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: nfs
  nfs:
    path: /nfsdata/nfspv5
    server: 192.168.16.200
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfspv6
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: nfs
  nfs:
    path: /nfsdata/nfspv6
    server: 192.168.16.200
```

```
[root@master pvc]# kubectl apply -f morePv.yaml
persistentvolume/nfspv2 created
persistentvolume/nfspv3 created
persistentvolume/nfspv4 created
persistentvolume/nfspv5 created
persistentvolume/nfspv6 created
[root@master pvc]# kubectl get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
nfspv1   10Gi       RWO            Retain           Available           nfs                     12m
nfspv2   2Gi        RWO            Retain           Available           nfs                     8s
nfspv3   4Gi        RWO            Retain           Available           nfs                     8s
nfspv4   8Gi        RWO            Retain           Available           nfs                     8s
nfspv5   1Gi        RWO            Recycle          Available           nfs                     8s
nfspv6   1Gi        RWO            Delete           Available           nfs                     8s
```

Create the manifest for the PVC test, pvc.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: pvc-svc
  labels:
    app: nginx
spec:
  ports:
    - port: 80
      name: pvc-web
  clusterIP: None              # headless service, required by the StatefulSet
  selector:
    app: pvc-nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: stateful-set-web
spec:
  selector:
    matchLabels:
      app: pvc-nginx
  serviceName: pvc-svc
  replicas: 3
  template:
    metadata:
      labels:
        app: pvc-nginx
    spec:
      containers:
        - name: nginx-c
          image: 192.168.16.110:20080/stady/myapp:v1
          ports:
            - containerPort: 80
              name: pvc-web
          volumeMounts:
            - name: pvc-vm-www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:        # one PVC is created per replica from this template
    - metadata:
        name: pvc-vm-www
      spec:
        accessModes: [ "ReadWriteOnce" ]
        storageClassName: nfs
        resources:
          requests:
            storage: 1Gi
```

The Pods are created successfully, and three PVs are now bound.

For each 1Gi claim, the PVs that were picked are the ones that waste the least capacity (the two 1Gi volumes and the 2Gi volume).

```
[root@master pvc]# kubectl apply -f pvc.yaml
service/pvc-svc created
statefulset.apps/stateful-set-web created
[root@master pvc]# kubectl get pod
NAME                        READY   STATUS    RESTARTS      AGE
empty-dir-pd                1/1     Running   1 (21h ago)   21h
host-path-pd                1/1     Running   1 (21h ago)   21h
nginx-dm-56996c5fdc-tmjws   1/1     Running   2 (21h ago)   46h
nginx-dm-56996c5fdc-wcs8g   1/1     Running   2 (21h ago)   46h
stateful-set-web-0          1/1     Running   0             7s
stateful-set-web-1          1/1     Running   0             5s
stateful-set-web-2          1/1     Running   0             2s
[root@master pvc]# kubectl get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                   STORAGECLASS   REASON   AGE
nfspv1   10Gi       RWO            Retain           Available                                           nfs                     13m
nfspv2   2Gi        RWO            Retain           Bound       default/pvc-vm-www-stateful-set-web-2   nfs                     86s
nfspv3   4Gi        RWO            Retain           Available                                           nfs                     86s
nfspv4   8Gi        RWO            Retain           Available                                           nfs                     86s
nfspv5   1Gi        RWO            Recycle          Bound       default/pvc-vm-www-stateful-set-web-0   nfs                     86s
nfspv6   1Gi        RWO            Delete           Bound       default/pvc-vm-www-stateful-set-web-1   nfs                     86s
[root@master pvc]# kubectl get pvc
NAME                            STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
pvc-vm-www-stateful-set-web-0   Bound    nfspv5   1Gi        RWO            nfs            18s
pvc-vm-www-stateful-set-web-1   Bound    nfspv6   1Gi        RWO            nfs            16s
pvc-vm-www-stateful-set-web-2   Bound    nfspv2   2Gi        RWO            nfs            13s
[root@master pvc]#
```

Write an index.html into the storage behind stateful-set-web-0 (nfspv5) and fetch it from the Pod:

```
[root@master pvc]# kubectl get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                   STORAGECLASS   REASON   AGE
nfspv1   10Gi       RWO            Retain           Available                                           nfs                     82m
nfspv2   2Gi        RWO            Retain           Bound       default/pvc-vm-www-stateful-set-web-2   nfs                     28s
nfspv3   4Gi        RWO            Retain           Available                                           nfs                     28s
nfspv4   8Gi        RWO            Retain           Available                                           nfs                     28s
nfspv5   1Gi        RWO            Recycle          Bound       default/pvc-vm-www-stateful-set-web-0   nfs                     28s
nfspv6   1Gi        RWO            Delete           Bound       default/pvc-vm-www-stateful-set-web-1   nfs                     28s
[root@master pvc]# cat > /nfsdata/nfspv5/index.html <<EOF
> aaaaa
> EOF
[root@master pvc]#
[root@master pvc]# kubectl get pod -o wide
NAME                        READY   STATUS    RESTARTS      AGE   IP             NODE    NOMINATED NODE   READINESS GATES
empty-dir-pd                1/1     Running   1 (22h ago)   23h   10.244.1.77    node1   <none>           <none>
host-path-pd                1/1     Running   1 (22h ago)   23h   10.244.2.67    node2   <none>           <none>
nginx-dm-56996c5fdc-tmjws   1/1     Running   2 (22h ago)   2d    10.244.1.79    node1   <none>           <none>
nginx-dm-56996c5fdc-wcs8g   1/1     Running   2 (22h ago)   2d    10.244.2.68    node2   <none>           <none>
stateful-set-web-0          1/1     Running   0             98s   10.244.1.100   node1   <none>           <none>
stateful-set-web-1          1/1     Running   0             95s   10.244.2.76    node2   <none>           <none>
stateful-set-web-2          1/1     Running   0             92s   10.244.1.101   node1   <none>           <none>
[root@master pvc]# curl 10.244.1.100
aaaaa
[root@master pvc]#
```

The PVs currently in use cover all three reclaim policies: Delete, Recycle, and Retain. Deleting the StatefulSet and Service leaves the PVCs and their bindings in place:

```
[root@master pvc]# kubectl delete -f pvc.yaml
service "pvc-svc" deleted
statefulset.apps "stateful-set-web" deleted
[root@master pvc]# kubectl get pvc
NAME                            STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
pvc-vm-www-stateful-set-web-0   Bound    nfspv5   1Gi        RWO            nfs            3m7s
pvc-vm-www-stateful-set-web-1   Bound    nfspv6   1Gi        RWO            nfs            3m5s
pvc-vm-www-stateful-set-web-2   Bound    nfspv2   2Gi        RWO            nfs            3m2s
[root@master pvc]# kubectl get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                   STORAGECLASS   REASON   AGE
nfspv1   10Gi       RWO            Retain           Available                                           nfs                     5m23s
nfspv2   2Gi        RWO            Retain           Bound       default/pvc-vm-www-stateful-set-web-2   nfs                     5m16s
nfspv3   4Gi        RWO            Retain           Available                                           nfs                     5m16s
nfspv4   8Gi        RWO            Retain           Available                                           nfs                     5m16s
nfspv5   1Gi        RWO            Retain           Bound       default/pvc-vm-www-stateful-set-web-0   nfs                     5m16s
nfspv6   1Gi        RWO            Retain           Bound       default/pvc-vm-www-stateful-set-web-1   nfs                     5m16s
```

After the PVCs are deleted, the PVs end up in different states depending on their reclaim policy:

```
[root@master pvc]# kubectl delete pvc pvc-vm-www-stateful-set-web-2
persistentvolumeclaim "pvc-vm-www-stateful-set-web-2" deleted
[root@master pvc]# kubectl get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                   STORAGECLASS   REASON   AGE
nfspv1   10Gi       RWO            Retain           Available                                           nfs                     6m23s
nfspv2   2Gi        RWO            Retain           Released    default/pvc-vm-www-stateful-set-web-2   nfs                     6m16s
nfspv3   4Gi        RWO            Retain           Available                                           nfs                     6m16s
nfspv4   8Gi        RWO            Retain           Available                                           nfs                     6m16s
nfspv5   1Gi        RWO            Retain           Bound       default/pvc-vm-www-stateful-set-web-0   nfs                     6m16s
nfspv6   1Gi        RWO            Retain           Bound       default/pvc-vm-www-stateful-set-web-1   nfs                     6m16s
[root@master pvc]#
[root@master pvc]# kubectl delete pvc pvc-vm-www-stateful-set-web-0 pvc-vm-www-stateful-set-web-1 pvc-vm-www-stateful-set-web-2
persistentvolumeclaim "pvc-vm-www-stateful-set-web-0" deleted
persistentvolumeclaim "pvc-vm-www-stateful-set-web-1" deleted
persistentvolumeclaim "pvc-vm-www-stateful-set-web-2" deleted
[root@master pvc]# kubectl get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM                                   STORAGECLASS   REASON   AGE
nfspv1   10Gi       RWO            Retain           Available                                           nfs                     64s
nfspv2   2Gi        RWO            Retain           Released    default/pvc-vm-www-stateful-set-web-2   nfs                     59s
nfspv3   4Gi        RWO            Retain           Available                                           nfs                     59s
nfspv4   8Gi        RWO            Retain           Available                                           nfs                     59s
nfspv5   1Gi        RWO            Recycle          Failed      default/pvc-vm-www-stateful-set-web-0   nfs                     59s
nfspv6   1Gi        RWO            Delete           Failed      default/pvc-vm-www-stateful-set-web-1   nfs                     59s
```

For an NFS PersistentVolume (PV), the reclaimPolicy values behave as follows:

Retain: this is the default reclaim policy. When the PVC is deleted, the PV is neither reclaimed nor deleted automatically; an administrator has to deal with the data on the PV manually.

Recycle: this policy tries to delete everything on the PV so that the PV can be reused. Not every volume plugin supports the Recycle policy; NFS is one of those that do.

Delete: this policy deletes the PV together with its associated storage asset. It is usually supported for dynamically provisioned PVs, but usually not for statically configured PVs such as NFS, because the lifecycle of an NFS PV is normally not managed by Kubernetes.

```
[root@master pvc]# kubectl describe pv nfspv6
Name:            nfspv6
Labels:          <none>
Annotations:     pv.kubernetes.io/bound-by-controller: yes
Finalizers:      [kubernetes.io/pv-protection]
StorageClass:    nfs
Status:          Failed
Claim:           default/pvc-vm-www-stateful-set-web-1
Reclaim Policy:  Delete
Access Modes:    RWO
VolumeMode:      Filesystem
Capacity:        1Gi
Node Affinity:   <none>
Message:         error getting deleter volume plugin for volume "nfspv6": no deletable volume plugin matched
Source:
    Type:      NFS (an NFS mount that lasts the lifetime of a pod)
    Server:    192.168.16.200
    Path:      /nfsdata/nfspv6
    ReadOnly:  false
Events:
  Type     Reason              Age   From                         Message
  ----     ------              ----  ----                         -------
  Warning  VolumeFailedDelete  26s   persistentvolume-controller  error getting deleter volume plugin for volume "nfspv6": no deletable volume plugin matched
```

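About StatefulSet

The Pod name (its network identity) follows the pattern $(statefulset name)-$(ordinal). In the example above: stateful-set-web-0, stateful-set-web-1, stateful-set-web-2

A StatefulSet gives each Pod replica a DNS name of the form $(podname).$(headless service name). Services therefore talk to each other through the Pod's domain name rather than its Pod IP: when the node hosting a Pod fails, the Pod is moved to another node and its IP changes, but its domain name does not

A StatefulSet uses a headless service to control the Pods' domain. The FQDN of that service is $(service name).$(namespace).svc.cluster.local, where "cluster.local" is the cluster domain

Based on volumeClaimTemplates, one PVC is created for each Pod. The PVC name follows the pattern $(volumeClaimTemplates.name)-$(pod name). In the example above, volumeClaimTemplates.name is pvc-vm-www and the Pod names are stateful-set-web-[0-2], so the PVCs created are pvc-vm-www-stateful-set-web-0, pvc-vm-www-stateful-set-web-1, and pvc-vm-www-stateful-set-web-2

Deleting a Pod does not delete its PVC; manually deleting the PVC releases the PV automatically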
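The per-Pod DNS name can be checked from inside the cluster. The sketch below is a throwaway Pod that resolves the name of the first replica; it assumes the StatefulSet stateful-set-web and the headless service pvc-svc are running in the default namespace, that the cluster domain is cluster.local, and that a `busybox` image can be pulled. The Pod name dns-check is hypothetical.

```yaml
# Sketch only: assumes the default namespace and a pullable busybox image.
apiVersion: v1
kind: Pod
metadata:
  name: dns-check
spec:
  restartPolicy: Never
  containers:
    - name: lookup
      image: busybox
      # $(podname).$(headless service name).$(namespace).svc.cluster.local
      command:
        - sh
        - -c
        - nslookup stateful-set-web-0.pvc-svc.default.svc.cluster.local
```

`kubectl logs dns-check` should then print the address that the name resolves to, which can be compared with the Pod IP from `kubectl get pod -o wide`.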

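StatefulSet start and stop ordering:

Ordered deployment: when a StatefulSet with several Pod replicas is deployed, the Pods are created sequentially (from 0 to N-1), and all earlier Pods must be Running and Ready before the next Pod is started.

Ordered deletion: when Pods are deleted, they are terminated in order from N-1 to 0.

Ordered scaling: when scaling up, as with deployment, all preceding Pods must be Running and Ready.

StatefulSet use cases:

Stable persistent storage: a Pod can still reach the same persistent data after being rescheduled. Implemented with PVCs.

Stable network identity: a Pod's PodName and HostName stay the same after rescheduling.

Ordered deployment and ordered scale-up, implemented with init containers.

Ordered scale-down.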

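Cluster scheduler

Scheduling overview

The Scheduler is the Kubernetes scheduler. Its main job is to assign the Pods you define to nodes in the cluster. That sounds simple, but there are several things to take into account:

Fairness: how to make sure every node gets allocated resources

Efficient resource usage: all cluster resources should be used as fully as possible

Efficiency: scheduling must perform well and be able to place large batches of Pods quickly

Flexibility: users can control the scheduling logic according to their own needs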

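Scheduling process

Scheduling happens in several steps. First, nodes that do not satisfy the requirements are filtered out; this step is called predicate. Next, the remaining nodes are ranked by priority; this step is called priority. Finally, the node with the highest priority is chosen. If any step fails, the error is returned immediately.

The predicate checks include:

PodFitsResources: whether the node's remaining resources exceed what the Pod requests

PodFitsHost: if the Pod specifies a NodeName, whether the node's name matches it

PodFitsHostPorts: whether the ports already in use on the node conflict with the ports the Pod requests

PodSelectorMatches: filters out nodes that do not match the labels specified by the Pod

NoDiskConflict: the volumes already mounted must not conflict with the volumes the Pod specifies, unless both are read-only

If no node passes the predicate step, the Pod stays in the Pending state and scheduling is retried until some node satisfies the requirements.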
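To connect these checks to an actual manifest, the sketch below marks which Pod fields each predicate looks at. The Pod name, the disktype=ssd label, and the host port are hypothetical; the mapping in the comments follows the descriptions above.

```yaml
# Sketch only: the name, node label, and host port are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: predicate-demo
spec:
  # PodSelectorMatches: only nodes carrying this label pass the filter.
  nodeSelector:
    disktype: ssd
  containers:
    - name: app
      image: 192.168.16.110:20080/stady/myapp:v1
      # PodFitsResources: the node must have this much unreserved CPU and memory.
      resources:
        requests:
          cpu: 500m
          memory: 256Mi
      # PodFitsHostPorts: port 8080 must still be free on the node.
      ports:
        - containerPort: 80
          hostPort: 8080
```

If no node carries the disktype=ssd label, this Pod stays Pending, which matches the behaviour described above.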

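Priorities are expressed as a set of key-value pairs: the key is the name of the priority item, and the value is its weight (how important that item is). The priority options include:

LeastRequestedPriority: the weight is determined from CPU and memory utilization; the lower the utilization, the higher the weight. In other words, this priority favours nodes with a lower proportion of resources in use

BalancedResourceAllocation: the closer a node's CPU and memory utilization are to each other, the higher the weight. This should be used together with the one above, not on its own

ImageLocalityPriority: favours nodes that already have the images the Pod needs; the larger the total size of those images, the higher the weight

An algorithm combines all priority items and their weights to produce the final result.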
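In recent Kubernetes releases these weights are set through the scheduler's configuration file rather than a policy of named priorities. The following is a hedged sketch of a KubeSchedulerConfiguration; the mapping of the older priority names to the NodeResourcesFit, NodeResourcesBalancedAllocation, and ImageLocality plugins is my reading of the current scheduler, and the weights are arbitrary illustrative values, so check it against the documentation for your version before using it.

```yaml
# Sketch only: weights are illustrative, and the plugin mapping is an assumption.
apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
  - schedulerName: default-scheduler
    plugins:
      score:
        enabled:
          # Roughly BalancedResourceAllocation
          - name: NodeResourcesBalancedAllocation
            weight: 2
          # Roughly ImageLocalityPriority
          - name: ImageLocality
            weight: 1
    pluginConfig:
      # Roughly LeastRequestedPriority: prefer nodes with the least allocated resources.
      - name: NodeResourcesFit
        args:
          scoringStrategy:
            type: LeastAllocated
            resources:
              - name: cpu
                weight: 1
              - name: memory
                weight: 1
```

The file would be passed to kube-scheduler with its --config flag.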

Custom schedulers

Besides the scheduler that ships with Kubernetes, you can write your own. The spec.schedulerName field selects which scheduler handles a Pod. For example, the Pod below is scheduled by my-scheduler instead of the default default-scheduler:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: annotation-second-scheduler
  labels:
    name: multischeduler-example
spec:
  schedulerName: my-scheduler    # field name is case-sensitive
  containers:
    - name: pod-with-second-annotation-container
      image: gcr.io/google_containers/pause:2.0
```

Scheduling affinity

Node affinity

pod.spec.affinity.nodeAffinity

requiredDuringSchedulingIgnoredDuringExecution: hard policy

preferredDuringSchedulingIgnoredDuringExecution: soft policy

requiredDuringSchedulingIgnoredDuringExecution

The labels on each node can be listed with the following command:

```
[root@master ~]# kubectl get node --show-labels
NAME     STATUS   ROLES           AGE   VERSION   LABELS
master   Ready    control-plane   46d   v1.28.2   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=
node1    Ready    <none>          46d   v1.28.2   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux
node2    Ready    <none>          46d   v1.28.2   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux
[root@master ~]#
```

Define a hard affinity rule that keeps the Pod off node2:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity
  labels:
    app: node-affinity-pod
spec:
  containers:
    - name: with-node-affinity
      image: 192.168.16.110:20080/stady/myapp:v1
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: kubernetes.io/hostname
                operator: NotIn
                values:
                  - node2
```

Recreate the Pod several times and watch where it lands; it is never node2:

```
[root@master affi]# kubectl delete -f affiPod1.yaml && kubectl apply -f affiPod1.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node1   <none>           <none>
[root@master affi]#
[root@master affi]# kubectl delete -f affiPod1.yaml && kubectl apply -f affiPod1.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node1   <none>           <none>
[root@master affi]#
[root@master affi]# kubectl delete -f affiPod1.yaml && kubectl apply -f affiPod1.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node1   <none>           <none>
[root@master affi]#
```

Change operator: NotIn to operator: In in the manifest and try again; the Pod now always lands on node2:

```
[root@master affi]# kubectl delete -f affiPod1.yaml && kubectl apply -f affiPod1.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node2   <none>           <none>
[root@master affi]#
[root@master affi]# kubectl delete -f affiPod1.yaml && kubectl apply -f affiPod1.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node2   <none>           <none>
[root@master affi]#
[root@master affi]# kubectl delete -f affiPod1.yaml && kubectl apply -f affiPod1.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node2   <none>           <none>
[root@master affi]#
[root@master affi]# kubectl delete -f affiPod1.yaml && kubectl apply -f affiPod1.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node2   <none>           <none>
[root@master affi]#
```

Change node2 to a node name that does not exist, node3, and the Pod stays Pending, because no node satisfies the rule:

```
[root@master affi]# kubectl delete -f affiPod1.yaml && kubectl apply -f affiPod1.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS    RESTARTS   AGE   IP       NODE     NOMINATED NODE   READINESS GATES
affinity   0/1     Pending   0          0s    <none>   <none>   <none>           <none>
[root@master affi]#
```

preferredDuringSchedulingIgnoredDuringExecution

Define a soft affinity rule that prefers node3 where possible:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity
  labels:
    app: node-affinity-pod
spec:
  containers:
    - name: with-node-affinity
      image: 192.168.16.110:20080/stady/myapp:v1
  affinity:
    nodeAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 1
          preference:
            matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                  - node3
```

There is no node3, yet the Pod is still created. In these runs the best match was node1:

```
[root@master affi]# kubectl delete -f affiPod2.yaml && kubectl apply -f affiPod2.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node1   <none>           <none>
[root@master affi]# kubectl delete -f affiPod2.yaml && kubectl apply -f affiPod2.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node1   <none>           <none>
[root@master affi]# kubectl delete -f affiPod2.yaml && kubectl apply -f affiPod2.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node1   <none>           <none>
[root@master affi]#
```

Change node3 to node2 in the manifest. As long as node2 has enough resources, the Pod is started there in preference to other nodes:

```
[root@master affi]# kubectl delete -f affiPod2.yaml && kubectl apply -f affiPod2.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node2   <none>           <none>
[root@master affi]# kubectl delete -f affiPod2.yaml && kubectl apply -f affiPod2.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node2   <none>           <none>
[root@master affi]# kubectl delete -f affiPod2.yaml && kubectl apply -f affiPod2.yaml && kubectl get pod -o wide
pod "affinity" deleted
pod/affinity created
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE    NOMINATED NODE   READINESS GATES
affinity   0/1     ContainerCreating   0          0s    <none>   node2   <none>           <none>
[root@master affi]#
```

Combining hard and soft rules

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity
  labels:
    app: node-affinity-pod
spec:
  containers:
    - name: with-node-affinity
      image: 192.168.16.110:20080/stady/myapp:v1
  affinity:
    nodeAffinity:
      # Hard rule: never schedule onto the excluded host.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: kubernetes.io/hostname
                operator: NotIn
                values:
                  - node02
      # Soft rule: prefer nodes labelled source=ttt.
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 1
          preference:
            matchExpressions:
              - key: source
                operator: In
                values:
                  - ttt
```

A node can be labelled with the following command, and the label can then be referenced in node affinity rules:

```
kubectl label nodes <node-name> <key>=<value>
```

```
[root@master affi]# kubectl label nodes node1 source=ttt
node/node1 labeled
[root@master affi]# kubectl get node --show-labels
NAME     STATUS   ROLES           AGE   VERSION   LABELS
master   Ready    control-plane   46d   v1.28.2   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=
node1    Ready    <none>          46d   v1.28.2   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node1,kubernetes.io/os=linux,source=ttt
node2    Ready    <none>          46d   v1.28.2   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux
[root@master affi]#
```

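Key-value operators

In: the label's value is in a given list

NotIn: the label's value is not in a given list

Gt: the label's value is greater than a given value

Lt: the label's value is less than a given value

Exists: the label exists

DoesNotExist: the label does not exist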
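The examples in this post only use In and NotIn, so here is a hedged sketch of Exists and Gt. The Pod name and the cpu-count label are hypothetical (nodes would need to be labelled with it first); Gt and Lt take exactly one value, written as a string and compared as an integer.

```yaml
# Sketch only: the Pod name and the cpu-count label are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: affinity-operators
spec:
  containers:
    - name: app
      image: 192.168.16.110:20080/stady/myapp:v1
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          # Expressions inside one term are ANDed together.
          - matchExpressions:
              # The node must carry a "source" label, whatever its value.
              - key: source
                operator: Exists
              # The node's cpu-count label must be an integer greater than 4.
              - key: cpu-count
                operator: Gt
                values:
                  - "4"
```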

Pod affinity

pod.spec.affinity.podAffinity/podAntiAffinity

preferredDuringSchedulingIgnoredDuringExecution: soft policy

requiredDuringSchedulingIgnoredDuringExecution: hard policy

Create a manifest named affiPod3.yaml:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-pod-1
  labels:
    app: pod-1
spec:
  containers:
    - name: with-node-affinity
      image: 192.168.16.110:20080/stady/myapp:v1
---
apiVersion: v1
kind: Pod
metadata:
  name: affinity-pod-2
  labels:
    app: pod-2
spec:
  containers:
    - name: with-node-affinity
      image: 192.168.16.110:20080/stady/myapp:v1
---
apiVersion: v1
kind: Pod
metadata:
  name: affinity-pod-3
  labels:
    app: pod-3
spec:
  containers:
    - name: pod-3
      image: 192.168.16.110:20080/stady/myapp:v1
  affinity:
    # Hard rule: run on the same host as a Pod labelled app=pod-1.
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
              - key: app
                operator: In
                values:
                  - pod-1
          topologyKey: kubernetes.io/hostname
    # Soft rule: avoid hosts that run a Pod labelled app=pod-2.
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 1
          podAffinityTerm:
            labelSelector:
              matchExpressions:
                - key: app
                  operator: In
                  values:
                    - pod-2
            topologyKey: kubernetes.io/hostname
```

```
[root@master affi]#
pod/affinity-pod-1 created
pod/affinity-pod-2 created
pod/affinity-pod-3 created
[root@master affi]#
NAME             READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
affinity-pod-1   1/1     Running   0          7s    10.244.1.126   node1   <none>           <none>
affinity-pod-2   1/1     Running   0          7s    10.244.2.94    node2   <none>           <none>
affinity-pod-3   1/1     Running   0          7s    10.244.1.125   node1   <none>           <none>
[root@master affi]#
```

Swap the order of pod-1 and pod-2 in the manifest. With this order, pod-1 ends up on node2:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-pod-2
  labels:
    app: pod-2
spec:
  containers:
    - name: with-node-affinity
      image: 192.168.16.110:20080/stady/myapp:v1
---
apiVersion: v1
kind: Pod
metadata:
  name: affinity-pod-1
  labels:
    app: pod-1
spec:
  containers:
    - name: with-node-affinity
      image: 192.168.16.110:20080/stady/myapp:v1
---
apiVersion: v1
kind: Pod
metadata:
  name: affinity-pod-3
  labels:
    app: pod-3
spec:
  containers:
    - name: pod-3
      image: 192.168.16.110:20080/stady/myapp:v1
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
              - key: app
                operator: In
                values:
                  - pod-1
          topologyKey: kubernetes.io/hostname
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
        - weight: 1
          podAffinityTerm:
            labelSelector:
              matchExpressions:
                - key: app
                  operator: In
                  values:
                    - pod-2
            topologyKey: kubernetes.io/hostname
```

Because of its affinity rule, pod-3 follows pod-1 and is also created on node2:

```
[root@master affi]#
pod/affinity-pod-2 created
pod/affinity-pod-1 created
pod/affinity-pod-3 created
[root@master affi]#
NAME                        READY   STATUS    RESTARTS   AGE     IP             NODE    NOMINATED NODE   READINESS GATES   LABELS
affinity-pod-1              1/1     Running   0          3s      10.244.2.96    node2   <none>           <none>            app=pod-1
affinity-pod-2              1/1     Running   0          3s      10.244.1.129   node1   <none>           <none>            app=pod-2
affinity-pod-3              1/1     Running   0          3s      10.244.2.97    node2   <none>           <none>            app=pod-3
nginx-dm-56996c5fdc-n88vt   1/1     Running   0          3m35s   10.244.2.93    node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
nginx-dm-56996c5fdc-z825r   1/1     Running   0          3m35s   10.244.1.124   node1   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
[root@master affi]#
```

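The affinity and anti-affinity scheduling policies compare as follows:

| Policy | Matches labels of | Operators | Topology domain support |
| --- | --- | --- | --- |
| nodeAffinity | Node | In, NotIn, Exists, DoesNotExist, Gt, Lt | No |
| podAffinity | Pod | In, NotIn, Exists, DoesNotExist | Yes |
| podAntiAffinity | Pod | In, NotIn, Exists, DoesNotExist | Yes |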
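A common use of podAntiAffinity together with a topology domain is spreading the replicas of one workload across nodes. The sketch below is an assumption-laden illustration, not part of the original walkthrough: the Deployment name and label are hypothetical, and with a hard rule like this the number of replicas cannot exceed the number of schedulable nodes.

```yaml
# Sketch only: the Deployment name and label are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: spread-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: spread-demo
  template:
    metadata:
      labels:
        app: spread-demo
    spec:
      affinity:
        podAntiAffinity:
          # Hard rule: no two Pods with app=spread-demo on the same host.
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - spread-demo
              topologyKey: kubernetes.io/hostname
      containers:
        - name: app
          image: 192.168.16.110:20080/stady/myapp:v1
```

Switching to preferredDuringSchedulingIgnoredDuringExecution would turn this into a best-effort spread that still allows extra replicas to share a node.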

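Taints and tolerations

Node affinity is a property of Pods (a preference or a hard requirement) that attracts them to a particular class of nodes. A taint is the opposite: it lets a node repel a particular class of Pods.

Taints and tolerations work together to keep Pods off nodes that are unsuitable for them. One or more taints can be applied to each node, which means the node will not accept any Pod that does not tolerate those taints. Applying a toleration to a Pod means the Pod may (but is not required to) be scheduled onto nodes with matching taints.

Taints

Composition of a taint

The kubectl taint command sets a taint on a node. Once a node is tainted, it stands in a repelling relationship to Pods.

Each taint has the form key=value:effect.

Each taint has a key and a value that act as its label, where the value may be empty, and an effect that describes what the taint does. The taint effect currently supports three options:

NoSchedule: Kubernetes will not schedule Pods onto a node with this taint

PreferNoSchedule: Kubernetes will try to avoid scheduling Pods onto a node with this taint

NoExecute: Kubernetes will not schedule Pods onto a node with this taint, and will also evict Pods that are already running on the node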

Setting, viewing, and removing taints

```
# Set a taint
kubectl taint nodes node1 key1=value1:NoSchedule
# Look for the Taints field in the node description
kubectl describe node node1
# Remove the taint
kubectl taint nodes node1 key1:NoSchedule-
```

Looking at the master node shows that it carries a taint, Taints: node-role.kubernetes.io/control-plane:NoSchedule.

```
[root@master affi]# kubectl describe node master
Name:               master
Roles:              control-plane
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=master
                    kubernetes.io/os=linux
                    node-role.kubernetes.io/control-plane=
                    node.kubernetes.io/exclude-from-external-load-balancers=
Annotations:        flannel.alpha.coreos.com/backend-data: {"VNI":1,"VtepMAC":"a2:80:27:18:b8:43"}
                    flannel.alpha.coreos.com/backend-type: vxlan
                    flannel.alpha.coreos.com/kube-subnet-manager: true
                    flannel.alpha.coreos.com/public-ip: 192.168.16.200
                    kubeadm.alpha.kubernetes.io/cri-socket: unix:///var/run/cri-dockerd.sock
                    node.alpha.kubernetes.io/ttl: 0
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Sat, 09 Nov 2024 18:57:43 +0800
Taints:             node-role.kubernetes.io/control-plane:NoSchedule
Unschedulable:      false
Lease:
  HolderIdentity:  master
  AcquireTime:     <unset>
  RenewTime:       Thu, 26 Dec 2024 22:08:50 +0800
Conditions:
  Type                 Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
  ----                 ------  -----------------                 ------------------                ------                       -------
  NetworkUnavailable   False   Thu, 26 Dec 2024 19:45:59 +0800   Thu, 26 Dec 2024 19:45:59 +0800   FlannelIsUp                  Flannel is running on this node
  MemoryPressure       False   Thu, 26 Dec 2024 22:08:45 +0800   Sat, 09 Nov 2024 18:57:42 +0800   KubeletHasSufficientMemory   kubelet has sufficient memory available
  DiskPressure         False   Thu, 26 Dec 2024 22:08:45 +0800   Sat, 09 Nov 2024 18:57:42 +0800   KubeletHasNoDiskPressure     kubelet has no disk pressure
  PIDPressure          False   Thu, 26 Dec 2024 22:08:45 +0800   Sat, 09 Nov 2024 18:57:42 +0800   KubeletHasSufficientPID      kubelet has sufficient PID available
  Ready                True    Thu, 26 Dec 2024 22:08:45 +0800   Sat, 09 Nov 2024 19:39:36 +0800   KubeletReady                 kubelet is posting ready status
Addresses:
```

With the current Pods in place, put a NoExecute taint on node1:

```
kubectl taint nodes node1 check=MyCheck:NoExecute
```

affinity-pod-2, which was running on node1, has been evicted and is gone.

nginx-dm-56996c5fdc-z825r, which was also running on node1, has been evicted too. Because that Pod is managed by a Deployment that keeps 2 replicas running, a replacement Pod is started, and it is no longer scheduled onto node1.

```
[root@master affi]# kubectl taint nodes node1 check=MyCheck:NoExecute
node/node1 tainted
[root@master affi]# kubectl get pod -o wide --show-labels
NAME                        READY   STATUS              RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES   LABELS
affinity-pod-1              1/1     Running             0          22m   10.244.2.96   node2   <none>           <none>            app=pod-1
affinity-pod-3              1/1     Running             0          22m   10.244.2.97   node2   <none>           <none>            app=pod-3
nginx-dm-56996c5fdc-ddk2t   0/1     ContainerCreating   0          2s    <none>        node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
nginx-dm-56996c5fdc-n88vt   1/1     Running             0          26m   10.244.2.93   node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
[root@master affi]# kubectl get pod -o wide --show-labels
NAME                        READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES   LABELS
affinity-pod-1              1/1     Running   0          23m   10.244.2.96   node2   <none>           <none>            app=pod-1
affinity-pod-3              1/1     Running   0          23m   10.244.2.97   node2   <none>           <none>            app=pod-3
nginx-dm-56996c5fdc-ddk2t   1/1     Running   0          10s   10.244.2.98   node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
nginx-dm-56996c5fdc-n88vt   1/1     Running   0          26m   10.244.2.93   node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
[root@master affi]#
[root@master affi]# kubectl describe node node1 | grep Taints
Taints:             check=MyCheck:NoExecute
[root@master affi]#
```

Remove the taint, then delete one of the Deployment-managed Pods on node2. In this run the replacement Pod was scheduled onto node1 again.

```text
[root@master affi]# kubectl taint nodes node1 check=MyCheck:NoExecute-
node/node1 untainted
[root@master affi]# kubectl describe node node1 | grep Taints
Taints:             <none>
[root@master affi]#
[root@master affi]# kubectl get pod -o wide --show-labels
NAME                        READY   STATUS    RESTARTS   AGE     IP            NODE    NOMINATED NODE   READINESS GATES   LABELS
affinity-pod-1              1/1     Running   0          32m     10.244.2.96   node2   <none>           <none>            app=pod-1
affinity-pod-3              1/1     Running   0          32m     10.244.2.97   node2   <none>           <none>            app=pod-3
nginx-dm-56996c5fdc-ddk2t   1/1     Running   0          9m25s   10.244.2.98   node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
nginx-dm-56996c5fdc-n88vt   1/1     Running   0          35m     10.244.2.93   node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
[root@master affi]# kubectl delete pod nginx-dm-56996c5fdc-ddk2t
pod "nginx-dm-56996c5fdc-ddk2t" deleted
[root@master affi]# kubectl get pod -o wide --show-labels
NAME                        READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES   LABELS
affinity-pod-1              1/1     Running   0          32m   10.244.2.96    node2   <none>           <none>            app=pod-1
affinity-pod-3              1/1     Running   0          32m   10.244.2.97    node2   <none>           <none>            app=pod-3
nginx-dm-56996c5fdc-n88vt   1/1     Running   0          36m   10.244.2.93    node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
nginx-dm-56996c5fdc-nqthb   1/1     Running   0          2s    10.244.1.130   node1   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
[root@master affi]#
```

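Tolerations

A tainted Node repels Pods according to the effect of the taint (NoSchedule, PreferNoSchedule, or NoExecute). A toleration set on a Pod lets the Pod tolerate a matching taint, so that it can still be scheduled onto, or keep running on, the tainted Node.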

pod.spec.tolerations

```yaml
tolerations:
- key: "key1"
  operator: "Equal"
  value: "value1"
  effect: "NoSchedule"
- key: "key1"
  operator: "Equal"
  value: "value1"
  effect: "NoExecute"
  # tolerationSeconds is only accepted together with effect NoExecute
  tolerationSeconds: 3600
- key: "key2"
  operator: "Exists"
  effect: "NoSchedule"
```

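The key, value, and effect must match the taint set on the Node.

When operator is Exists, the value is ignored and should be left out.

tolerationSeconds specifies how long the Pod may keep running on the Node after a matching NoExecute taint is added, before it is evicted.

When no key is specified, the toleration matches every taint key:

```yaml
tolerations:
- operator: "Exists"
```

When no effect is specified, the toleration matches every effect of the given key:

```yaml
tolerations:
- key: "key"
  operator: "Exists"
```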
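The matching rules listed above can be sketched in Python. This is a simplified model for illustration, not the scheduler's code; the `Taint` and `Toleration` classes and the `tolerates` function are names invented here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: Optional[str] = None     # None: match every taint key
    operator: str = "Equal"       # "Equal" or "Exists"
    value: Optional[str] = None   # ignored when operator is "Exists"
    effect: Optional[str] = None  # None: match every effect


def tolerates(toleration: Toleration, taint: Taint) -> bool:
    """Return True if the toleration matches the taint."""
    # An unset effect matches all effects.
    if toleration.effect is not None and toleration.effect != taint.effect:
        return False
    # An unset key only makes sense with "Exists" and matches all keys.
    if toleration.key is None:
        return toleration.operator == "Exists"
    if toleration.key != taint.key:
        return False
    if toleration.operator == "Exists":
        return True
    # operator "Equal": the values must match as well.
    return (toleration.value or "") == taint.value


taint = Taint(key="check", value="MyCheck", effect="NoExecute")
print(tolerates(Toleration("check", "Equal", "MyCheck", "NoExecute"), taint))
print(tolerates(Toleration(operator="Exists"), taint))
print(tolerates(Toleration("check", "Equal", "Other", "NoExecute"), taint))
```

The first two calls match (an exact toleration and the tolerate-everything form); the third does not, because the value differs.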

Verification

Taint node1:

```text
kubectl taint nodes node1 check=MyCheck:NoExecute
```

Start a Pod with a toleration for that taint (affiPod4.yaml):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-pod-1
  labels:
    app: pod-1
spec:
  containers:
  - name: with-node-affinity
    image: 192.168.16.110:20080/stady/myapp:v1
  tolerations:
  - key: "check"
    operator: "Equal"
    value: "MyCheck"
    effect: "NoExecute"
    tolerationSeconds: 600
```

The Pod is able to start on node1:

```text
[root@master affi]# kubectl taint nodes node1 check=MyCheck:NoExecute
node/node1 tainted
[root@master affi]# kubectl describe node node1 | grep Taints
Taints:             check=MyCheck:NoExecute
[root@master affi]#
[root@master affi]# kubectl apply -f affiPod4.yaml
pod/affinity-pod-1 created
[root@master affi]# kubectl get pod -o wide --show-labels
NAME                        READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES   LABELS
affinity-pod-1              1/1     Running   0          3s    10.244.1.132   node1   <none>           <none>            app=pod-1
nginx-dm-56996c5fdc-kdmjj   1/1     Running   0          39s   10.244.2.102   node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
nginx-dm-56996c5fdc-n88vt   1/1     Running   0          55m   10.244.2.93    node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
[root@master affi]#
```

When there are multiple masters, the following setting helps avoid wasting their resources:

```text
kubectl taint nodes Node-Name node-role.kubernetes.io/master=:PreferNoSchedule
```

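This taints the node Node-Name with the master role key and the PreferNoSchedule effect: the scheduler tries to avoid placing Pods on this node, but Pods can still be scheduled there when necessary. Note that on the v1.28 cluster shown here, the control-plane node carries the key node-role.kubernetes.io/control-plane rather than node-role.kubernetes.io/master.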
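The difference between the hard and the soft effects can be sketched as a filter step followed by an ordering step. This is a deliberately simplified model (the real scheduler combines many scoring plugins), and the function name `rank_nodes` and the input shape are assumptions made for this illustration.

```python
def rank_nodes(node_taint_effects):
    """Order candidate nodes for a Pod that has no tolerations.

    node_taint_effects maps a node name to the list of taint effects
    present on that node (hypothetical input shape).
    """
    # Hard rule: nodes with NoSchedule or NoExecute taints are filtered out.
    candidates = [
        name
        for name, effects in node_taint_effects.items()
        if "NoSchedule" not in effects and "NoExecute" not in effects
    ]
    # Soft rule: nodes with fewer PreferNoSchedule taints are tried first,
    # but tainted nodes stay in the list as a fallback.
    return sorted(
        candidates,
        key=lambda name: node_taint_effects[name].count("PreferNoSchedule"),
    )


print(rank_nodes({
    "master1": ["PreferNoSchedule"],
    "node1": [],
    "node2": ["NoSchedule"],
}))
```

node2 is excluded outright, node1 is preferred, and master1 remains available when node1 cannot take the Pod.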

Pinning Pods to a node

By node name

Pod.spec.nodeName places the Pod directly on the named Node and bypasses the Scheduler's policies altogether. The match is mandatory.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: skip-scheduler
spec:
  replicas: 5
  selector:
    matchLabels:
      app: specify-node1
  template:
    metadata:
      labels:
        app: specify-node1
    spec:
      nodeName: node1
      containers:
      - name: myapp-web
        image: 192.168.16.110:20080/stady/myapp:v1
        ports:
        - containerPort: 80
```

All 5 replicas run on node1:

```text
[root@master affi]# kubectl apply -f affiPod5.yaml
deployment.apps/skip-scheduler created
[root@master affi]# kubectl get pod -o wide --show-labels
NAME                              READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES   LABELS
nginx-dm-56996c5fdc-kdmjj         1/1     Running   0          15m   10.244.2.102   node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
nginx-dm-56996c5fdc-n88vt         1/1     Running   0          71m   10.244.2.93    node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
skip-scheduler-849bbf848f-4z9fj   1/1     Running   0          3s    10.244.1.136   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
skip-scheduler-849bbf848f-frcfz   1/1     Running   0          3s    10.244.1.137   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
skip-scheduler-849bbf848f-jb7d5   1/1     Running   0          3s    10.244.1.134   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
skip-scheduler-849bbf848f-vrj5m   1/1     Running   0          3s    10.244.1.133   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
skip-scheduler-849bbf848f-xtgk8   1/1     Running   0          3s    10.244.1.135   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
[root@master affi]#
```

By label

Pod.spec.nodeSelector selects nodes through the Kubernetes label-selector mechanism. The scheduler matches the labels and then places the Pod on a matching node. This rule is also a hard constraint.
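The two pinning mechanisms in this section can be contrasted with a small Python sketch. It is an illustrative model only: the function `eligible_nodes` and the plain-dictionary inputs are assumptions, and real scheduling also considers taints, resources and other constraints.

```python
def eligible_nodes(pod_spec, node_labels):
    """Return the node names a Pod may land on.

    pod_spec is a dict that may contain "nodeName" or "nodeSelector".
    node_labels maps each node name to its label dict.
    """
    # nodeName: the scheduler is skipped and the Pod is bound directly.
    node_name = pod_spec.get("nodeName")
    if node_name:
        return [node_name]
    # nodeSelector: every key/value pair must be present on the node.
    selector = pod_spec.get("nodeSelector", {})
    return [
        name
        for name, labels in node_labels.items()
        if all(labels.get(key) == value for key, value in selector.items())
    ]


nodes = {
    "node1": {"kubernetes.io/hostname": "node1"},
    "node2": {"kubernetes.io/hostname": "node2", "type": "backEndNode2"},
}
print(eligible_nodes({"nodeName": "node1"}, nodes))
print(eligible_nodes({"nodeSelector": {"type": "backEndNode2"}}, nodes))
```

With `nodeName` the answer is fixed in advance; with `nodeSelector` it depends on which nodes currently carry the label, so a selector that matches no node leaves the Pod unschedulable.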

Label node2:

```text
kubectl label nodes node2 type=backEndNode2
```

Create the manifest affiPod6.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: node-selector-pod
spec:
  replicas: 5
  selector:
    matchLabels:
      app: specify-label
  template:
    metadata:
      labels:
        app: specify-label
    spec:
      nodeSelector:
        type: backEndNode2
      containers:
      - name: myapp-web
        image: 192.168.16.110:20080/stady/myapp:v1
        ports:
        - containerPort: 80
```

All 5 replicas run only on the labeled node:

```text
[root@master affi]# kubectl get node node2 --show-labels
NAME    STATUS   ROLES    AGE   VERSION   LABELS
node2   Ready    <none>   47d   v1.28.2   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=node2,kubernetes.io/os=linux,type=backEndNode2
[root@master affi]#
[root@master affi]# kubectl apply -f affiPod6.yaml
deployment.apps/node-selector-pod created
[root@master affi]# kubectl get pod -o wide --show-labels
NAME                                 READY   STATUS    RESTARTS   AGE     IP             NODE    NOMINATED NODE   READINESS GATES   LABELS
nginx-dm-56996c5fdc-kdmjj            1/1     Running   0          22m     10.244.2.102   node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
nginx-dm-56996c5fdc-n88vt            1/1     Running   0          77m     10.244.2.93    node2   <none>           <none>            name=nginx,pod-template-hash=56996c5fdc
node-selector-pod-75c6ff5d4b-bwhnd   1/1     Running   0          22s     10.244.2.106   node2   <none>           <none>            app=specify-label,pod-template-hash=75c6ff5d4b
node-selector-pod-75c6ff5d4b-ttzrm   1/1     Running   0          22s     10.244.2.103   node2   <none>           <none>            app=specify-label,pod-template-hash=75c6ff5d4b
node-selector-pod-75c6ff5d4b-vrxs4   1/1     Running   0          22s     10.244.2.104   node2   <none>           <none>            app=specify-label,pod-template-hash=75c6ff5d4b
node-selector-pod-75c6ff5d4b-w2gf4   1/1     Running   0          22s     10.244.2.105   node2   <none>           <none>            app=specify-label,pod-template-hash=75c6ff5d4b
node-selector-pod-75c6ff5d4b-xf5fn   1/1     Running   0          22s     10.244.2.107   node2   <none>           <none>            app=specify-label,pod-template-hash=75c6ff5d4b
skip-scheduler-849bbf848f-4z9fj      1/1     Running   0          6m56s   10.244.1.136   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
skip-scheduler-849bbf848f-frcfz      1/1     Running   0          6m56s   10.244.1.137   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
skip-scheduler-849bbf848f-jb7d5      1/1     Running   0          6m56s   10.244.1.134   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
skip-scheduler-849bbf848f-vrj5m      1/1     Running   0          6m56s   10.244.1.133   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
skip-scheduler-849bbf848f-xtgk8      1/1     Running   0          6m56s   10.244.1.135   node1   <none>           <none>            app=specify-node1,pod-template-hash=849bbf848f
[root@master affi]#
```

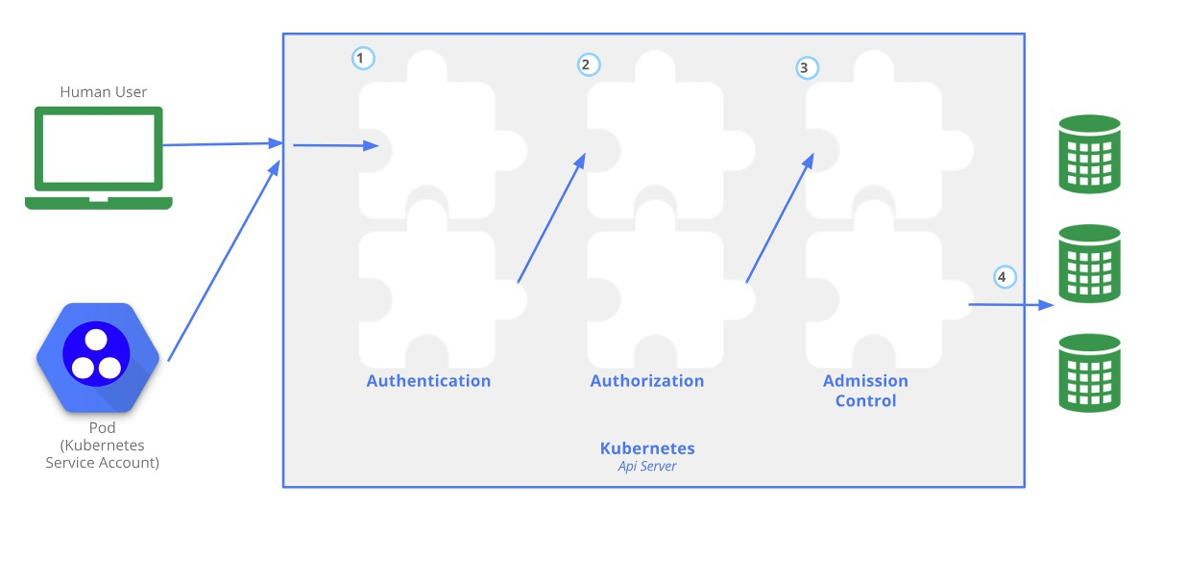

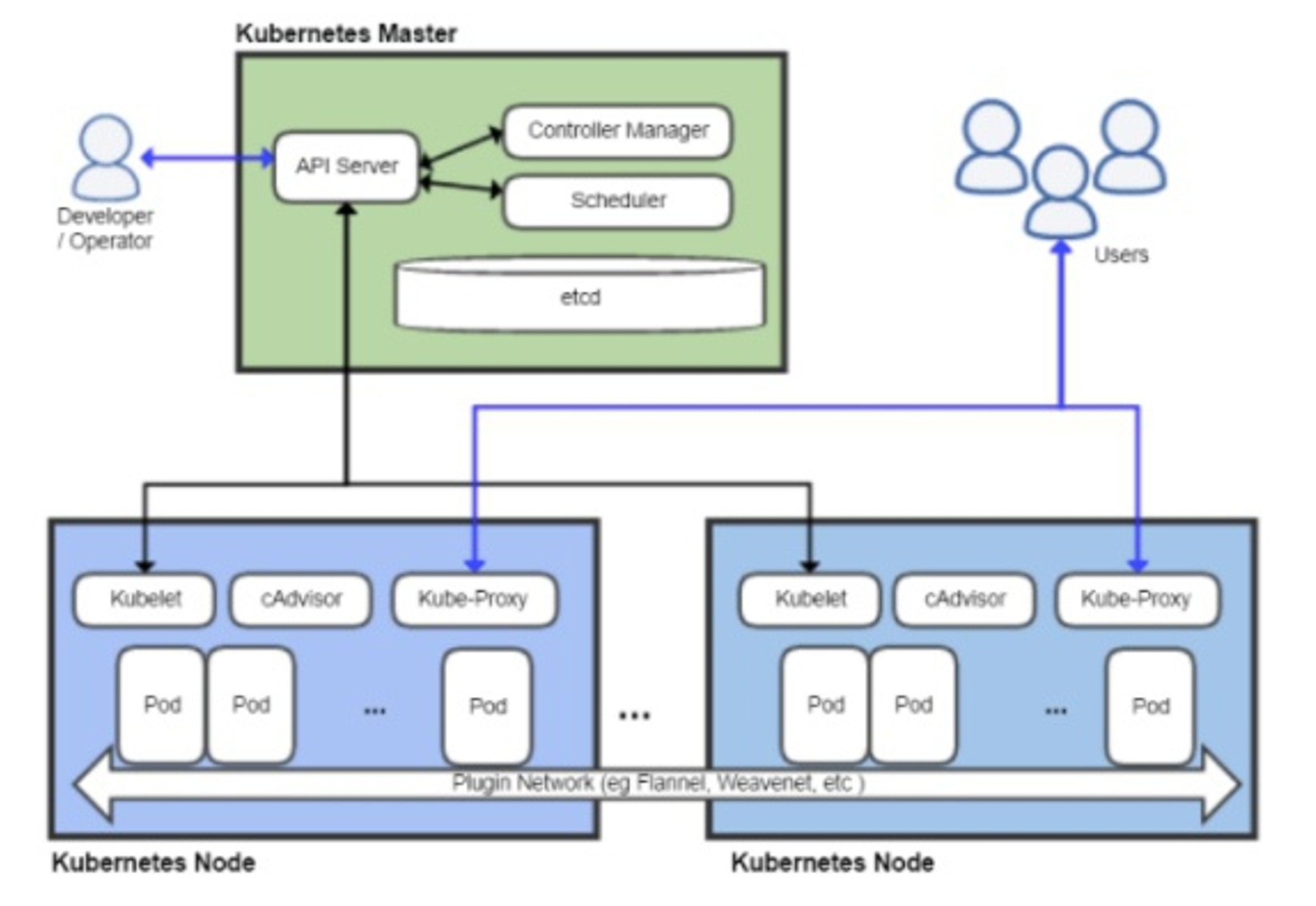

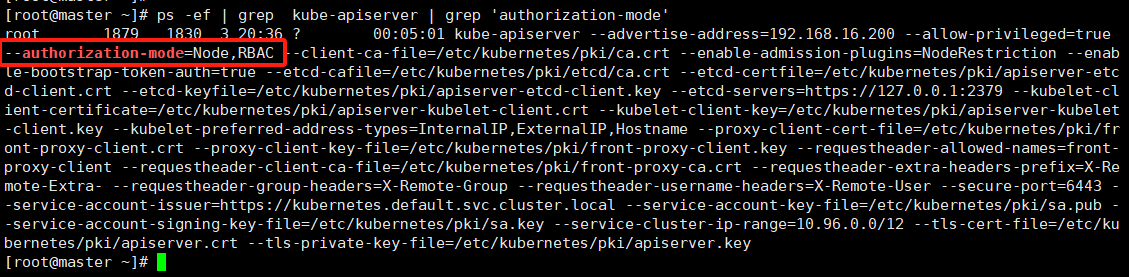

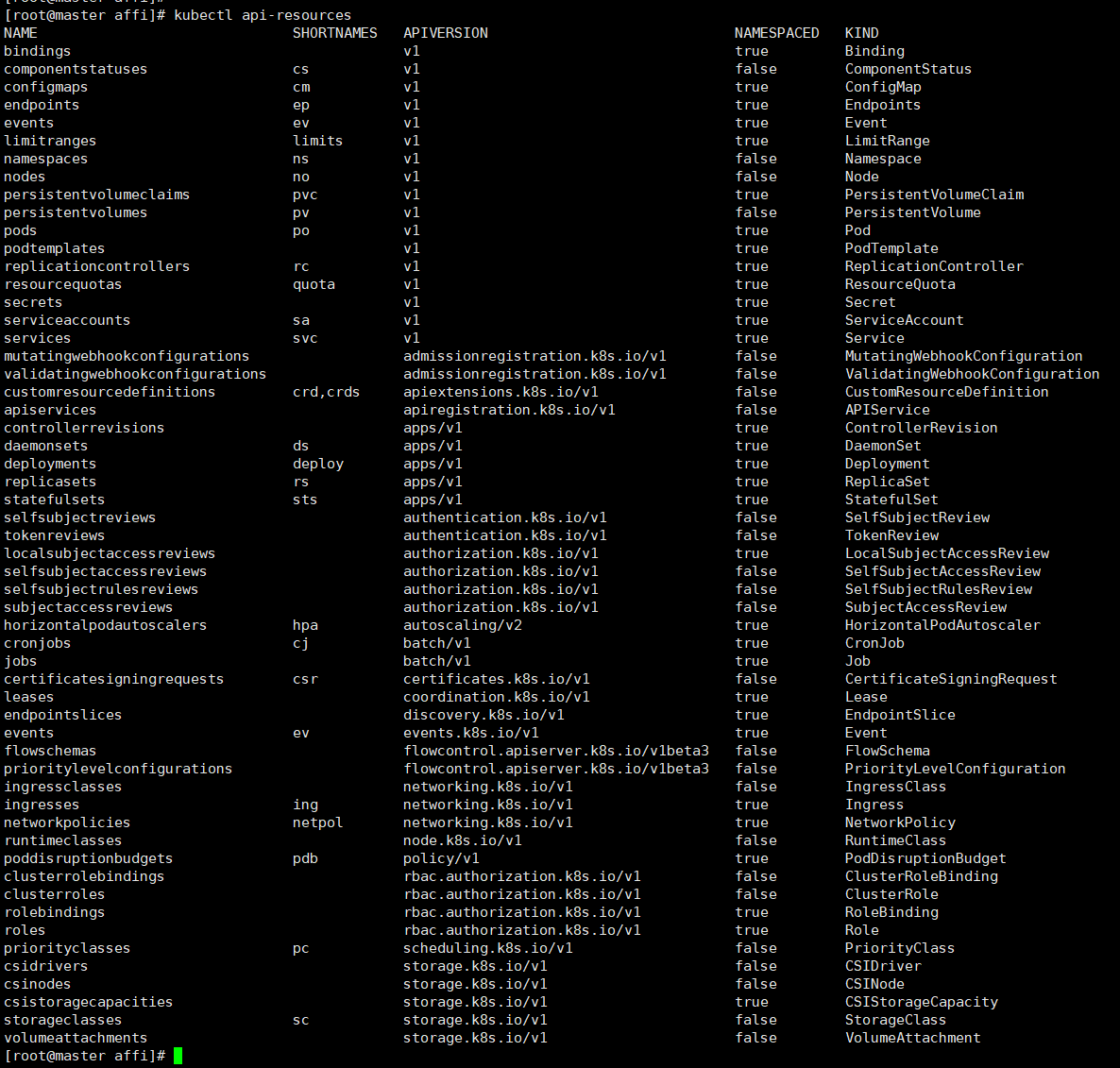

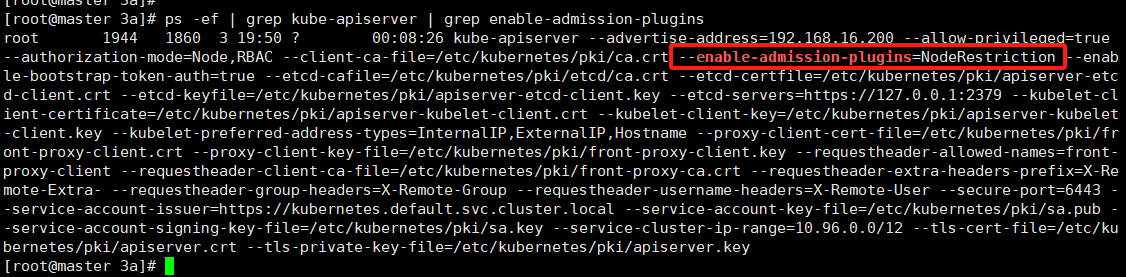

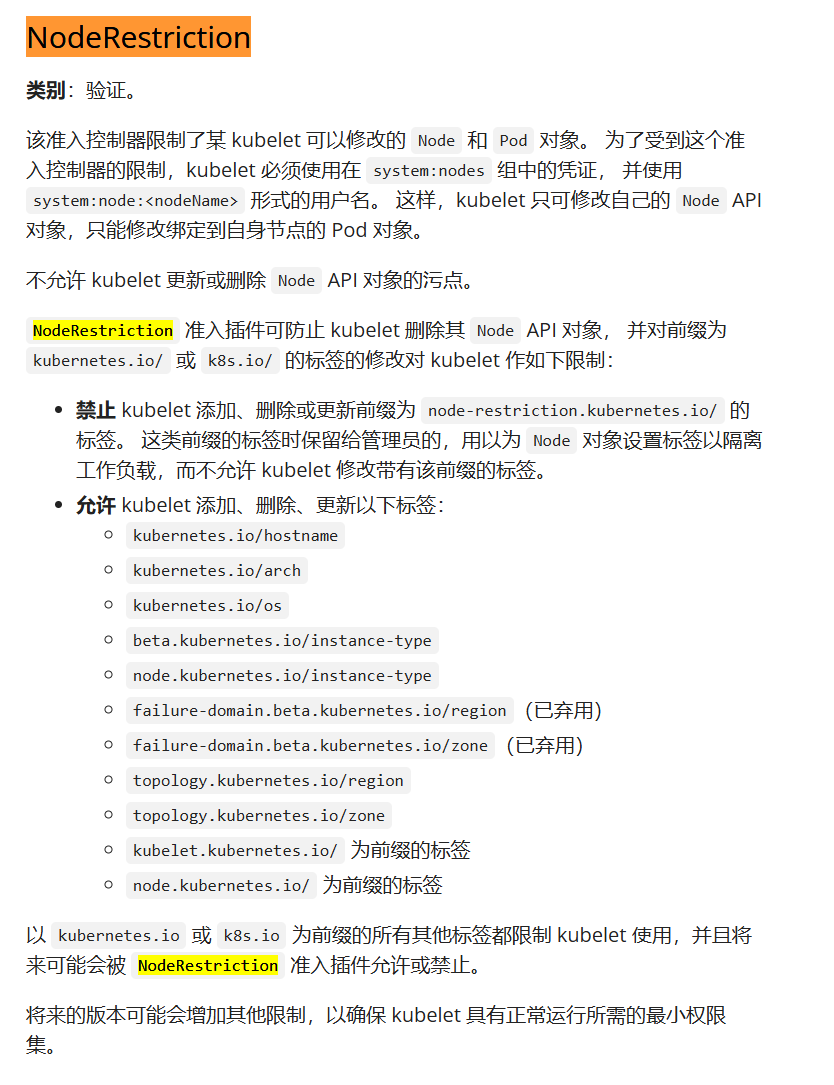

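Cluster security

As a management tool for distributed clusters, Kubernetes treats cluster security as one of its core tasks. The API Server is the intermediary for communication among the cluster's internal components and also the entry point for external control, so the Kubernetes security mechanisms are essentially designed around protecting the API Server. Every request passes through three stages: authentication, authorization, and admission control.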

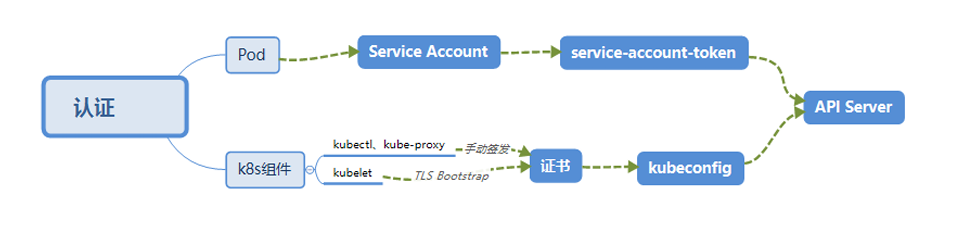
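The three stages named above run one after another, and a request must pass all of them. The following Python sketch shows only that ordering. It is a conceptual model, not the API Server's implementation: `handle_request`, the request dictionary, and the three example callbacks are all hypothetical.

```python
def handle_request(request, authenticators, authorizer, admission_plugins):
    """Run a request through the three stages and report the outcome."""
    # Stage 1, authentication: the first authenticator that recognizes
    # the request decides who the caller is.
    user = None
    for authenticate in authenticators:
        user = authenticate(request)
        if user is not None:
            break
    if user is None:
        return "rejected: unauthenticated"

    # Stage 2, authorization: is this user allowed to do this?
    if not authorizer(user, request):
        return "rejected: forbidden"

    # Stage 3, admission control: every plugin must admit the request.
    for admit in admission_plugins:
        if not admit(user, request):
            return "rejected: denied by admission control"
    return "accepted"


def token_authenticator(request):
    # Hypothetical token table; returns None for unknown tokens.
    return {"example-token": "alice"}.get(request.get("token"))


def read_only_authorizer(user, request):
    return request["verb"] in ("get", "list")


def namespace_required(user, request):
    return bool(request.get("namespace"))


request = {"token": "example-token", "verb": "get", "namespace": "default"}
print(handle_request(request, [token_authenticator],
                     read_only_authorizer, [namespace_required]))
```

A request with an unknown token stops at stage 1, and a `delete` request from the same user stops at stage 2, so later stages never see requests that failed an earlier one.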

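Authentication

HTTP Token authentication: a token identifies a legitimate user.

The token is a long, specially encoded string that is hard to forge and stands for a client. Each token corresponds to a user name, and the mapping is stored in a file that the API Server can read. When a client makes an API call, it puts the token in the HTTP header.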
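As a small illustration of putting the token in the HTTP header, the sketch below builds (but does not send) a request with Python's standard library. The server address reuses the master IP from the node output earlier with port 6443 assumed, and `example-token` is a placeholder; `build_token_request` is a name chosen here.

```python
import urllib.request


def build_token_request(api_server: str, token: str) -> urllib.request.Request:
    """Build an API request that carries the token as a Bearer credential."""
    url = api_server.rstrip("/") + "/api/v1/namespaces"
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}
    )


# Assumed address and placeholder token; nothing is sent over the network.
req = build_token_request("https://192.168.16.200:6443", "example-token")
print(req.full_url)
print(req.get_header("Authorization"))
```

Actually sending the request would additionally need the cluster CA certificate so that the client can verify the API Server.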

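HTTP Basic authentication: the user is authenticated with a user name and password.

The string username:password is encoded with Base64 and sent to the server in the Authorization field of the HTTP request header. The server decodes it to recover the user name and password.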
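The encode and decode steps just described can be shown with Python's standard library. The credentials `admin` and `secret` are made up, and the two helper names are chosen for this sketch.

```python
import base64


def encode_basic_auth(username: str, password: str) -> str:
    """Client side: build the value of the Authorization header."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def decode_basic_auth(header_value: str):
    """Server side: recover (username, password) from the header value."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme != "Basic":
        raise ValueError("not a Basic authorization header")
    decoded = base64.b64decode(encoded).decode("utf-8")
    # Split on the first colon only: the password may contain colons.
    username, _, password = decoded.partition(":")
    return username, password


header = encode_basic_auth("admin", "secret")
print(header)                     # Basic YWRtaW46c2VjcmV0
print(decode_basic_auth(header))  # ('admin', 'secret')
```

Base64 is an encoding, not encryption: anyone who sees the header can decode it, which is why this scheme is only safe over HTTPS.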

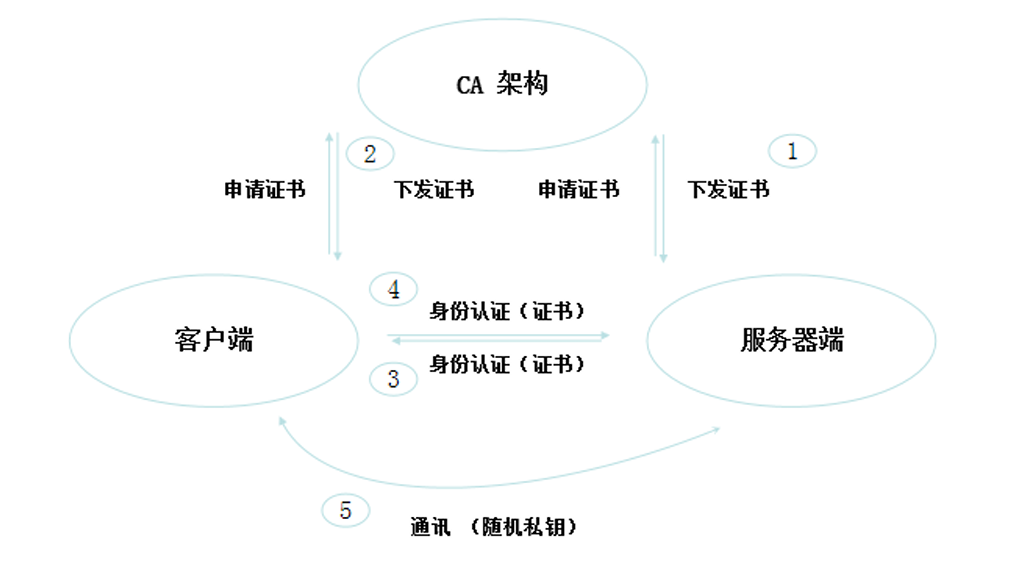

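HTTPS certificate authentication, the strictest method: client identity is verified with a certificate signed by the CA root certificate.

HTTPS certificate authentication

Two kinds of clients need to be authenticated:

Kubernetes components accessing the API Server: kubectl, Controller Manager, Scheduler, kubelet, kube-proxy

Pods managed by Kubernetes accessing the API Server: Pods (the dashboard also runs as a Pod)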

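Security notes

The Controller Manager and Scheduler run on the same machine as the API Server, so in older Kubernetes releases they used the API Server's insecure port directly, --insecure-bind-address=127.0.0.1 (access inside the green area of the figure). Current releases no longer provide the insecure port.

kubectl, kubelet, and kube-proxy all need certificates for mutual HTTPS authentication when they access the API Server (the blue lines in the figure).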

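Certificate issuance

Manual issuance: HTTPS certificates are signed with the root CA of the Kubernetes cluster.

Automatic issuance: the first time a kubelet accesses the API Server, it authenticates with a token. Once that succeeds, the Controller Manager issues a certificate for the kubelet, and all later access uses the certificate.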

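kubeconfig

A kubeconfig file contains cluster parameters (the CA certificate and the API Server address), client parameters (the certificate and private key issued as described above), and context information (cluster name and user name). By being started with different kubeconfig files, Kubernetes components can connect to different clusters.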

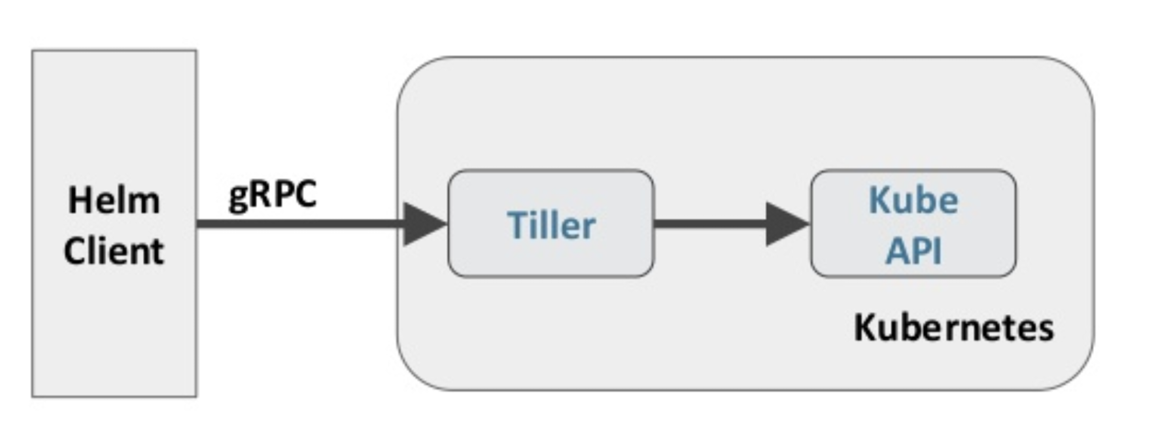
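The three parts of a kubeconfig file (clusters, users, contexts) and the way a context ties a cluster to a user can be sketched with a plain Python dictionary that mirrors the file layout. The names `kubernetes`, `kubernetes-admin`, the server address and the placeholder strings are assumptions for illustration; `resolve_current_context` is a helper invented here.

```python
# A dictionary mirroring the layout of a kubeconfig file.
kubeconfig = {
    "apiVersion": "v1",
    "kind": "Config",
    "clusters": [{
        "name": "kubernetes",
        "cluster": {
            "server": "https://192.168.16.200:6443",  # assumed address
            "certificate-authority-data": "<base64 CA certificate>",
        },
    }],
    "users": [{
        "name": "kubernetes-admin",
        "user": {
            "client-certificate-data": "<base64 client certificate>",
            "client-key-data": "<base64 client key>",
        },
    }],
    "contexts": [{
        "name": "kubernetes-admin@kubernetes",
        "context": {"cluster": "kubernetes", "user": "kubernetes-admin"},
    }],
    "current-context": "kubernetes-admin@kubernetes",
}


def resolve_current_context(config):
    """Return the (cluster, user) entries selected by current-context."""
    name = config["current-context"]
    context = next(c["context"] for c in config["contexts"] if c["name"] == name)
    cluster = next(c["cluster"] for c in config["clusters"]
                   if c["name"] == context["cluster"])
    user = next(u["user"] for u in config["users"]
                if u["name"] == context["user"])
    return cluster, user


cluster, user = resolve_current_context(kubeconfig)
print(cluster["server"])
```

Switching clusters then amounts to changing `current-context`, or pointing a component at a different file altogether.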

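ServiceAccount

Containers in a Pod also access the API Server. Because Pods are created and destroyed dynamically, issuing certificates for them by hand is not practical. Kubernetes uses Service Accounts to solve authentication for Pods that access the API Server (the black lines from the applications in the blue area of the figure to the API Server).

Example: inspect the SA information inside a flannel Pod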

```text
[root@master ~]# kubectl get pod -A
NAMESPACE       NAME                                       READY   STATUS      RESTARTS       AGE
...
ingress-nginx   ingress-nginx-admission-patch-d9ghq        0/1     Completed   0              3d23h
ingress-nginx   ingress-nginx-controller-749f794b9-hd862   1/1     Running     0              24h
kube-flannel    kube-flannel-ds-b55zx                      1/1     Running     1 (22h ago)    23h
kube-flannel    kube-flannel-ds-c22v9                      1/1     Running     18 (22h ago)   48d
kube-flannel    kube-flannel-ds-vv4c8                      1/1     Running     22 (22h ago)   48d
...
[root@master ~]# kubectl exec -it kube-flannel-ds-b55zx -n kube-flannel -- ls -l /run/secrets/kubernetes.io/serviceaccount
Defaulted container "kube-flannel" out of: kube-flannel, install-cni-plugin (init), install-cni (init)
total 0
lrwxrwxrwx 1 root root 13 Dec 27 12:36 ca.crt -> ..data/ca.crt
lrwxrwxrwx 1 root root 16 Dec 27 12:36 namespace -> ..data/namespace
lrwxrwxrwx 1 root root 12 Dec 27 12:36 token -> ..data/token
[root@master ~]#
```
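A process inside the Pod can pick up these three files and use them as credentials. The following Python sketch shows one way to do that; the mount path comes from the listing above, while `load_service_account` and `auth_headers` are helper names made up for this example.

```python
from pathlib import Path

# Mount path taken from the `ls -l` output above.
SA_DIR = "/run/secrets/kubernetes.io/serviceaccount"


def load_service_account(sa_dir: str = SA_DIR) -> dict:
    """Read the token, namespace and CA file location from the SA mount."""
    base = Path(sa_dir)
    return {
        "token": (base / "token").read_text().strip(),
        "namespace": (base / "namespace").read_text().strip(),
        "ca_file": str(base / "ca.crt"),
    }


def auth_headers(credentials: dict) -> dict:
    """The token is sent to the API Server as a Bearer credential."""
    return {"Authorization": "Bearer " + credentials["token"]}


# Only meaningful inside a Pod, where the directory actually exists.
if Path(SA_DIR).is_dir():
    creds = load_service_account()
    print(creds["namespace"], creds["ca_file"])
```

The `ca_file` path is what an HTTPS client would be given to verify the API Server's certificate.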

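The relationship between Secret and SA

Kubernetes provides a resource object called Secret. Two kinds are relevant here: the service-account token used by a ServiceAccount, and Opaque, which stores user-defined confidential data. A ServiceAccount uses three pieces of data: token, ca.crt, and namespace.

token is a JWT signed with the API Server's private key. The server uses it to authenticate the client when the client accesses the API Server.

ca.crt is the root certificate. The client uses it to verify the certificate presented by the API Server.

namespace identifies the namespace that this service-account token is scoped to.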

```text
kubectl get secret --all-namespaces
kubectl describe secret default-token-5gm9r --namespace=kube-system
```

```text
[root@master ~]# kubectl describe secret ingress-nginx-admission --namespace=ingress-nginx
Name:         ingress-nginx-admission
Namespace:    ingress-nginx
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
key:   227 bytes
ca:    570 bytes
cert:  660 bytes
[root@master ~]# kubectl get secret ingress-nginx-admission --namespace=ingress-nginx -o jsonpath="{.data['key']}" | base64 --decode
-----BEGIN EC PRIVATE KEY-----
MHcCAQEEIIRmloegSsnHKnVG8EviOuLSJCFU+kW/ksKSk4XrpTLUoAoGCCqGSM49
AwEHoUQDQgAE9HtEfcJG1poY3ugmrtb2SQseYZ3eU98JwTK3w4VV8VJCBa86p+NQ
Nxf/FcXZiCx0VlMU+x1fvx6WNmq8ZNx95g==
-----END EC PRIVATE KEY-----
[root@master ~]# kubectl get secret ingress-nginx-admission --namespace=ingress-nginx -o jsonpath="{.data['ca']}" | base64 --decode
-----BEGIN CERTIFICATE-----
MIIBdzCCARygAwIBAgIRAN5zGMUtB1SQ7U8nNI+T+i4wCgYIKoZIzj0EAwIwDzEN
MAsGA1UEChMEbmlsMTAgFw0yNDEyMjMxNDM0MzVaGA8yMTI0MTEyOTE0MzQzNVow
DzENMAsGA1UEChMEbmlsMTBZMBMGByqGSM49AgEGCCqGSM49AwEHA0IABIdqaStn
IxpYfxl08/Rtw/UBkfHJtod8yCGNAV2yTyLAUryGNPGtnqqG1sSw0lrtvNUl4gA+
ghDw7fP9UtqSPiCjVzBVMA4GA1UdDwEB/wQEAwICBDATBgNVHSUEDDAKBggrBgEF
BQcDATAPBgNVHRMBAf8EBTADAQH/MB0GA1UdDgQWBBT/KmBFIkWJGImJfHW9gmlL
DBjSYTAKBggqhkjOPQQDAgNJADBGAiEA/ceuvC+sfaKaJyo03VJ3MA1Esqu0UOs0
Bn3U/Axf6WMCIQDaqfgWhPtP4uJzJUXlbdtxp/rx56Ap9RBSfVuc+AtuRg==
-----END CERTIFICATE-----
[root@master ~]# kubectl get secret ingress-nginx-admission --namespace=ingress-nginx -o jsonpath="{.data['cert']}" | base64 --decode
-----BEGIN CERTIFICATE-----
MIIBujCCAWCgAwIBAgIQXqMj0ZvHF6ryLHVDvjVbqDAKBggqhkjOPQQDAjAPMQ0w
CwYDVQQKEwRuaWwxMCAXDTI0MTIyMzE0MzQzNVoYDzIxMjQxMTI5MTQzNDM1WjAP
MQ0wCwYDVQQKEwRuaWwyMFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAE9HtEfcJG
1poY3ugmrtb2SQseYZ3eU98JwTK3w4VV8VJCBa86p+NQNxf/FcXZiCx0VlMU+x1f
vx6WNmq8ZNx95qOBmzCBmDAOBgNVHQ8BAf8EBAMCBaAwEwYDVR0lBAwwCgYIKwYB
BQUHAwEwDAYDVR0TAQH/BAIwADBjBgNVHREEXDBagiJpbmdyZXNzLW5naW54LWNv
bnRyb2xsZXItYWRtaXNzaW9ugjRpbmdyZXNzLW5naW54LWNvbnRyb2xsZXItYWRt
aXNzaW9uLmluZ3Jlc3Mtbmdpbnguc3ZjMAoGCCqGSM49BAMCA0gAMEUCIEaqMkzx
Y3/X60/GB/D26qZMEEZZOfQifbS5kzYz+hs8AiEAgyItgq5Is5sCtZykp7Akjba6
xkQjRMcMSY67OhZU/Lo=
-----END CERTIFICATE-----
[root@master ~]#
```

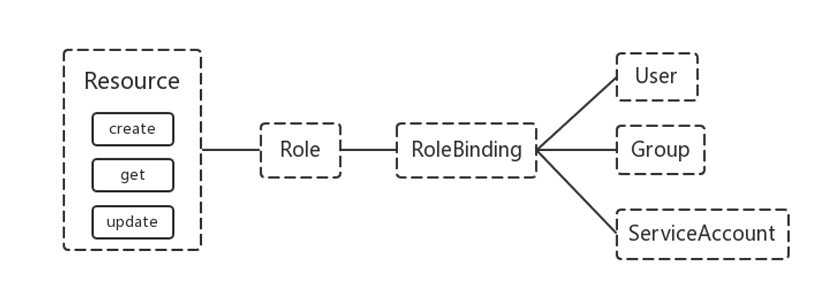

By default, every namespace has a ServiceAccount named default. If a Pod does not specify a ServiceAccount when it is created, it uses the default ServiceAccount of the namespace it belongs to.