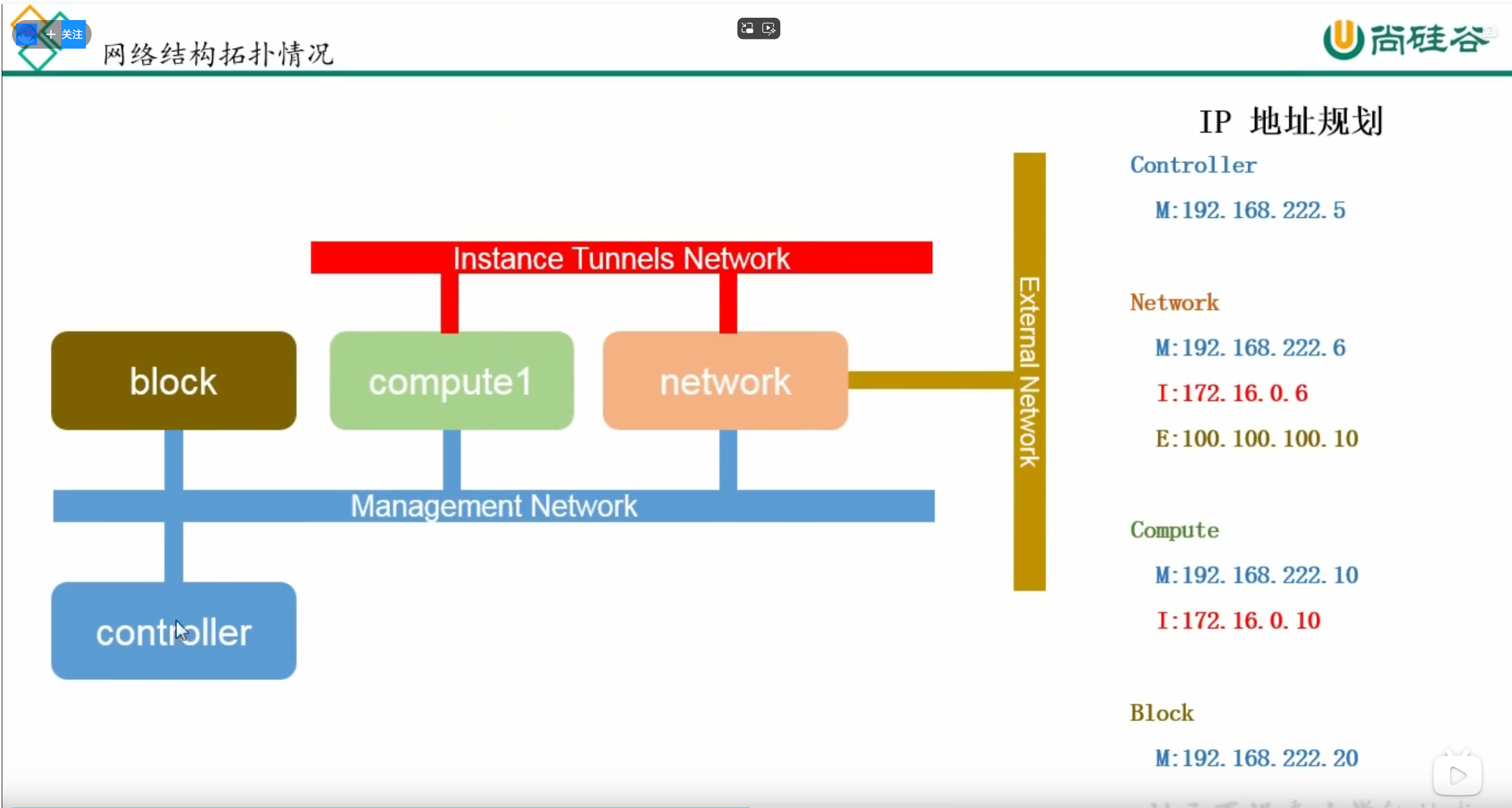

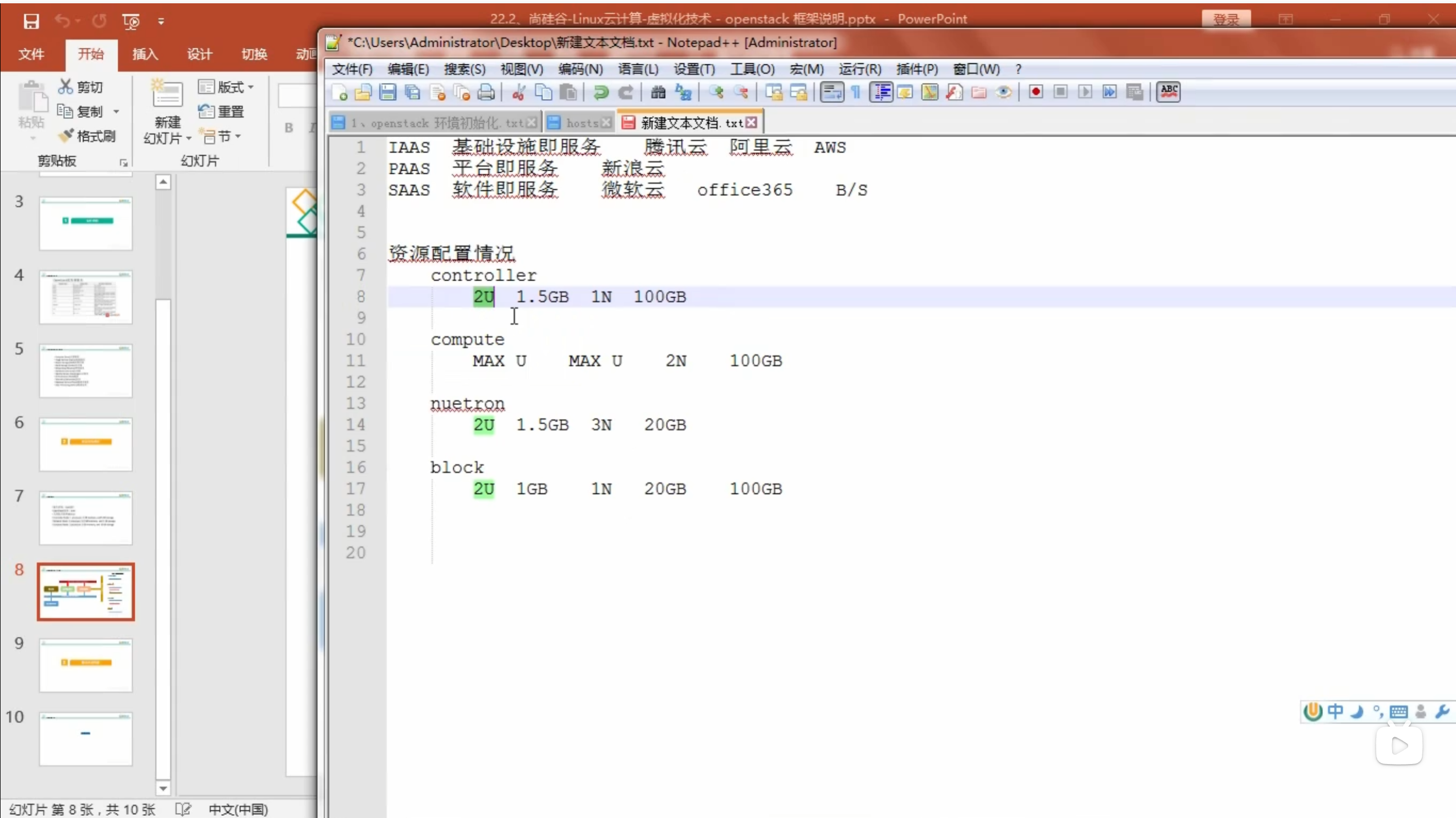

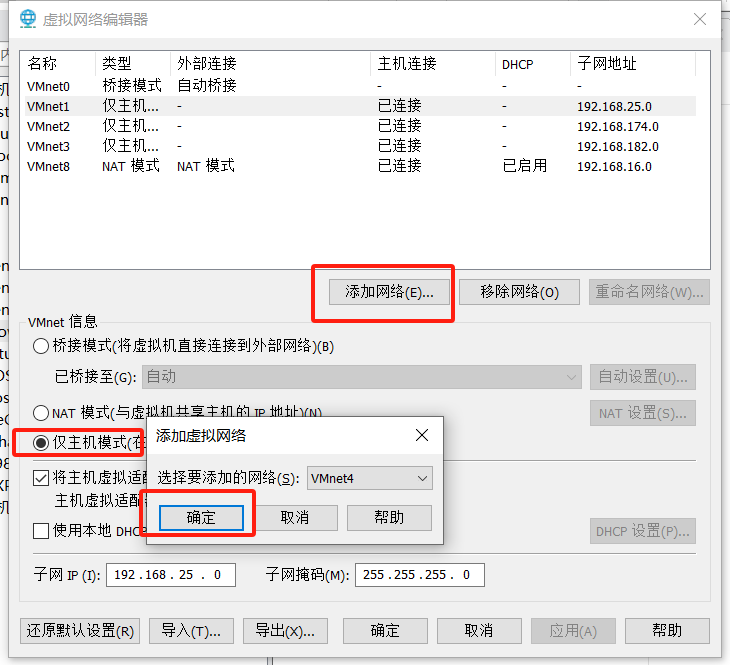

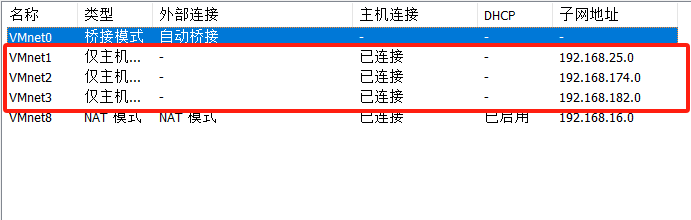

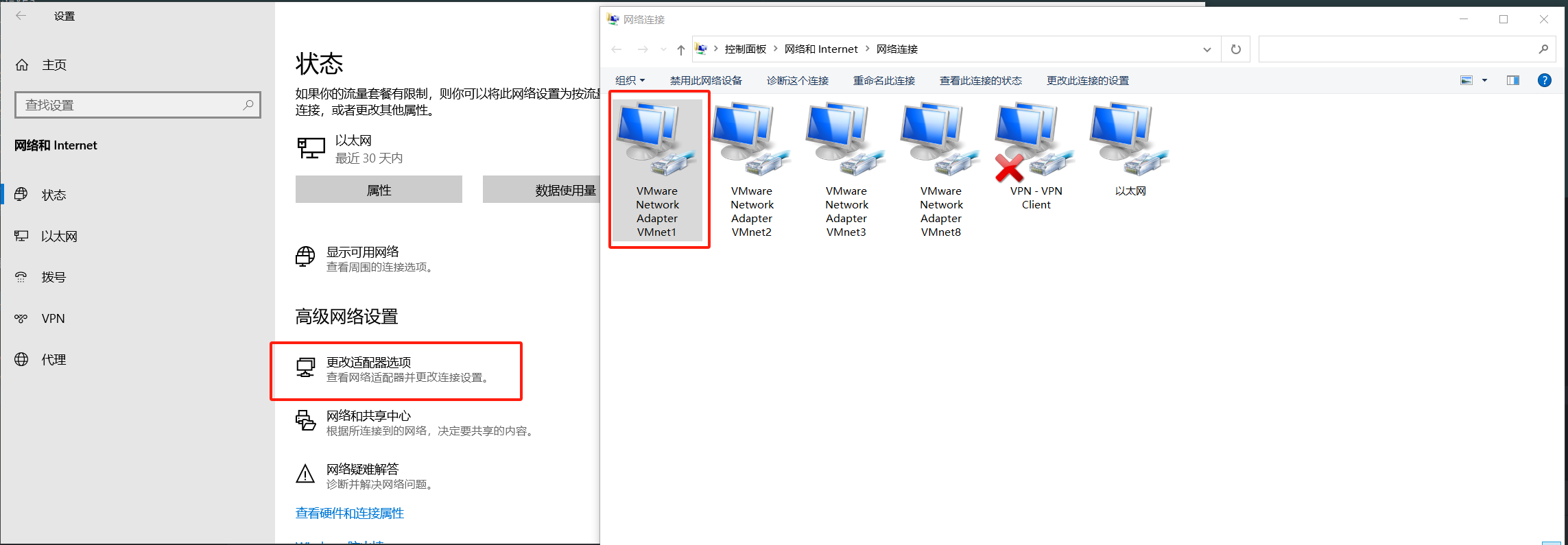

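Environment architecture

Virtual machine configuration plan

Virtual machine network configuration

Configure three host-only networks: one for the management network, one for the application network, and one for the external network. All three use host-only mode.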

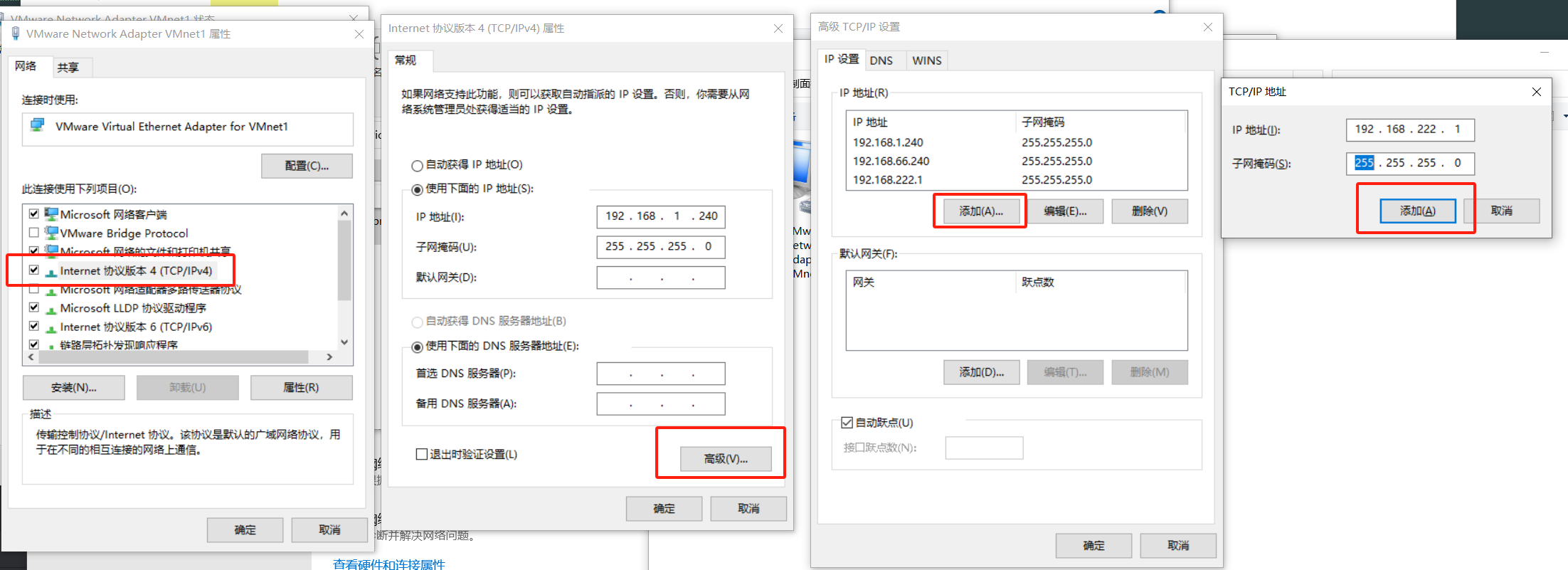

Windows network adapter configuration

On VMware Network Adapter VMnet1, set the management-segment IP to 192.168.222.1.

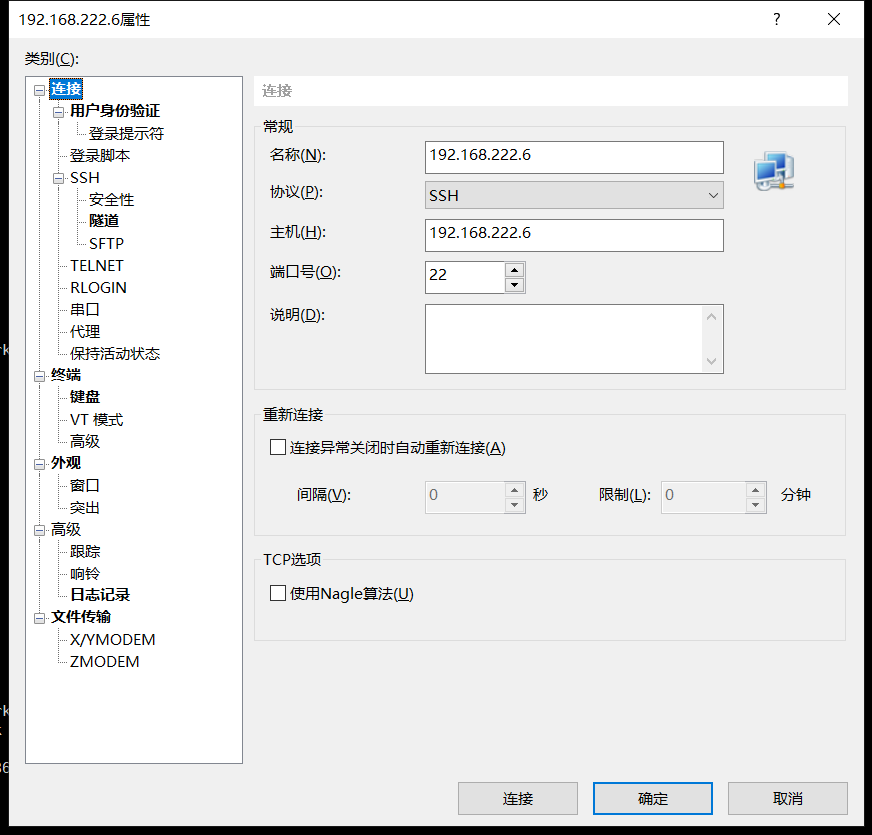

Once this is configured, the neutron host can be reached through its management IP, 192.168.222.6.

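Connect to the host (example using the management segment)

OpenStack environment setup

The image used for testing is CentOS-7.0-1406-x86_64.

After the operating system is installed, apply the following common configuration to every host. Later sections may not repeat these steps; where they are not mentioned, assume they have already been done.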

Disable the firewall

    systemctl stop firewalld
    systemctl disable firewalld

Disable SELinux

    [root@localhost ~]# setenforce 0
    [root@localhost ~]# cat /etc/selinux/config
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of these two values:
    #     targeted - Targeted processes are protected,
    #     minimum - Modification of targeted policy. Only selected processes are protected.
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted
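The edit to /etc/selinux/config can also be scripted. The following is a minimal sketch, assuming GNU sed as shipped with CentOS. The helper name `set_selinux_mode` is made up for illustration, and the demonstration runs against a scratch file rather than the real config.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the SELINUX= line of an SELinux config file.
# Usage: set_selinux_mode <mode> [config-file]
set_selinux_mode() {
    # The pattern is anchored on "SELINUX=", so the SELINUXTYPE= line is left alone.
    # Falls back to the real config path when no file is given.
    sed -i "s/^SELINUX=.*/SELINUX=$1/" "${2:-/etc/selinux/config}"
}

# Demonstration on a scratch file, so the system config is not touched.
demo_cfg=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo_cfg"
set_selinux_mode disabled "$demo_cfg"
cat "$demo_cfg"
rm -f "$demo_cfg"
```

On a real host the call would be `set_selinux_mode disabled`. The setting in the file only takes effect after a reboot, which is why the walkthrough also runs `setenforce 0` for the current session.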

Disable the NetworkManager daemon

    systemctl stop NetworkManager
    systemctl disable NetworkManager

Configure the yum repositories (Aliyun mirror)

Change CentOS-Base.repo to point at the Aliyun mirror:

    # CentOS-Base.repo
    #
    # update status of each mirror to pick mirrors that are updated to and
    # geographically close to the client. You should use this for CentOS updates
    # unless you are manually picking other mirrors.
    #
    # remarked out baseurl= line instead.
    #
    [base]
    name=CentOS-$releasever - Base - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # released updates
    [updates]
    name=CentOS-$releasever - Updates - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # additional packages that may be useful
    [extras]
    name=CentOS-$releasever - Extras - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # additional packages that extend functionality of existing packages
    [centosplus]
    name=CentOS-$releasever - Plus - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # contrib - packages by Centos Users
    [contrib]
    name=CentOS-$releasever - Contrib - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

Install the EPEL yum repository

    yum install -y wget
    wget -O /etc/yum.repos.d/epel-7.repo http://mirrors.aliyun.com/repo/epel-7.repo

Update the yum repositories

Keystone service setup

Set the host name

    hostnamectl set-hostname controller.nice.com

Configure the network interface with 192.168.222.5

    [root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-eno16777736
    HWADDR=00:0C:29:67:B3:7D
    TYPE=Ethernet
    BOOTPROTO=static
    IPADDR=192.168.222.5
    NETMASK=255.255.255.0
    DEFROUTE=yes
    PEERDNS=yes
    PEERROUTES=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_PEERDNS=yes
    IPV6_PEERROUTES=yes
    IPV6_FAILURE_FATAL=no
    NAME=eno16777736
    UUID=a138dbb8-d58d-4031-b9a7-05ba0ace214d
    ONBOOT=yes
    [root@controller ~]#

Install MariaDB

    yum install -y mariadb mariadb-server MySQL-python

Change the database listen address

Install an editor and the network tools first:

    yum install -y vim net-tools

Then add the following to /etc/my.cnf:

    [mysqld]
    bind-address = 192.168.222.5
    default-storage-engine = innodb
    innodb_file_per_table
    collation-server = utf8_general_ci
    init-connect = 'SET NAMES utf8'
    character-set-server = utf8

Initialize the database

The MariaDB service must already be running for this step.

    [root@localhost my.cnf.d]# mysql_secure_installation

    NOTE: RUNNING ALL PARTS OF THIS SCRIPT IS RECOMMENDED FOR ALL MariaDB
          SERVERS IN PRODUCTION USE!  PLEASE READ EACH STEP CAREFULLY!

    In order to log into MariaDB to secure it, we'll need the current
    password for the root user.  If you've just installed MariaDB, and
    you haven't set the root password yet, the password will be blank,
    so you should just press enter here.

    Enter current password for root (enter for none):
    OK, successfully used password, moving on...

    Setting the root password ensures that nobody can log into the MariaDB
    root user without the proper authorisation.

    Set root password? [Y/n] y
    New password:
    Re-enter new password:
    Password updated successfully!
    Reloading privilege tables..
     ... Success!

    By default, a MariaDB installation has an anonymous user, allowing anyone
    to log into MariaDB without having to have a user account created for
    them.  This is intended only for testing, and to make the installation
    go a bit smoother.  You should remove them before moving into a
    production environment.

    Remove anonymous users? [Y/n] y
     ... Success!

    Normally, root should only be allowed to connect from 'localhost'.  This
    ensures that someone cannot guess at the root password from the network.

    Disallow root login remotely? [Y/n] y
     ... Success!

    By default, MariaDB comes with a database named 'test' that anyone can
    access.  This is also intended only for testing, and should be removed
    before moving into a production environment.

    Remove test database and access to it? [Y/n] y
     - Dropping test database...
     ... Success!
     - Removing privileges on test database...
     ... Success!

    Reloading the privilege tables will ensure that all changes made so far
    will take effect immediately.

    Reload privilege tables now? [Y/n] y
     ... Success!

    Cleaning up...

    All done!  If you've completed all of the above steps, your MariaDB
    installation should now be secure.

    Thanks for using MariaDB!
    [root@localhost my.cnf.d]#

Install the message queue (Messaging Server service)

Install RabbitMQ

    yum -y install rabbitmq-server

    systemctl enable rabbitmq-server
    systemctl start rabbitmq-server

The default user name and password are both guest. To change the password:

    rabbitmqctl change_password guest new_password

Time synchronization server

Install

Edit the configuration file

    [root@localhost my.cnf.d]# cat /etc/ntp.conf
    # For more information about this file, see the man pages
    # ntp.conf(5), ntp_acc(5), ntp_auth(5), ntp_clock(5), ntp_misc(5), ntp_mon(5).

    driftfile /var/lib/ntp/drift

    # Permit time synchronization with our time source, but do not
    # permit the source to query or modify the service on this system.
    restrict default nomodify notrap nopeer noquery

    # Permit all access over the loopback interface. This could
    # be tightened as well, but to do so would effect some of
    # the administrative functions.
    restrict 127.0.0.1
    restrict ::1

    # Hosts on local network are less restricted.
    restrict 192.168.222.0 mask 255.255.255.0 nomodify notrap

    # Use public servers from the pool.ntp.org project.
    # Please consider joining the pool (http://www.pool.ntp.org/join.html).
    #server 0.centos.pool.ntp.org iburst
    #server 1.centos.pool.ntp.org iburst
    #server 2.centos.pool.ntp.org iburst
    #server 3.centos.pool.ntp.org iburst
    server 127.127.1.0
    fudge 127.127.1.0 stratum 10

    #broadcast 192.168.1.255 autokey        # broadcast server
    #broadcastclient                        # broadcast client
    #broadcast 224.0.1.1 autokey            # multicast server
    #multicastclient 224.0.1.1              # multicast client
    #manycastserver 239.255.254.254         # manycast server
    #manycastclient 239.255.254.254 autokey # manycast client

    # Enable public key cryptography.
    #crypto

    includefile /etc/ntp/crypto/pw

    # Key file containing the keys and key identifiers used when operating
    # with symmetric key cryptography.
    keys /etc/ntp/keys

    # Specify the key identifiers which are trusted.
    #trustedkey 4 8 42

    # Specify the key identifier to use with the ntpdc utility.
    #requestkey 8

    # Specify the key identifier to use with the ntpq utility.
    #controlkey 8

    # Enable writing of statistics records.
    #statistics clockstats cryptostats loopstats peerstats

    # Disable the monitoring facility to prevent amplification attacks using ntpdc
    # monlist command when default restrict does not include the noquery flag. See
    # CVE-2013-5211 for more details.
    # Note: Monitoring will not be disabled with the limited restriction flag.
    disable monitor

Start the time synchronization service

    systemctl start ntpd
    systemctl enable ntpd

Name resolution

Add or update the following entries in /etc/hosts:

    192.168.222.5 controller.nice.com
    192.168.222.6 network.nice.com
    192.168.222.10 computer1.nice.com
    192.168.222.20 block1.nice.com
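Because every node needs the same entries, it can help to add them idempotently. The sketch below is one way to do it. The helper name `add_host_entry` is hypothetical, and the demonstration writes to a scratch file instead of /etc/hosts.

```shell
#!/bin/sh
# Hypothetical helper: append "<ip> <name>" to a hosts file unless a line
# already mentions <name> as a whole word.
# Usage: add_host_entry <ip> <name> [hosts-file]
add_host_entry() {
    hosts_file=${3:-/etc/hosts}
    # -F: treat the name as a fixed string, -w: whole-word match, -q: quiet
    if ! grep -qwF -- "$2" "$hosts_file"; then
        printf '%s %s\n' "$1" "$2" >> "$hosts_file"
    fi
}

# Demonstration on a scratch file; running the loop twice adds nothing new.
demo_hosts=$(mktemp)
for pass in 1 2; do
    add_host_entry 192.168.222.5  controller.nice.com "$demo_hosts"
    add_host_entry 192.168.222.6  network.nice.com    "$demo_hosts"
    add_host_entry 192.168.222.10 computer1.nice.com  "$demo_hosts"
    add_host_entry 192.168.222.20 block1.nice.com     "$demo_hosts"
done
cat "$demo_hosts"
rm -f "$demo_hosts"
```

The check is by name only, so an existing entry with a different IP is left as it is. Changing an address still needs a manual edit.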

Prerequisites

Create the keystone database

    mysql -uroot -p

    CREATE DATABASE keystone ;
    grant all privileges on keystone.* to `keystone`@`localhost` identified by 'keystone' ;
    grant all privileges on keystone.* to `keystone`@`%` identified by 'keystone' ;
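The same three statements recur for the glance and nova databases later in this walkthrough, with only the name changing. A small generator can print them. This is a sketch, the function name `service_db_sql` is invented here, and like the guide it uses the service name as database name and user name.

```shell
#!/bin/sh
# Hypothetical helper: print the SQL that creates a service database and
# grants its user access from localhost and from any host.
# Usage: service_db_sql <service-name> <password>
service_db_sql() {
    cat <<EOF
CREATE DATABASE $1;
GRANT ALL PRIVILEGES ON $1.* TO '$1'@'localhost' IDENTIFIED BY '$2';
GRANT ALL PRIVILEGES ON $1.* TO '$1'@'%' IDENTIFIED BY '$2';
EOF
}

# Print the statements for the keystone database.
service_db_sql keystone keystone
```

The output can be piped straight into the client, for example `service_db_sql glance glance | mysql -uroot -p`. Using the service name as its own password, as this lab does, is only reasonable in a throwaway test environment.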

Generate a random value to use as the initial administration token, and write it down for later use.

    [root@controller ~]# openssl rand -hex 10
    cb8ae2320d62e8a0e1c4
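Instead of copying the token by hand, it can be captured in a shell variable and reused in later steps. The following is a sketch only. `gen_token` and `ADMIN_TOKEN` are names chosen for illustration, and the /dev/urandom fallback is an addition that is not part of the original procedure.

```shell
#!/bin/sh
# Hypothetical helper: print 10 random bytes as 20 lowercase hex characters.
gen_token() {
    if command -v openssl >/dev/null 2>&1; then
        openssl rand -hex 10
    else
        # Fallback (assumption, not in the original guide): read 10 bytes
        # from /dev/urandom and strip the spaces and newline that od emits.
        od -An -N10 -tx1 /dev/urandom | tr -d ' \n'
        echo
    fi
}

# Keep the token in a variable so later commands can refer to it.
ADMIN_TOKEN=$(gen_token)
echo "admin_token=$ADMIN_TOKEN"
```

The printed line has the same shape as the `admin_token` entry that goes into /etc/keystone/keystone.conf, and the variable can be reused for `export OS_SERVICE_TOKEN="$ADMIN_TOKEN"`.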

Install the keystone service package and client tools

Configure the yum repository

    [root@network yum.repos.d]# cat CentOS-OpenStack-juno.repo
    [centotack-juno]
    name=openstack-juno
    baseurl=https://repos.fedorapeople.org/openstack/EOL/openstack-juno/epel-7/
    enabled=1
    gpgcheck=0

Install

    yum install openstack-keystone python-keystoneclient

Edit the keystone configuration file /etc/keystone/keystone.conf

    [DEFAULT]
    ...
    admin_token=cb8ae2320d62e8a0e1c4
    ...
    [database]
    ...
    connection=mysql://keystone:keystone@localhost/keystone
    ...
    [token]
    ...
    provider=keystone.token.providers.uuid.Provider
    driver=keystone.token.persistence.backends.sql.Token
    ...

admin_token is the random token generated in the previous step.

connection is the database connection string, with the following layout: {database type}://{database user}:{password}@{host}/{database name}
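To make the layout of the connection value concrete, the sketch below assembles it from its parts. Note that user and password are separated by a colon, as in the configured value `mysql://keystone:keystone@localhost/keystone`. The function name `build_db_url` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: assemble a database connection URL.
# Usage: build_db_url <db-type> <user> <password> <host> <database>
build_db_url() {
    printf '%s://%s:%s@%s/%s\n' "$1" "$2" "$3" "$4" "$5"
}

# Reproduces the value used in keystone.conf above.
build_db_url mysql keystone keystone localhost keystone
# prints: mysql://keystone:keystone@localhost/keystone
```

The glance and nova sections use the same layout with `controller.nice.com` as the host, for example `build_db_url mysql glance glance controller.nice.com glance`.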

Create the generic certificates and keys, owned by user keystone and group keystone

    keystone-manage pki_setup --keystone-user keystone --keystone-group keystone

Adjust the directory ownership and permissions

    chown -R keystone:keystone /var/log/keystone
    chown -R keystone:keystone /etc/keystone/ssl
    chmod -R o-rwx /etc/keystone/ssl

Initialize the keystone database

    su -s /bin/sh -c "keystone-manage db_sync" keystone

When it finishes, log in to the database and check that the initialization succeeded.

    [root@controller ~]# mysql -uroot -p
    Enter password:
    Welcome to the MariaDB monitor.  Commands end with ; or \g.
    Your MariaDB connection id is 13
    Server version: 5.5.68-MariaDB MariaDB Server

    Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    MariaDB [(none)]> show databases ;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | keystone           |
    | mysql              |
    | performance_schema |
    +--------------------+
    4 rows in set (0.00 sec)

    MariaDB [(none)]> use keystone ;
    Reading table information for completion of table and column names
    You can turn off this feature to get a quicker startup with -A

    Database changed
    MariaDB [keystone]> show tables ;
    +-----------------------+
    | Tables_in_keystone    |
    +-----------------------+
    | assignment            |
    | credential            |
    | domain                |
    | endpoint              |
    | group                 |
    | id_mapping            |
    | migrate_version       |
    | policy                |
    | project               |
    | region                |
    | revocation_event      |
    | role                  |
    | service               |
    | token                 |
    | trust                 |
    | trust_role            |
    | user                  |
    | user_group_membership |
    +-----------------------+
    18 rows in set (0.00 sec)

    MariaDB [keystone]>

Start the keystone service

    systemctl enable openstack-keystone.service
    systemctl start openstack-keystone.service

    # By default the server keeps expired tokens indefinitely, which can seriously
    # hurt performance when resources are limited. Schedule a cron job that
    # deletes expired tokens every hour.
    (crontab -l -u keystone 2>&1 | grep -q token_flush) || echo '@hourly /usr/bin/keystone-manage token_flush > /var/log/keystone/keystone-tokenflush.log 2>&1' >> /var/spool/cron/keystone
    # Check that the job was installed
    crontab -l -u keystone

Create tenants, users, and roles

Prerequisites

Set the administration token (the one generated earlier)

    export OS_SERVICE_TOKEN=cb8ae2320d62e8a0e1c4

Set the endpoint

    export OS_SERVICE_ENDPOINT=http://controller.nice.com:35357/v2.0

Create the tenant, user, and role used for administration

Create the admin tenant

    keystone tenant-create --name admin --description "Admin Tenant"

    [root@controller ~]# keystone tenant-create --name admin --description "Admin Tenant"
    +-------------+----------------------------------+
    | Property    | Value                            |
    +-------------+----------------------------------+
    | description | Admin Tenant                     |
    | enabled     | True                             |
    | id          | df3d2c39592340bea97aa881613c61d1 |
    | name        | admin                            |
    +-------------+----------------------------------+

Create the admin user

    keystone user-create --name admin --pass admin --email admin@123.cn

    [root@controller ~]# keystone user-create --name admin --pass admin --email admin@123.cn
    +----------+----------------------------------+
    | Property | Value                            |
    +----------+----------------------------------+
    | email    | admin@123.cn                     |
    | enabled  | True                             |
    | id       | fc9527661139494c9d2985fcdf95dc06 |
    | name     | admin                            |
    | username | admin                            |
    +----------+----------------------------------+
    [root@controller ~]#

Create the admin role

    keystone role-create --name admin

    [root@controller ~]# keystone role-create --name admin
    +----------+----------------------------------+
    | Property | Value                            |
    +----------+----------------------------------+
    | id       | 6e5110bfc3144e4f89745e654202b934 |
    | name     | admin                            |
    +----------+----------------------------------+
    [root@controller ~]#

Add the admin tenant and user to the admin role

    keystone user-role-add --tenant admin --user admin --role admin

Create the _member_ role used for dashboard access

    keystone role-create --name _member_

    [root@controller ~]# keystone role-create --name _member_
    +----------+----------------------------------+
    | Property | Value                            |
    +----------+----------------------------------+
    | id       | 7154d88610014d268e99c41933a63150 |
    | name     | _member_                         |
    +----------+----------------------------------+
    [root@controller ~]#

Add the admin tenant and user to the _member_ role

    keystone user-role-add --tenant admin --user admin --role _member_

Create a demo tenant and user for demonstration

Create the demo tenant

    keystone tenant-create --name demo --description "Demo Tenant"

    [root@controller ~]# keystone tenant-create --name demo --description "Demo Tenant"
    +-------------+----------------------------------+
    | Property    | Value                            |
    +-------------+----------------------------------+
    | description | Demo Tenant                      |
    | enabled     | True                             |
    | id          | 872294473e5a442da0f0197364e98a41 |
    | name        | demo                             |
    +-------------+----------------------------------+

Create the demo user

    keystone user-create --name demo --pass demo --email demo@123.cn

    [root@controller ~]# keystone user-create --name demo --pass demo --email demo@123.cn
    +----------+----------------------------------+
    | Property | Value                            |
    +----------+----------------------------------+
    | email    | demo@123.cn                      |
    | enabled  | True                             |
    | id       | 41ae3374dfd34052aeba97ea855d2794 |
    | name     | demo                             |
    | username | demo                             |
    +----------+----------------------------------+
    [root@controller ~]#

Add the demo tenant and user to the _member_ role

    keystone user-role-add --tenant demo --user demo --role _member_

OpenStack services also need a tenant, user, and role in order to interact with other services, so a service tenant is required. Every OpenStack service is associated with it.

Create the service tenant

    keystone tenant-create --name service --description "Service Tenant"

    [root@controller ~]# keystone tenant-create --name service --description "Service Tenant"
    +-------------+----------------------------------+
    | Property    | Value                            |
    +-------------+----------------------------------+
    | description | Service Tenant                   |
    | enabled     | True                             |
    | id          | 4fd22434679c49038c3ab3ebec5803d9 |
    | name        | service                          |
    +-------------+----------------------------------+
    [root@controller ~]#

Create the service entity and API endpoint

In an OpenStack environment, the Identity service manages a service catalog and uses it to locate the other services in the environment.

Create a service entity for the Identity service:

    keystone service-create --name keystone --type identity --description "OpenStack Identity"

    [root@controller ~]# keystone service-create --name keystone --type identity --description "OpenStack Identity"
    +-------------+----------------------------------+
    | Property    | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Identity               |
    | enabled     | True                             |
    | id          | 2c127d343d4d476c96e0090b90c2dcaf |
    | name        | keystone                         |
    | type        | identity                         |
    +-------------+----------------------------------+
    [root@controller ~]#

In an OpenStack environment, the Identity service also manages the catalog of API endpoints associated with each service. Services use this catalog to communicate with one another. OpenStack provides three API endpoint variants for each service: admin, internal, and public.

    keystone endpoint-create \
      --service-id $(keystone service-list | awk '/identity/ {print $2}') \
      --publicurl http://controller.nice.com:5000/v2.0 \
      --internalurl http://controller.nice.com:5000/v2.0 \
      --adminurl http://controller.nice.com:35357/v2.0 \
      --region regionOne

    [root@controller ~]# keystone endpoint-create --service-id $(keystone service-list| awk '/identity/ {print $2}') --publicurl http://controller.nice.com:5000/v2.0 --internalurl http://controller.nice.com:5000/v2.0 --adminurl http://controller.nice.com:35357/v2.0 --region regionOne
    +-------------+---------------------------------------+
    | Property    | Value                                 |
    +-------------+---------------------------------------+
    | adminurl    | http://controller.nice.com:35357/v2.0 |
    | id          | bfcbe122324f414bbb4372f64237d37a      |
    | internalurl | http://controller.nice.com:5000/v2.0  |
    | publicurl   | http://controller.nice.com:5000/v2.0  |
    | region      | regionOne                             |
    | service_id  | 2c127d343d4d476c96e0090b90c2dcaf      |
    +-------------+---------------------------------------+
    [root@controller ~]#
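The `$(keystone service-list | awk '/identity/ {print $2}')` substitution is what supplies the service ID. The sketch below replays it against a sample table so the field arithmetic is visible. The table layout is an assumption about what `keystone service-list` prints, the ID is the one created above, and `service_id_for_type` is a hypothetical, stricter variant.

```shell
#!/bin/sh
# Sample input standing in for `keystone service-list` (layout assumed).
service_list_sample() {
    cat <<'EOF'
+----------------------------------+----------+----------+--------------------+
| id                               | name     | type     | description        |
+----------------------------------+----------+----------+--------------------+
| 2c127d343d4d476c96e0090b90c2dcaf | keystone | identity | OpenStack Identity |
+----------------------------------+----------+----------+--------------------+
EOF
}

# As used in the walkthrough: awk splits on whitespace, so on a data row
# field 1 is the leading "|" and field 2 is the ID.
service_list_sample | awk '/identity/ {print $2}'

# Hypothetical stricter variant: compare the type column (field 6) exactly,
# so a description that happens to contain the word cannot match.
service_id_for_type() {
    awk -v t="$1" '$6 == t {print $2}'
}
service_list_sample | service_id_for_type identity
```

Both commands print `2c127d343d4d476c96e0090b90c2dcaf` for this sample. The pattern match is case-sensitive, which is why the capitalised "Identity" in the description does not interfere here.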

Verify the operations

Remove the temporary environment variables

    unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT

The session below shows the environment before and after:

    [root@controller ~]# env | grep OS
    HOSTNAME=controller.nice.com
    OS_SERVICE_TOKEN=cb8ae2320d62e8a0e1c4
    OS_SERVICE_ENDPOINT=http://controller.nice.com:35357/v2.0
    [root@controller ~]# unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT
    [root@controller ~]# env | grep OS
    HOSTNAME=controller.nice.com

Request an authentication token as the admin tenant and user

    keystone --os-tenant-name admin --os-username admin --os-password admin --os-auth-url http://controller.nice.com:35357/v2.0 token-get

    [root@controller ~]# keystone --os-tenant-name admin --os-username admin --os-password admin --os-auth-url http://controller.nice.com:35357/v2.0 token-get
    +-----------+----------------------------------+
    | Property  | Value                            |
    +-----------+----------------------------------+
    | expires   | 2024-11-14T23:48:47Z             |
    | id        | cfabd6894b7b498daf75422903bcf73b |
    | tenant_id | df3d2c39592340bea97aa881613c61d1 |
    | user_id   | fc9527661139494c9d2985fcdf95dc06 |
    +-----------+----------------------------------+
    [root@controller ~]#

List the tenants as the admin tenant and user

    keystone --os-tenant-name admin --os-username admin --os-password admin --os-auth-url http://controller.nice.com:35357/v2.0 tenant-list

    [root@controller ~]# keystone --os-tenant-name admin --os-username admin --os-password admin --os-auth-url http://controller.nice.com:35357/v2.0 tenant-list
    +----------------------------------+---------+---------+
    | id                               | name    | enabled |
    +----------------------------------+---------+---------+
    | df3d2c39592340bea97aa881613c61d1 | admin   | True    |
    | 872294473e5a442da0f0197364e98a41 | demo    | True    |
    | 4fd22434679c49038c3ab3ebec5803d9 | service | True    |
    +----------------------------------+---------+---------+
    [root@controller ~]#

List the users as the admin tenant and user

    keystone --os-tenant-name admin --os-username admin --os-password admin --os-auth-url http://controller.nice.com:35357/v2.0 user-list

    [root@controller ~]# keystone --os-tenant-name admin --os-username admin --os-password admin --os-auth-url http://controller.nice.com:35357/v2.0 user-list
    +----------------------------------+-------+---------+--------------+
    | id                               | name  | enabled | email        |
    +----------------------------------+-------+---------+--------------+
    | fc9527661139494c9d2985fcdf95dc06 | admin | True    | admin@123.cn |
    | 41ae3374dfd34052aeba97ea855d2794 | demo  | True    | demo@123.cn  |
    +----------------------------------+-------+---------+--------------+
    [root@controller ~]#

Request an authentication token as the demo tenant and user

    keystone --os-tenant-name demo --os-username demo --os-password demo --os-auth-url http://controller.nice.com:35357/v2.0 token-get

    [root@controller ~]# keystone --os-tenant-name demo --os-username demo --os-password demo --os-auth-url http://controller.nice.com:35357/v2.0 token-get
    +-----------+----------------------------------+
    | Property  | Value                            |
    +-----------+----------------------------------+
    | expires   | 2024-11-14T23:53:53Z             |
    | id        | 33d4cc0c2dc6422fa2ca9644894e32a4 |
    | tenant_id | 872294473e5a442da0f0197364e98a41 |
    | user_id   | 41ae3374dfd34052aeba97ea855d2794 |
    +-----------+----------------------------------+
    [root@controller ~]#

List the users as the demo tenant and user. The request is rejected because demo does not hold the admin role.

    keystone --os-tenant-name demo --os-username demo --os-password demo --os-auth-url http://controller.nice.com:35357/v2.0 user-list

    [root@controller ~]# keystone --os-tenant-name demo --os-username demo --os-password demo --os-auth-url http://controller.nice.com:35357/v2.0 user-list
    You are not authorized to perform the requested action: admin_required (HTTP 403)
    [root@controller ~]#

Create OpenStack client environment scripts for switching identities quickly

admin-openrc.sh

    export OS_TENANT_NAME=admin
    export OS_USERNAME=admin
    export OS_PASSWORD=admin
    export OS_AUTH_URL=http://controller.nice.com:35357/v2.0

demo-openrc.sh

    export OS_TENANT_NAME=demo
    export OS_USERNAME=demo
    export OS_PASSWORD=demo
    export OS_AUTH_URL=http://controller.nice.com:5000/v2.0

Switch identities by sourcing the scripts

    [root@controller ~]# keystone user-list
    Expecting an auth URL via either --os-auth-url or env[OS_AUTH_URL]
    [root@controller ~]# source admin-openrc.sh
    [root@controller ~]# keystone user-list
    +----------------------------------+-------+---------+--------------+
    | id                               | name  | enabled | email        |
    +----------------------------------+-------+---------+--------------+
    | fc9527661139494c9d2985fcdf95dc06 | admin | True    | admin@123.cn |
    | 41ae3374dfd34052aeba97ea855d2794 | demo  | True    | demo@123.cn  |
    +----------------------------------+-------+---------+--------------+
    [root@controller ~]# source demo-openrc.sh
    [root@controller ~]# keystone user-list
    You are not authorized to perform the requested action: admin_required (HTTP 403)
    [root@controller ~]#
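The two openrc files differ only in their values, so they can be produced by one small function. This is a sketch rather than part of the original procedure. `write_openrc` is a made-up name, and the `chmod 600` is an added precaution because the file stores a plaintext password.

```shell
#!/bin/sh
# Hypothetical helper: write an openrc file for one tenant/user pair.
# Usage: write_openrc <file> <tenant> <user> <password> <auth-url>
write_openrc() {
    cat > "$1" <<EOF
export OS_TENANT_NAME=$2
export OS_USERNAME=$3
export OS_PASSWORD=$4
export OS_AUTH_URL=$5
EOF
    # Added precaution: only the owner may read the stored password.
    chmod 600 "$1"
}

# Demonstration in a scratch directory, using the values from this guide.
demo_dir=$(mktemp -d)
write_openrc "$demo_dir/admin-openrc.sh" admin admin admin http://controller.nice.com:35357/v2.0
write_openrc "$demo_dir/demo-openrc.sh"  demo  demo  demo  http://controller.nice.com:5000/v2.0
cat "$demo_dir/demo-openrc.sh"
rm -rf "$demo_dir"
```

Sourcing a generated file (`source admin-openrc.sh`) has the same effect as sourcing the hand-written one, since the exported variables are identical.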

Glance service setup (controller node)

Prerequisites

Create the glance database

    mysql -uroot -p

    CREATE DATABASE glance ;
    grant all privileges on glance.* to `glance`@`localhost` identified by 'glance' ;
    grant all privileges on glance.* to `glance`@`%` identified by 'glance' ;

Load the admin identity

Create the authentication credentials

Create the glance user

    keystone user-create --name glance --pass glance

    [root@controller ~]# keystone user-create --name glance --pass glance
    +----------+----------------------------------+
    | Property | Value                            |
    +----------+----------------------------------+
    | email    |                                  |
    | enabled  | True                             |
    | id       | 1f14c7a785b24a0d8ec4ca2e5a9a413c |
    | name     | glance                           |
    | username | glance                           |
    +----------+----------------------------------+
    [root@controller ~]#

Link the glance user to the service tenant and the admin role

    keystone user-role-add --user glance --tenant service --role admin

Create the glance service

    keystone service-create --name glance --type image --description "OpenStack Image Service"

    [root@controller ~]# keystone service-create --name glance --type image --description "OpenStack Image Service"
    +-------------+----------------------------------+
    | Property    | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Image Service          |
    | enabled     | True                             |
    | id          | aad24925355e4259a68f6ecbf916d0c5 |
    | name        | glance                           |
    | type        | image                            |
    +-------------+----------------------------------+
    [root@controller ~]#

Create the Identity service endpoint for the OpenStack Image service

    keystone endpoint-create \
      --service-id $(keystone service-list | awk '/image/ {print $2}') \
      --publicurl http://controller.nice.com:9292 \
      --internalurl http://controller.nice.com:9292 \
      --adminurl http://controller.nice.com:9292 \
      --region regionOne

    [root@controller ~]# keystone endpoint-create \
    > --service-id $(keystone service-list | awk '/image/ {print $2}') \
    > --publicurl http://controller.nice.com:9292 \
    > --internalurl http://controller.nice.com:9292 \
    > --adminurl http://controller.nice.com:9292 \
    > --region regionOne
    +-------------+----------------------------------+
    | Property    | Value                            |
    +-------------+----------------------------------+
    | adminurl    | http://controller.nice.com:9292  |
    | id          | 9bc2d67dadd14632bc11aca14ed395c8 |
    | internalurl | http://controller.nice.com:9292  |
    | publicurl   | http://controller.nice.com:9292  |
    | region      | regionOne                        |
    | service_id  | aad24925355e4259a68f6ecbf916d0c5 |
    +-------------+----------------------------------+
    [root@controller ~]#

Install and configure the glance packages

Install

    yum install openstack-glance python-glanceclient

Edit the configuration file /etc/glance/glance-api.conf: the [database], [keystone_authtoken], and [paste_deploy] sections

    [database]
    ...
    connection=mysql://glance:glance@controller.nice.com/glance

    [keystone_authtoken]
    ...
    auth_uri=http://controller.nice.com:5000/v2.0
    identity_uri=http://controller.nice.com:35357
    admin_tenant_name=service
    admin_user=glance
    admin_password=glance

    [paste_deploy]
    ...
    flavor=keystone

Save and exit.

Edit /etc/glance/glance-registry.conf: the [database], [keystone_authtoken], and [paste_deploy] sections

    [database]
    ...
    connection=mysql://glance:glance@controller.nice.com/glance

    [keystone_authtoken]
    ...
    auth_uri=http://controller.nice.com:5000/v2.0
    identity_uri=http://controller.nice.com:35357
    admin_tenant_name=service
    admin_user=glance
    admin_password=glance

    [paste_deploy]
    ...
    flavor=keystone

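In the [glance_store] section, configure the local file system store and the directory where image files are kept. Add the section if it does not exist. These settings are read by glance-api, so the section normally belongs in /etc/glance/glance-api.conf.

    [glance_store]
    default_store=file
    filesystem_store_datadir=/var/lib/glance/images/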
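After editing several INI-style files by hand, it is easy to leave a key in the wrong section. The sketch below reads a key back from a named section so the result can be checked. `ini_get` is a hypothetical helper written for this walkthrough (not an OpenStack tool), and the demonstration uses a scratch file with the values shown above.

```shell
#!/bin/sh
# Hypothetical helper: print the value of <key> inside [<section>].
# Usage: ini_get <file> <section> <key>
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        # A line starting with "[" opens a new section; remember if it is ours.
        /^\[/ { in_section = ($0 == section); next }
        in_section {
            split($0, kv, "=")
            name = kv[1]
            gsub(/[ \t]/, "", name)            # tolerate "key = value" spacing
            if (name == key) {
                sub(/^[^=]*=[ \t]*/, "")       # drop everything up to the first "="
                print
                exit
            }
        }
    ' "$1"
}

# Demonstration on a scratch file holding the section from this guide.
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
[glance_store]
default_store=file
filesystem_store_datadir=/var/lib/glance/images/
EOF
ini_get "$demo_conf" glance_store default_store             # prints: file
ini_get "$demo_conf" glance_store filesystem_store_datadir  # prints: /var/lib/glance/images/
rm -f "$demo_conf"
```

On the controller the same call would be `ini_get /etc/glance/glance-api.conf glance_store default_store`. An empty result means the key is missing or sits under a different section header.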

Save and exit.

Initialize the Image service database

    su -s /bin/sh -c "glance-manage db_sync" glance

Start the glance services

    systemctl enable openstack-glance-api.service openstack-glance-registry.service
    systemctl start openstack-glance-api.service openstack-glance-registry.service

Verify the installation

Test image: https://launchpad.net/cirros/trunk/0.3.0/+download/cirros-0.3.0-x86_64-disk.img

For downloading the image, see the CirrOS project.

    source admin-openrc.sh
    glance image-create --name "cirros-0.3.0-x86_64" --file cirros-0.3.0-x86_64-disk.img \
      --disk-format qcow2 --container-format bare --is-public True --progress

    [root@controller ~]# glance image-create --name "cirros-0.3.0-x86_64" --file cirros-0.3.0-x86_64-disk.img \
    > --disk-format qcow2 --container-format bare --is-public True --progress
    [=============================>] 100%
    +------------------+--------------------------------------+
    | Property         | Value                                |
    +------------------+--------------------------------------+
    | checksum         | 50bdc35edb03a38d91b1b071afb20a3c     |
    | container_format | bare                                 |
    | created_at       | 2024-11-17T14:14:10                  |
    | deleted          | False                                |
    | deleted_at       | None                                 |
    | disk_format      | qcow2                                |
    | id               | b3f733d1-9e8f-4e0f-becb-2eb44229b5a2 |
    | is_public        | True                                 |
    | min_disk         | 0                                    |
    | min_ram          | 0                                    |
    | name             | cirros-0.3.0-x86_64                  |
    | owner            | df3d2c39592340bea97aa881613c61d1     |
    | protected        | False                                |
    | size             | 9761280                              |
    | status           | active                               |
    | updated_at       | 2024-11-17T14:14:10                  |
    | virtual_size     | None                                 |
    +------------------+--------------------------------------+
    [root@controller ~]#

    [root@controller ~]# glance image-list
    +--------------------------------------+---------------------+-------------+------------------+---------+--------+
    | ID                                   | Name                | Disk Format | Container Format | Size    | Status |
    +--------------------------------------+---------------------+-------------+------------------+---------+--------+
    | b3f733d1-9e8f-4e0f-becb-2eb44229b5a2 | cirros-0.3.0-x86_64 | qcow2       | bare             | 9761280 | active |
    +--------------------------------------+---------------------+-------------+------------------+---------+--------+
    [root@controller ~]#

Nova service setup

Steps on the controller node

Create the nova database

    mysql -uroot -p

    CREATE DATABASE nova ;
    grant all privileges on nova.* to `nova`@`localhost` identified by 'nova' ;
    grant all privileges on nova.* to `nova`@`%` identified by 'nova' ;

Source the admin environment script

Create the Compute service credentials in the Identity service

Create the nova user

    keystone user-create --name nova --pass nova

    [root@controller ~]# keystone user-create --name nova --pass nova
    +----------+----------------------------------+
    | Property | Value                            |
    +----------+----------------------------------+
    | email    |                                  |
    | enabled  | True                             |
    | id       | 1348970f15bc402a9b3ee9e0ff78f1a6 |
    | name     | nova                             |
    | username | nova                             |
    +----------+----------------------------------+
    [root@controller ~]#

Link the nova user to the service tenant and the admin role

    keystone user-role-add --user nova --tenant service --role admin

Create the nova service

    keystone service-create --name nova --type compute --description "OpenStack Compute"

    [root@controller ~]# keystone service-create --name nova --type compute --description "OpenStack Compute"
    +-------------+----------------------------------+
    | Property    | Value                            |
    +-------------+----------------------------------+
    | description | OpenStack Compute                |
    | enabled     | True                             |
    | id          | de34ad20177c461889a39dc02eaad208 |
    | name        | nova                             |
    | type        | compute                          |
    +-------------+----------------------------------+
    [root@controller ~]#

Create the Compute service endpoint

    keystone endpoint-create \
      --service-id $(keystone service-list | awk '/compute/{print $2}') \
      --publicurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \
      --internalurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \
      --adminurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \
      --region regionOne

    [root@controller ~]# keystone endpoint-create \
    > --service-id $(keystone service-list | awk '/compute/{print $2}') \
    > --publicurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \
    > --internalurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \
    > --adminurl http://controller.nice.com:8774/v2/%\(tenant_id\)s \
    > --region regionOne
    +-------------+--------------------------------------------------+
    | Property    | Value                                            |
    +-------------+--------------------------------------------------+
    | adminurl    | http://controller.nice.com:8774/v2/%(tenant_id)s |
    | id          | 69ac5996abd342ee804df2c271880fd8                 |
    | internalurl | http://controller.nice.com:8774/v2/%(tenant_id)s |
    | publicurl   | http://controller.nice.com:8774/v2/%(tenant_id)s |
    | region      | regionOne                                        |
    | service_id  | de34ad20177c461889a39dc02eaad208                 |
    +-------------+--------------------------------------------------+
    [root@controller ~]#

安装和配置计算控制组件(controller节点) 安装软件包 1 yum install openstack-nova-api openstack-nova-cert openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler python-novaclient

编辑/etc/nova/nova.conf [database]小结 1 2 3 [database] connection=mysql://nova:nova@controller.nice.com/nova

编辑[DEFAULT]节点 1 2 3 4 5 6 [DEFAULT] ... rpc_backend=rabbit rabbit_host=controller.nice.com rabbit_password=guest ....

编辑[DEFAULT]节点和[keystone_auth] 1 2 3 4 5 6 7 8 9 10 11 12 13 14 [DEFAULT] ... auth_strategy=keystone [keystone_authtoken] ... auth_uri=http://controller.nice.com:5000/v2.0 identity_uri=http://controller.nice.com:35357 admin_tenant_name=service admin_user=nova admin_password=nova ...

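In the [DEFAULT] section, set my_ip to the management IP address of the controller:

    [DEFAULT]
    ...
    my_ip=192.168.222.5

In the [DEFAULT] section, configure VNC to use the same management address:

    [DEFAULT]
    ...
    vncserver_listen=192.168.222.5
    vncserver_proxyclient_address=192.168.222.5
    ...

In the [glance] section, set the host of the Image service:

    [glance]
    ...
    host=controller.nice.com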
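Because these settings are spread over several sections of one file, it can help to read them back after editing. The following is a minimal sketch of such a check, assuming a plain `key=value` INI layout like the snippets above; `ini_get` is a hypothetical helper written for this illustration, not a tool shipped with OpenStack, and the demo runs against a temporary file rather than the real /etc/nova/nova.conf.

```shell
# Hypothetical helper: print the value of KEY inside [SECTION] of an INI file.
# Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        /^[[:space:]]*\[/ {
            # Remember which section we are in, ignoring stray whitespace.
            current = $0
            gsub(/[[:space:]]/, "", current)
            next
        }
        current == section {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            gsub(/[[:space:]]/, "", k)
            if (k == key) {
                v = substr($0, eq + 1)
                sub(/^[[:space:]]+/, "", v)
                sub(/[[:space:]]+$/, "", v)
                print v
                exit
            }
        }' "$1"
}

# Demo against a throwaway file that mirrors the values used in this guide.
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
[DEFAULT]
rpc_backend=rabbit
my_ip=192.168.222.5

[database]
connection=mysql://nova:nova@controller.nice.com/nova
EOF

ini_get "$demo_conf" database connection
ini_get "$demo_conf" DEFAULT my_ip
rm -f "$demo_conf"
```

Commented-out keys such as `#my_ip=...` are not matched, since the key is compared exactly.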

Populate the Compute database:

    su -s /bin/sh -c "nova-manage db sync" nova

Enable the Compute controller services at boot and start them:

    systemctl enable openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service
    systemctl start openstack-nova-api.service openstack-nova-cert.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

Verify that the services are up:

    [root@controller ~]# nova service-list
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    | Id | Binary           | Host                | Zone     | Status  | State | Updated_at                 | Disabled Reason |
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    | 1  | nova-consoleauth | controller.nice.com | internal | enabled | up    | 2024-11-17T16:34:02.000000 | -               |
    | 2  | nova-cert        | controller.nice.com | internal | enabled | up    | 2024-11-17T16:34:02.000000 | -               |
    | 3  | nova-scheduler   | controller.nice.com | internal | enabled | up    | 2024-11-17T16:33:59.000000 | -               |
    | 4  | nova-conductor   | controller.nice.com | internal | enabled | up    | 2024-11-17T16:34:07.000000 | -               |
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    [root@controller ~]#

Install and configure a compute node (on the compute node)

Compute node initialization

Disable the firewall:

    systemctl stop firewalld
    systemctl disable firewalld

Disable SELinux:

    [root@computer1 ~]# setenforce 0
    [root@computer1 ~]# vi /etc/selinux/config
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of these two values:
    #     targeted - Targeted processes are protected,
    #     minimum - Modification of targeted policy. Only selected processes are protected.
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted

Disable the NetworkManager daemon:

    systemctl stop NetworkManager
    systemctl disable NetworkManager

Set the hostname:

    hostnamectl set-hostname computer1.nice.com

Configure the network interfaces (management network first, then the application network):

    [root@computer1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eno16777736
    HWADDR=00:0C:29:49:67:30
    TYPE=Ethernet
    BOOTPROTO=static
    IPADDR=192.168.222.10
    NETMASK=255.255.255.0
    DEFROUTE=yes
    PEERDNS=yes
    PEERROUTES=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_PEERDNS=yes
    IPV6_PEERROUTES=yes
    IPV6_FAILURE_FATAL=no
    NAME=eno16777736
    UUID=387dc370-59f7-4518-b20e-0d6bcbe5ed8e
    ONBOOT=yes
    [root@computer1 ~]#
    [root@computer1 ~]#
    [root@computer1 ~]# cat /etc/sysconfig/network-scripts/ifcfg-eno33554960
    HWADDR=00:0C:29:49:67:3A
    TYPE=Ethernet
    BOOTPROTO=static
    IPADDR=172.16.0.10
    NETMASK=255.255.255.0
    DEFROUTE=yes
    PEERDNS=yes
    PEERROUTES=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_PEERDNS=yes
    IPV6_PEERROUTES=yes
    IPV6_FAILURE_FATAL=no
    NAME=eno33554960
    UUID=b6f463e3-1838-499c-bf3e-3f48e5365a53
    ONBOOT=yes
    [root@computer1 ~]#

Name resolution

Add or update the following entries in /etc/hosts:

    192.168.222.5 controller.nice.com
    192.168.222.6 network.nice.com
    192.168.222.10 computer1.nice.com
    192.168.222.20 block1.nice.com
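Since the same entries have to be added on every node, a small idempotent helper avoids duplicate lines when the step is repeated. This is only a sketch: `add_host_entry` is a hypothetical function, and the demo writes to a temporary file instead of the real /etc/hosts.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME already
# appears as a hostname on a non-comment line.
# Usage: add_host_entry FILE IP NAME
add_host_entry() {
    hosts_file=$1; ip=$2; name=$3
    if awk -v name="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
            END { exit found ? 0 : 1 }' "$hosts_file"; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Demo on a throwaway file; the second call for the controller is a no-op.
demo_hosts=$(mktemp)
add_host_entry "$demo_hosts" 192.168.222.5 controller.nice.com
add_host_entry "$demo_hosts" 192.168.222.5 controller.nice.com
add_host_entry "$demo_hosts" 192.168.222.10 computer1.nice.com
cat "$demo_hosts"
rm -f "$demo_hosts"
```

The helper only checks the hostname, so an existing entry with a different IP address is left untouched and would need to be corrected by hand.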

Yum repository changes

    [root@computer1 ~]# cat /etc/yum.repos.d/CentOS-Base.repo
    # CentOS-Base.repo
    #
    # The mirror system uses the connecting IP address of the client and the
    # update status of each mirror to pick mirrors that are updated to and
    # geographically close to the client. You should use this for CentOS updates
    # unless you are manually picking other mirrors.
    #
    # If the mirrorlist= does not work for you, as a fall back you can try the
    # remarked out baseurl= line instead.
    #
    #
    [base]
    name=CentOS-$releasever - Base - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
    #released updates
    [updates]
    name=CentOS-$releasever - Updates - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
    #additional packages that may be useful
    [extras]
    name=CentOS-$releasever - Extras - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
    #additional packages that extend functionality of existing packages
    [centosplus]
    name=CentOS-$releasever - Plus - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7
    #contrib - packages by Centos Users
    [contrib]
    name=CentOS-$releasever - Contrib - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

OpenStack yum repository:

    [root@network yum.repos.d]# cat CentOS-OpenStack-juno.repo
    [centotack-juno]
    name=openstack-juno
    baseurl=https://repos.fedorapeople.org/openstack/EOL/openstack-juno/epel-7/
    enabled=1
    gpgcheck=0

Install the EPEL yum repository (Aliyun mirror):

    wget -O /etc/yum.repos.d/epel-7.repo http://mirrors.aliyun.com/repo/epel-7.repo

Install yum-plugin-priorities so that packages from higher-priority repositories are not overridden by packages from lower-priority ones:

    yum -y install yum-plugin-priorities

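Update the operating system.

Time synchronization

Install the time synchronization client, then schedule periodic synchronization against the controller. Note that the cron entry below runs every two minutes (`*/2`), not every minute:

    [root@computer1 ~]# crontab -l
    */2 * * * * /sbin/ntpdate -u controller.nice.com &>/dev/null
    [root@computer1 ~]#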

Install the packages:

    yum install openstack-nova-compute sysfsutils

Edit /etc/nova/nova.conf. In the [DEFAULT] section, configure RabbitMQ access:

    [DEFAULT]
    ...
    rpc_backend=rabbit
    rabbit_host=controller.nice.com
    rabbit_password=guest

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

    [DEFAULT]
    ...
    auth_strategy=keystone
    ...
    [keystone_authtoken]
    ...
    auth_uri=http://controller.nice.com:5000/v2.0
    identity_uri=http://controller.nice.com:35357
    admin_tenant_name=service
    admin_user=nova
    admin_password=nova
    ...

In the [DEFAULT] section, set my_ip to the management IP address of the compute node:

    [DEFAULT]
    ...
    my_ip=192.168.222.10

In the [DEFAULT] section, enable and configure remote console access:

    [DEFAULT]
    ...
    vnc_enabled=True
    vncserver_listen=0.0.0.0
    vncserver_proxyclient_address=192.168.222.10
    novncproxy_base_url=http://controller.nice.com:6080/vnc_auto.html

In the [glance] section, set the host of the Image service:

    [glance]
    ...
    host=controller.nice.com

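Finalize the installation

Check whether the compute node supports hardware virtualization:

    egrep -c '(vmx|svm)' /proc/cpuinfo

On this test machine the result is 0:

    [root@computer1 ~]# egrep -c '(vmx|svm)' /proc/cpuinfo
    0
    [root@computer1 ~]#

A result of 1 or more means the compute node's hardware supports virtualization, and no extra configuration is needed.

A result of 0 means the compute node does not support hardware virtualization, so libvirt must be configured to use QEMU instead of KVM.

In that case, edit the [libvirt] section of /etc/nova/nova.conf:

    [libvirt]
    ...
    virt_type=qemu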
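The decision rule above (count of `vmx`/`svm` flags is at least 1 means KVM, 0 means QEMU) can be expressed as a short function. This is a sketch only; `pick_virt_type` is a hypothetical name, and the optional path argument exists so the logic can be tried against a sample file instead of the real /proc/cpuinfo.

```shell
# Hypothetical helper: print "kvm" when the CPU flags advertise hardware
# virtualization (Intel vmx or AMD svm), otherwise print "qemu".
# Usage: pick_virt_type [CPUINFO_FILE]
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # grep -c prints the number of matching lines; empty if the file is missing.
    count=$(grep -Ec '(vmx|svm)' "$cpuinfo" 2>/dev/null)
    if [ "${count:-0}" -ge 1 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# Prints the value that would go into virt_type= on this machine.
pick_virt_type
```

The printed value corresponds to the `virt_type` setting in the [libvirt] section; the function does not modify nova.conf itself.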

Enable the Compute service and its dependencies at boot, then start them:

    systemctl enable libvirtd.service openstack-nova-compute.service
    systemctl start libvirtd.service
    systemctl start openstack-nova-compute.service

On the controller node, verify that the nova-compute service has come online:

    [root@controller ~]# nova service-list
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    | Id | Binary           | Host                | Zone     | Status  | State | Updated_at                 | Disabled Reason |
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    | 1  | nova-consoleauth | controller.nice.com | internal | enabled | up    | 2024-11-17T17:04:03.000000 | -               |
    | 2  | nova-cert        | controller.nice.com | internal | enabled | up    | 2024-11-17T17:04:03.000000 | -               |
    | 3  | nova-scheduler   | controller.nice.com | internal | enabled | up    | 2024-11-17T17:04:10.000000 | -               |
    | 4  | nova-conductor   | controller.nice.com | internal | enabled | up    | 2024-11-17T17:04:08.000000 | -               |
    | 5  | nova-compute     | computer1.nice.com  | nova     | enabled | up    | -                          | -               |
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    [root@controller ~]#
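Reading the State column by eye works for five services, but a filter makes the check repeatable. The sketch below lists every service whose state is not `up`; `services_not_up` is a hypothetical helper, and the `down` row in the sample is invented purely to show a non-empty result.

```shell
# Hypothetical helper: read `nova service-list` table output on stdin and
# print "binary@host" for each service whose State column is not "up".
services_not_up() {
    awk -F'|' '
        NF >= 9 {
            binary = $3; host = $4; state = $7
            gsub(/[[:space:]]/, "", binary)
            gsub(/[[:space:]]/, "", host)
            gsub(/[[:space:]]/, "", state)
            if (binary != "Binary" && state != "up") print binary "@" host
        }'
}

# Sample rows; the "down" state on the last row is made up for the demo.
sample_services='| Id | Binary           | Host                | Zone     | Status  | State | Updated_at                 | Disabled Reason |
| 4  | nova-conductor   | controller.nice.com | internal | enabled | up    | 2024-11-17T17:04:08.000000 | -               |
| 5  | nova-compute     | computer1.nice.com  | nova     | enabled | down  | -                          | -               |'

printf '%s\n' "$sample_services" | services_not_up
```

On the controller this could be used as `nova service-list | services_not_up`; empty output would mean every listed service reports `up`.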

List the images through nova:

    [root@controller ~]# nova image-list
    +--------------------------------------+---------------------+--------+--------+
    | ID                                   | Name                | Status | Server |
    +--------------------------------------+---------------------+--------+--------+
    | b3f733d1-9e8f-4e0f-becb-2eb44229b5a2 | cirros-0.3.0-x86_64 | ACTIVE |        |
    +--------------------------------------+---------------------+--------+--------+

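Neutron host configuration

Prerequisites (on the controller node)

Create the database and grant access to the neutron user. The host in the second grant must be exactly `%`, with no trailing space:

    CREATE DATABASE neutron;
    grant all privileges on neutron.* to `neutron`@`localhost` identified by 'neutron';
    grant all privileges on neutron.* to `neutron`@`%` identified by 'neutron';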
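The same three statements are needed for each service database in this guide, differing only in the database name, user, and password. As a sketch under that assumption, the function below prints them for any service; `service_db_sql` is a hypothetical name, and quoting the account with single quotes rather than backticks is a stylistic choice of this example.

```shell
# Hypothetical helper: print the CREATE DATABASE / GRANT statements for one
# service. Usage: service_db_sql DATABASE USER PASSWORD
service_db_sql() {
    db=$1; user=$2; pass=$3
    cat <<EOF
CREATE DATABASE ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
EOF
}

# Prints the statements for the neutron database used in this guide.
service_db_sql neutron neutron neutron
```

The output is plain SQL text; it could be reviewed first and then piped into the mysql client as the database root user.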

Source the admin environment script, then create the Networking service credentials in the Identity service.

Create the user:

    keystone user-create --name neutron --pass neutron

Sample output:

    [root@controller ~]# keystone user-create --name neutron --pass neutron
    +----------+----------------------------------+
    | Property |              Value               |
    +----------+----------------------------------+
    |  email   |                                  |
    | enabled  |               True               |
    |    id    | ff37d5cecea4426aa3770bf43c1d76ff |
    |   name   |             neutron              |
    | username |             neutron              |
    +----------+----------------------------------+

Add the neutron user to the service tenant with the admin role:

    keystone user-role-add --user neutron --tenant service --role admin

Create the neutron service:

    keystone service-create --name neutron --type network --description "OpenStack Network"

Sample output:

    [root@controller ~]# keystone service-create --name neutron --type network --description "OpenStack Network"
    +-------------+----------------------------------+
    |   Property  |              Value               |
    +-------------+----------------------------------+
    | description |        OpenStack Network         |
    |   enabled   |               True               |
    |      id     | 5381d1e1563f4c31b497efcd95a133b1 |
    |     name    |             neutron              |
    |     type    |             network              |
    +-------------+----------------------------------+

Create the neutron service endpoint:

    keystone endpoint-create \
      --service-id $(keystone service-list|awk '/network/{print $2}') \
      --publicurl http://controller.nice.com:9696 \
      --adminurl http://controller.nice.com:9696 \
      --internalurl http://controller.nice.com:9696 \
      --region regionOne

Sample output:

    [root@controller ~]# keystone endpoint-create \
    > --service-id $(keystone service-list|awk '/network/{print $2}') \
    > --publicurl http://controller.nice.com:9696 \
    > --adminurl http://controller.nice.com:9696 \
    > --internalurl http://controller.nice.com:9696 \
    > --region regionOne
    +-------------+----------------------------------+
    |   Property  |              Value               |
    +-------------+----------------------------------+
    |   adminurl  | http://controller.nice.com:9696  |
    |      id     | 192db2f2a5154baabe0791641c9c08b8 |
    | internalurl | http://controller.nice.com:9696  |
    |  publicurl  | http://controller.nice.com:9696  |
    |    region   |            regionOne             |
    |  service_id | 5381d1e1563f4c31b497efcd95a133b1 |
    +-------------+----------------------------------+
    [root@controller ~]#

Install the Networking service components (on the controller node):

    yum install openstack-neutron openstack-neutron-ml2 python-neutronclient which

Error encountered during installation (1): python-eventlet conflict

The installation fails because python-neutron conflicts with the installed python2-eventlet package (yum output kept verbatim from a Chinese-locale system):

    [root@controller ~]# yum install openstack-neutron
    已加载插件:fastestmirror, priorities
    Loading mirror speeds from cached hostfile
     * base: mirrors.aliyun.com
     * extras: mirrors.aliyun.com
     * updates: mirrors.aliyun.com
    正在解决依赖关系
    --> 正在检查事务
    ---> 软件包 openstack-neutron.noarch.0.2014.2.3-1.el7 将被 安装
    --> 正在处理依赖关系 python-neutron = 2014.2.3-1.el7,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在处理依赖关系 radvd,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在处理依赖关系 keepalived,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在处理依赖关系 dnsmasq-utils,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在处理依赖关系 conntrack-tools,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在检查事务
    ---> 软件包 conntrack-tools.x86_64.0.1.4.4-7.el7 将被 安装
    --> 正在处理依赖关系 libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.1)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.0)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_cthelper.so.0(LIBNETFILTER_CTHELPER_1.0)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_queue.so.1()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_cttimeout.so.1()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_cthelper.so.0()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    ---> 软件包 dnsmasq-utils.x86_64.0.2.76-17.el7_9.3 将被 安装
    ---> 软件包 keepalived.x86_64.0.1.3.5-19.el7 将被 安装
    --> 正在处理依赖关系 libnetsnmpmibs.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要
    --> 正在处理依赖关系 libnetsnmpagent.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要
    --> 正在处理依赖关系 libnetsnmp.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要
    ---> 软件包 python-neutron.noarch.0.2014.2.3-1.el7 将被 安装
    --> 正在处理依赖关系 python-jsonrpclib,它被软件包 python-neutron-2014.2.3-1.el7.noarch 需要
    ---> 软件包 radvd.x86_64.0.2.17-3.el7 将被 安装
    --> 正在检查事务
    ---> 软件包 libnetfilter_cthelper.x86_64.0.1.0.0-11.el7 将被 安装
    ---> 软件包 libnetfilter_cttimeout.x86_64.0.1.0.0-7.el7 将被 安装
    ---> 软件包 libnetfilter_queue.x86_64.0.1.0.2-2.el7_2 将被 安装
    ---> 软件包 net-snmp-agent-libs.x86_64.1.5.7.2-49.el7_9.4 将被 安装
    --> 正在处理依赖关系 libsensors.so.4()(64bit),它被软件包 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 需要
    ---> 软件包 net-snmp-libs.x86_64.1.5.7.2-49.el7_9.4 将被 安装
    ---> 软件包 python-jsonrpclib.noarch.0.0.1.3-2.el7 将被 安装
    --> 正在检查事务
    ---> 软件包 lm_sensors-libs.x86_64.0.3.4.0-8.20160601gitf9185e5.el7_9.1 将被 安装
    --> 处理 python-neutron-2014.2.3-1.el7.noarch 与 python-eventlet >= 0.16.0 的冲突
    --> 解决依赖关系完成
    错误:python-neutron conflicts with python2-eventlet-0.18.4-1.el7.noarch
     您可以尝试添加 --skip-broken 选项来解决该问题
     您可以尝试执行:rpm -Va --nofiles --nodigest

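Check which eventlet packages are available:

    [root@controller ~]# yum list |grep python | grep eventlet
    python2-eventlet.noarch        0.18.4-1.el7    @epel
    python-eventlet.noarch         0.15.2-1.el7    centotack-juno
    python2-eventlet-doc.noarch    0.18.4-1.el7    epel

The EPEL repository provides the newer 0.18.4 package, which is the one currently installed, while OpenStack Juno needs the older 0.15.2 build: python-neutron 2014.2.3 conflicts with python-eventlet >= 0.16.0. Remove the installed newer package:

    rpm -e --nodeps python2-eventlet-0.18.4-1.el7.noarch

Remove the EPEL repository, then rebuild the yum cache:

    yum clean all
    yum makecache

Install the older version explicitly:

    yum install python-eventlet-0.15.2-1.el7.noarch

Then run the installation again:

    yum install openstack-neutron openstack-neutron-ml2 which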

Error encountered during installation (2): missing python-ncclient

This time the installation fails because openstack-neutron-ml2 requires python-ncclient, which cannot be resolved (yum output kept verbatim):

    [root@controller yum.repos.d]# yum install openstack-neutron openstack-neutron-ml2 which
    已加载插件:fastestmirror, priorities
    Loading mirror speeds from cached hostfile
     * base: mirrors.aliyun.com
     * extras: mirrors.aliyun.com
     * updates: mirrors.aliyun.com
    软件包 which-2.20-7.el7.x86_64 已安装并且是最新版本
    正在解决依赖关系
    --> 正在检查事务
    ---> 软件包 openstack-neutron.noarch.0.2014.2.3-1.el7 将被 安装
    --> 正在处理依赖关系 python-neutron = 2014.2.3-1.el7,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在处理依赖关系 radvd,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在处理依赖关系 keepalived,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在处理依赖关系 dnsmasq-utils,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    --> 正在处理依赖关系 conntrack-tools,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要
    ---> 软件包 openstack-neutron-ml2.noarch.0.2014.2.3-1.el7 将被 安装
    --> 正在处理依赖关系 python-ncclient,它被软件包 openstack-neutron-ml2-2014.2.3-1.el7.noarch 需要
    --> 正在检查事务
    ---> 软件包 conntrack-tools.x86_64.0.1.4.4-7.el7 将被 安装
    --> 正在处理依赖关系 libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.1)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.0)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_cthelper.so.0(LIBNETFILTER_CTHELPER_1.0)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_queue.so.1()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_cttimeout.so.1()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    --> 正在处理依赖关系 libnetfilter_cthelper.so.0()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要
    ---> 软件包 dnsmasq-utils.x86_64.0.2.76-17.el7_9.3 将被 安装
    ---> 软件包 keepalived.x86_64.0.1.3.5-19.el7 将被 安装
    --> 正在处理依赖关系 libnetsnmpmibs.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要
    --> 正在处理依赖关系 libnetsnmpagent.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要
    --> 正在处理依赖关系 libnetsnmp.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要
    ---> 软件包 openstack-neutron-ml2.noarch.0.2014.2.3-1.el7 将被 安装
    --> 正在处理依赖关系 python-ncclient,它被软件包 openstack-neutron-ml2-2014.2.3-1.el7.noarch 需要
    ---> 软件包 python-neutron.noarch.0.2014.2.3-1.el7 将被 安装
    --> 正在处理依赖关系 python-jsonrpclib,它被软件包 python-neutron-2014.2.3-1.el7.noarch 需要
    ---> 软件包 radvd.x86_64.0.2.17-3.el7 将被 安装
    --> 正在检查事务
    ---> 软件包 libnetfilter_cthelper.x86_64.0.1.0.0-11.el7 将被 安装
    ---> 软件包 libnetfilter_cttimeout.x86_64.0.1.0.0-7.el7 将被 安装
    ---> 软件包 libnetfilter_queue.x86_64.0.1.0.2-2.el7_2 将被 安装
    ---> 软件包 net-snmp-agent-libs.x86_64.1.5.7.2-49.el7_9.4 将被 安装
    --> 正在处理依赖关系 libsensors.so.4()(64bit),它被软件包 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 需要
    ---> 软件包 net-snmp-libs.x86_64.1.5.7.2-49.el7_9.4 将被 安装
    ---> 软件包 openstack-neutron-ml2.noarch.0.2014.2.3-1.el7 将被 安装
    --> 正在处理依赖关系 python-ncclient,它被软件包 openstack-neutron-ml2-2014.2.3-1.el7.noarch 需要
    ---> 软件包 python-jsonrpclib.noarch.0.0.1.3-1.el7 将被 安装
    --> 正在检查事务
    ---> 软件包 lm_sensors-libs.x86_64.0.3.4.0-8.20160601gitf9185e5.el7_9.1 将被 安装
    ---> 软件包 openstack-neutron-ml2.noarch.0.2014.2.3-1.el7 将被 安装
    --> 正在处理依赖关系 python-ncclient,它被软件包 openstack-neutron-ml2-2014.2.3-1.el7.noarch 需要
    --> 解决依赖关系完成
    错误:软件包:openstack-neutron-ml2-2014.2.3-1.el7.noarch (centotack-juno)
              需要:python-ncclient
     您可以尝试添加 --skip-broken 选项来解决该问题
     您可以尝试执行:rpm -Va --nofiles --nodigest
    [root@controller yum.repos.d]#

The Juno repository does not contain python-ncclient, so install it separately:

    yum install python-ncclient

Then run the installation again:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 [root@controller yum.repos.d]# yum install openstack-neutron openstack-neutron-ml2 which 已加载插件:fastestmirror, priorities Loading mirror speeds from cached hostfile * base: mirrors.aliyun.com * extras: mirrors.aliyun.com * updates: mirrors.aliyun.com 软件包 which-2.20-7.el7.x86_64 已安装并且是最新版本 正在解决依赖关系 --> 正在检查事务 ---> 软件包 openstack-neutron.noarch.0.2014.2.3-1.el7 将被 安装 --> 正在处理依赖关系 python-neutron = 2014.2.3-1.el7,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要 --> 正在处理依赖关系 radvd,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要 --> 正在处理依赖关系 keepalived,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要 --> 正在处理依赖关系 dnsmasq-utils,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要 --> 正在处理依赖关系 conntrack-tools,它被软件包 openstack-neutron-2014.2.3-1.el7.noarch 需要 ---> 软件包 openstack-neutron-ml2.noarch.0.2014.2.3-1.el7 将被 安装 --> 正在检查事务 ---> 软件包 conntrack-tools.x86_64.0.1.4.4-7.el7 将被 安装 --> 正在处理依赖关系 libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.1)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要 --> 正在处理依赖关系 libnetfilter_cttimeout.so.1(LIBNETFILTER_CTTIMEOUT_1.0)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要 --> 正在处理依赖关系 libnetfilter_cthelper.so.0(LIBNETFILTER_CTHELPER_1.0)(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要 --> 正在处理依赖关系 libnetfilter_queue.so.1()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要 --> 正在处理依赖关系 libnetfilter_cttimeout.so.1()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要 --> 正在处理依赖关系 libnetfilter_cthelper.so.0()(64bit),它被软件包 conntrack-tools-1.4.4-7.el7.x86_64 需要 ---> 软件包 dnsmasq-utils.x86_64.0.2.76-17.el7_9.3 将被 安装 
---> 软件包 keepalived.x86_64.0.1.3.5-19.el7 将被 安装 --> 正在处理依赖关系 libnetsnmpmibs.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要 --> 正在处理依赖关系 libnetsnmpagent.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要 --> 正在处理依赖关系 libnetsnmp.so.31()(64bit),它被软件包 keepalived-1.3.5-19.el7.x86_64 需要 ---> 软件包 python-neutron.noarch.0.2014.2.3-1.el7 将被 安装 --> 正在处理依赖关系 python-jsonrpclib,它被软件包 python-neutron-2014.2.3-1.el7.noarch 需要 ---> 软件包 radvd.x86_64.0.2.17-3.el7 将被 安装 --> 正在检查事务 ---> 软件包 libnetfilter_cthelper.x86_64.0.1.0.0-11.el7 将被 安装 ---> 软件包 libnetfilter_cttimeout.x86_64.0.1.0.0-7.el7 将被 安装 ---> 软件包 libnetfilter_queue.x86_64.0.1.0.2-2.el7_2 将被 安装 ---> 软件包 net-snmp-agent-libs.x86_64.1.5.7.2-49.el7_9.4 将被 安装 --> 正在处理依赖关系 libsensors.so.4()(64bit),它被软件包 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 需要 ---> 软件包 net-snmp-libs.x86_64.1.5.7.2-49.el7_9.4 将被 安装 ---> 软件包 python-jsonrpclib.noarch.0.0.1.3-2.el7 将被 安装 --> 正在检查事务 ---> 软件包 lm_sensors-libs.x86_64.0.3.4.0-8.20160601gitf9185e5.el7_9.1 将被 安装 --> 解决依赖关系完成 依赖关系解决 ===================================================================================================================================================================================== Package 架构 版本 源 大小 ===================================================================================================================================================================================== 正在安装: openstack-neutron noarch 2014.2.3-1.el7 centotack-juno 55 k openstack-neutron-ml2 noarch 2014.2.3-1.el7 centotack-juno 35 k 为依赖而安装: conntrack-tools x86_64 1.4.4-7.el7 base 187 k dnsmasq-utils x86_64 2.76-17.el7_9.3 updates 31 k keepalived x86_64 1.3.5-19.el7 base 332 k libnetfilter_cthelper x86_64 1.0.0-11.el7 base 18 k libnetfilter_cttimeout x86_64 1.0.0-7.el7 base 18 k libnetfilter_queue x86_64 1.0.2-2.el7_2 base 23 k lm_sensors-libs x86_64 3.4.0-8.20160601gitf9185e5.el7_9.1 updates 42 k net-snmp-agent-libs x86_64 1:5.7.2-49.el7_9.4 updates 707 k net-snmp-libs x86_64 
1:5.7.2-49.el7_9.4 updates 752 k python-jsonrpclib noarch 0.1.3-2.el7 epel 28 k python-neutron noarch 2014.2.3-1.el7 centotack-juno 2.4 M radvd x86_64 2.17-3.el7 base 94 k 事务概要 ===================================================================================================================================================================================== 安装 2 软件包 (+12 依赖软件包) 总下载量:4.7 M 安装大小:18 M Is this ok [y/d/N]: y Downloading packages: (1/14): conntrack-tools-1.4.4-7.el7.x86_64.rpm | 187 kB 00:00:00 (2/14): dnsmasq-utils-2.76-17.el7_9.3.x86_64.rpm | 31 kB 00:00:00 (3/14): libnetfilter_cthelper-1.0.0-11.el7.x86_64.rpm | 18 kB 00:00:00 (4/14): keepalived-1.3.5-19.el7.x86_64.rpm | 332 kB 00:00:00 (5/14): libnetfilter_cttimeout-1.0.0-7.el7.x86_64.rpm | 18 kB 00:00:00 (6/14): libnetfilter_queue-1.0.2-2.el7_2.x86_64.rpm | 23 kB 00:00:00 (7/14): lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7_9.1.x86_64.rpm | 42 kB 00:00:00 (8/14): net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64.rpm | 707 kB 00:00:00 (9/14): net-snmp-libs-5.7.2-49.el7_9.4.x86_64.rpm | 752 kB 00:00:00 (10/14): python-jsonrpclib-0.1.3-2.el7.noarch.rpm | 28 kB 00:00:00 (11/14): openstack-neutron-ml2-2014.2.3-1.el7.noarch.rpm | 35 kB 00:00:01 (12/14): radvd-2.17-3.el7.x86_64.rpm | 94 kB 00:00:00 (13/14): openstack-neutron-2014.2.3-1.el7.noarch.rpm | 55 kB 00:00:03 (14/14): python-neutron-2014.2.3-1.el7.noarch.rpm | 2.4 MB 00:00:39 ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- 总计 115 kB/s | 4.7 MB 00:00:41 Running transaction check Running transaction test Transaction test succeeded Running transaction 正在安装 : 1:net-snmp-libs-5.7.2-49.el7_9.4.x86_64 1/14 正在安装 : dnsmasq-utils-2.76-17.el7_9.3.x86_64 2/14 正在安装 : libnetfilter_cthelper-1.0.0-11.el7.x86_64 3/14 正在安装 : libnetfilter_cttimeout-1.0.0-7.el7.x86_64 4/14 正在安装 : lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7_9.1.x86_64 5/14 
正在安装 : 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 6/14 正在安装 : keepalived-1.3.5-19.el7.x86_64 7/14 正在安装 : libnetfilter_queue-1.0.2-2.el7_2.x86_64 8/14 正在安装 : conntrack-tools-1.4.4-7.el7.x86_64 9/14 正在安装 : python-jsonrpclib-0.1.3-2.el7.noarch 10/14 正在安装 : python-neutron-2014.2.3-1.el7.noarch 11/14 正在安装 : radvd-2.17-3.el7.x86_64 12/14 正在安装 : openstack-neutron-2014.2.3-1.el7.noarch 13/14 正在安装 : openstack-neutron-ml2-2014.2.3-1.el7.noarch 14/14 验证中 : 1:net-snmp-agent-libs-5.7.2-49.el7_9.4.x86_64 1/14 验证中 : keepalived-1.3.5-19.el7.x86_64 2/14 验证中 : openstack-neutron-2014.2.3-1.el7.noarch 3/14 验证中 : radvd-2.17-3.el7.x86_64 4/14 验证中 : python-jsonrpclib-0.1.3-2.el7.noarch 5/14 验证中 : libnetfilter_queue-1.0.2-2.el7_2.x86_64 6/14 验证中 : python-neutron-2014.2.3-1.el7.noarch 7/14 验证中 : 1:net-snmp-libs-5.7.2-49.el7_9.4.x86_64 8/14 验证中 : openstack-neutron-ml2-2014.2.3-1.el7.noarch 9/14 验证中 : lm_sensors-libs-3.4.0-8.20160601gitf9185e5.el7_9.1.x86_64 10/14 验证中 : conntrack-tools-1.4.4-7.el7.x86_64 11/14 验证中 : libnetfilter_cttimeout-1.0.0-7.el7.x86_64 12/14 验证中 : libnetfilter_cthelper-1.0.0-11.el7.x86_64 13/14 验证中 : dnsmasq-utils-2.76-17.el7_9.3.x86_64 14/14 已安装: openstack-neutron.noarch 0:2014.2.3-1.el7 openstack-neutron-ml2.noarch 0:2014.2.3-1.el7 作为依赖被安装: conntrack-tools.x86_64 0:1.4.4-7.el7 dnsmasq-utils.x86_64 0:2.76-17.el7_9.3 keepalived.x86_64 0:1.3.5-19.el7 libnetfilter_cthelper.x86_64 0:1.0.0-11.el7 libnetfilter_cttimeout.x86_64 0:1.0.0-7.el7 libnetfilter_queue.x86_64 0:1.0.2-2.el7_2 lm_sensors-libs.x86_64 0:3.4.0-8.20160601gitf9185e5.el7_9.1 net-snmp-agent-libs.x86_64 1:5.7.2-49.el7_9.4 net-snmp-libs.x86_64 1:5.7.2-49.el7_9.4 python-jsonrpclib.noarch 0:0.1.3-2.el7 python-neutron.noarch 0:2014.2.3-1.el7 radvd.x86_64 0:2.17-3.el7 完毕! [root@controller yum.repos.d]#

The installation succeeds.

Because a package was removed along the way, reboot the controller node and verify that the existing services are unaffected:

    [root@controller ~]# source admin-openrc.sh
    [root@controller ~]# nova service-list
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    | Id | Binary           | Host                | Zone     | Status  | State | Updated_at                 | Disabled Reason |
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    | 1  | nova-consoleauth | controller.nice.com | internal | enabled | up    | 2024-11-17T18:21:46.000000 | -               |
    | 2  | nova-cert        | controller.nice.com | internal | enabled | up    | 2024-11-17T18:21:46.000000 | -               |
    | 3  | nova-scheduler   | controller.nice.com | internal | enabled | up    | 2024-11-17T18:21:46.000000 | -               |
    | 4  | nova-conductor   | controller.nice.com | internal | enabled | up    | 2024-11-17T18:21:46.000000 | -               |
    | 5  | nova-compute     | computer1.nice.com  | nova     | enabled | up    | 2024-11-17T18:20:54.000000 | -               |
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    [root@controller ~]#

Configure the Networking service components (on the controller node)

Edit /etc/neutron/neutron.conf and complete the following steps.

In the [database] section, configure the database connection:

    [database]
    ...
    connection=mysql://neutron:neutron@controller.nice.com/neutron

In the [DEFAULT] section, configure RabbitMQ message queue access:

    [DEFAULT]
    ...
    rpc_backend=rabbit
    rabbit_host=controller.nice.com
    rabbit_password=guest

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

    [DEFAULT]
    ...
    auth_strategy=keystone
    ...
    [keystone_authtoken]
    ...
    auth_uri=http://controller.nice.com:5000/v2.0
    identity_uri=http://controller.nice.com:35357
    admin_tenant_name=service
    admin_user=neutron
    admin_password=neutron
    ...

In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:

    [DEFAULT]
    ...
    core_plugin=ml2
    service_plugins=router
    allow_overlapping_ips=True
    ...

In the [DEFAULT] section, configure Networking to notify the Compute service when the network topology changes. Replace SERVICE_TENANT_ID with the ID of the service tenant, as reported by `keystone tenant-get service`:

    [DEFAULT]
    ...
    notify_nova_on_port_status_changes=True
    notify_nova_on_port_data_changes=True
    nova_url=http://controller.nice.com:8774/v2
    nova_admin_auth_url=http://controller.nice.com:35357/v2.0
    nova_region_name=regionOne
    nova_admin_username=nova
    nova_admin_tenant_id=SERVICE_TENANT_ID
    nova_admin_password=nova
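The tenant ID has to be copied out of the `keystone tenant-get service` table by hand, which is easy to get wrong. A small filter can extract it instead. This is a sketch: `tenant_id_from_table` is a hypothetical helper, the Property/Value layout is assumed to match the other keystone tables in this guide, and the ID and description in the sample are placeholders rather than real values.

```shell
# Hypothetical helper: read a keystone Property/Value table on stdin and
# print the value of the "id" row.
tenant_id_from_table() {
    awk -F'|' '
        NF >= 4 {
            prop = $2; val = $3
            gsub(/[[:space:]]/, "", prop)
            gsub(/[[:space:]]/, "", val)
            if (prop == "id") print val
        }'
}

# Placeholder table; the id below is not a real tenant ID.
sample_tenant='+-------------+----------------------------------+
|   Property  |              Value               |
+-------------+----------------------------------+
| description |          Service Tenant          |
|   enabled   |               True               |
|      id     | 0123456789abcdef0123456789abcdef |
|     name    |             service              |
+-------------+----------------------------------+'

printf '%s\n' "$sample_tenant" | tenant_id_from_table
```

On the controller, `keystone tenant-get service | tenant_id_from_table` would print the value to paste into `nova_admin_tenant_id`.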

Configure the Modular Layer 2 (ML2) plug-in (on the controller node)

Edit /etc/neutron/plugins/ml2/ml2_conf.ini.

In the [ml2] section, enable the flat and generic routing encapsulation (GRE) network type drivers, GRE tenant networks, and the OVS mechanism driver:

    [ml2]
    ...
    type_drivers=flat,gre
    tenant_network_types=gre
    mechanism_drivers=openvswitch

In the [ml2_type_gre] section, configure the tunnel identifier range:

    [ml2_type_gre]
    ...
    tunnel_id_ranges=1:1000

In the [securitygroup] section, enable security groups, enable ipset, and configure the OVS firewall driver:

    [securitygroup]
    ...
    enable_security_group=True
    enable_ipset=True
    firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Configure the Compute service to use Neutron (on the controller node)

By default the Compute service uses legacy networking, so it must be reconfigured to use Neutron.

Edit /etc/nova/nova.conf. In the [DEFAULT] section, configure the network API and drivers:

    [DEFAULT]
    ...
    network_api_class=nova.network.neutronv2.api.API
    security_group_api=neutron
    linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver
    firewall_driver=nova.virt.firewall.NoopFirewallDriver

In the [neutron] section, configure the access parameters:

    [neutron]
    ...
    url=http://controller.nice.com:9696
    auth_strategy=keystone
    admin_auth_url=http://controller.nice.com:35357/v2.0
    admin_tenant_name=service
    admin_username=neutron
    admin_password=neutron

Finalize the configuration (on the controller node)

Create a symbolic link for the ML2 plug-in configuration file:

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database:

    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade juno" neutron

Restart the nova services on the controller node:

    systemctl restart openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service

Enable the Networking service at boot and start it:

    systemctl enable neutron-server.service
    systemctl start neutron-server.service

Verification

Source the admin credentials script, then list the Compute services and the loaded Neutron extensions:

    [root@controller ~]# source admin-openrc.sh
    [root@controller ~]# nova service-list
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    | Id | Binary           | Host                | Zone     | Status  | State | Updated_at                 | Disabled Reason |
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    | 1  | nova-consoleauth | controller.nice.com | internal | enabled | up    | 2024-11-17T19:28:29.000000 | -               |
    | 2  | nova-cert        | controller.nice.com | internal | enabled | up    | 2024-11-17T19:28:29.000000 | -               |
    | 3  | nova-scheduler   | controller.nice.com | internal | enabled | up    | 2024-11-17T19:28:25.000000 | -               |
    | 4  | nova-conductor   | controller.nice.com | internal | enabled | up    | 2024-11-17T19:28:25.000000 | -               |
    | 5  | nova-compute     | computer1.nice.com  | nova     | enabled | up    | 2024-11-17T19:28:23.000000 | -               |
    +----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
    [root@controller ~]# neutron ext-list
    +-----------------------+-----------------------------------------------+
    | alias                 | name                                          |
    +-----------------------+-----------------------------------------------+
    | security-group        | security-group                                |
    | l3_agent_scheduler    | L3 Agent Scheduler                            |
    | ext-gw-mode           | Neutron L3 Configurable external gateway mode |
    | binding               | Port Binding                                  |
    | provider              | Provider Network                              |
    | agent                 | agent                                         |
    | quotas                | Quota management support                      |
    | dhcp_agent_scheduler  | DHCP Agent Scheduler                          |
    | l3-ha                 | HA Router extension                           |
    | multi-provider        | Multi Provider Network                        |
    | external-net          | Neutron external network                      |
    | router                | Neutron L3 Router                             |
    | allowed-address-pairs | Allowed Address Pairs                         |
    | extraroute            | Neutron Extra Route                           |
    | extra_dhcp_opt        | Neutron Extra DHCP opts                       |
    | dvr                   | Distributed Virtual Router                    |
    +-----------------------+-----------------------------------------------+
    [root@controller ~]#
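The extension listing can also be checked by a script instead of by eye. The following is a minimal sketch, assuming the two-column table layout printed by `neutron ext-list` above; the function name `has_extensions` is hypothetical and not part of any OpenStack tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: has_extensions ALIAS...
# Reads `neutron ext-list` table output on stdin and succeeds only if every
# alias given as an argument appears in the first (alias) column.
has_extensions() {
    local listing ext missing=0
    # Split rows on "|" and keep the alias column with its padding removed.
    listing=$(awk -F'|' 'NF >= 3 { gsub(/ /, "", $2); print $2 }')
    for ext in "$@"; do
        if ! printf '%s\n' "$listing" | grep -qxF -- "$ext"; then
            echo "missing extension: $ext" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage on the controller (not executed here):
#   neutron ext-list | has_extensions router external-net security-group
```

The function only inspects text, so it can be tried against a saved copy of the output before being used on a live controller.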

Network node (neutron)

Initialization

Stop and disable the host firewall:

    systemctl stop firewalld
    systemctl disable firewalld

Disable SELinux. `setenforce 0` switches to permissive mode immediately; SELINUX=disabled in /etc/selinux/config keeps SELinux off after a reboot:

    [root@localhost ~]# setenforce 0
    [root@localhost ~]# cat /etc/selinux/config

    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of these two values:
    #     targeted - Targeted processes are protected,
    #     minimum - Modification of targeted policy. Only selected processes are protected.
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted
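The listing shows the resulting file but not the edit itself. A minimal sketch of making that change non-interactively, assuming GNU sed; the function name `disable_selinux_in` is hypothetical, and the path argument exists only so the edit can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given config file
# (default /etc/selinux/config). Comment lines and SELINUXTYPE= are left
# untouched because the pattern requires "SELINUX=" at the start of the line.
disable_selinux_in() {
    local cfg="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Usage as root (not executed here):
#   disable_selinux_in            # edits /etc/selinux/config
#   disable_selinux_in ./config   # edits a local copy instead
```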

IP address configuration

Edit the interface configuration files. Management network interface:

    [root@localhost ~]# cat /etc/sysconfig/network-scripts/ifcfg-eno16777736
    HWADDR=00:0C:29:E5:FA:B2
    TYPE=Ethernet
    BOOTPROTO=static
    IPADDR=192.168.222.6
    NETMASK=255.255.255.0
    DEFROUTE=yes
    PEERDNS=yes
    PEERROUTES=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_PEERDNS=yes
    IPV6_PEERROUTES=yes
    IPV6_FAILURE_FATAL=no
    NAME=eno16777736
    UUID=2cc54e58-3622-4e1c-bf28-3d388dfa12fb
    ONBOOT=yes

Application (instance) network interface:

    [root@localhost ~]# cat /etc/sysconfig/network-scripts/ifcfg-eno33554960
    HWADDR=00:0C:29:E5:FA:BC
    TYPE=Ethernet
    BOOTPROTO=static
    IPADDR=172.16.0.6
    NETMASK=255.255.255.0
    DEFROUTE=yes
    PEERDNS=yes
    PEERROUTES=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_PEERDNS=yes
    IPV6_PEERROUTES=yes
    IPV6_FAILURE_FATAL=no
    NAME=eno33554960
    UUID=e92ab93a-1485-441a-8782-08f03ba8ba40
    ONBOOT=yes
    [root@localhost ~]#

External network interface:

    [root@localhost ~]# cat /etc/sysconfig/network-scripts/ifcfg-eno50332184
    HWADDR=00:0C:29:E5:FA:C6
    TYPE=Ethernet
    BOOTPROTO=static
    IPADDR=100.100.100.11
    NETMASK=255.255.255.0
    DEFROUTE=yes
    PEERDNS=yes
    PEERROUTES=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_PEERDNS=yes
    IPV6_PEERROUTES=yes
    IPV6_FAILURE_FATAL=no
    NAME=eno50332184
    UUID=90655bc9-137f-495c-8b4a-6e6c3412fa1b
    ONBOOT=yes
    [root@localhost ~]#

After editing the files, restart the network service:

    systemctl restart network

Stop and disable the NetworkManager daemon:

    systemctl stop NetworkManager
    systemctl disable NetworkManager

Configure the yum repositories

Change /etc/yum.repos.d/CentOS-Base.repo to use the Aliyun mirrors:

    # CentOS-Base.repo
    #
    # update status of each mirror to pick mirrors that are updated to and
    # geographically close to the client. You should use this for CentOS updates
    # unless you are manually picking other mirrors.
    #
    # remarked out baseurl= line instead.
    #

    [base]
    name=CentOS-$releasever - Base - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # released updates
    [updates]
    name=CentOS-$releasever - Updates - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # additional packages that may be useful
    [extras]
    name=CentOS-$releasever - Extras - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/
    gpgcheck=1
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # additional packages that extend functionality of existing packages
    [centosplus]
    name=CentOS-$releasever - Plus - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/centosplus/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/centosplus/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/centosplus/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

    # contrib - packages by Centos Users
    [contrib]
    name=CentOS-$releasever - Contrib - mirrors.aliyun.com
    failovermethod=priority
    baseurl=http://mirrors.aliyun.com/centos/$releasever/contrib/$basearch/
            http://mirrors.aliyuncs.com/centos/$releasever/contrib/$basearch/
            http://mirrors.cloud.aliyuncs.com/centos/$releasever/contrib/$basearch/
    gpgcheck=1
    enabled=0
    gpgkey=http://mirrors.aliyun.com/centos/RPM-GPG-KEY-CentOS-7

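Set the host name:

    hostnamectl set-hostname network.nice.com

Install the OpenStack prerequisite packages

Install yum-plugin-priorities so that packages from a higher-priority repository are not overridden by packages from a lower-priority one:

    yum -y install yum-plugin-priorities

Install the EPEL repository (Aliyun mirror):

    wget -O /etc/yum.repos.d/epel-7.repo http://mirrors.aliyun.com/repo/epel-7.repo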

Update the operating system, then update the hosts file /etc/hosts so that every node can resolve the others by name:

    192.168.222.5 controller.nice.com
    192.168.222.6 network.nice.com
    192.168.222.10 computer1.nice.com
    192.168.222.20 block1.nice.com
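Because the same four entries are needed on every node, it can help to add them in a way that is safe to repeat. A minimal sketch, assuming GNU grep; the function name `add_host` and the optional file argument are hypothetical conveniences.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add_host IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME already occurs
# in it as a whole word, so running the step twice adds nothing new.
add_host() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Usage as root (not executed here):
#   add_host 192.168.222.5  controller.nice.com
#   add_host 192.168.222.6  network.nice.com
#   add_host 192.168.222.10 computer1.nice.com
#   add_host 192.168.222.20 block1.nice.com
```

The check is by name only: an existing entry with a different address is left alone rather than rewritten.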

Configure time synchronization

Add a cron job that synchronizes the clock with the controller every two minutes:

    [root@network ~]# crontab -l
    */2 * * * * /sbin/ntpdate -u controller.nice.com &>/dev/null
    [root@network ~]#
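The listing shows the finished crontab, not how the entry got there. One way to add it without opening an editor is sketched below; the function name `ensure_cron_line` is hypothetical. It works purely on text, reading the current crontab on stdin and writing the merged result to stdout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ensure_cron_line LINE
# stdin:  the current crontab (may be empty)
# stdout: the same crontab, with LINE appended only if it is not already there
ensure_cron_line() {
    local line="$1" current
    current=$(cat)
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    if ! printf '%s\n' "$current" | grep -qxF -- "$line"; then
        printf '%s\n' "$line"
    fi
}

# Usage as root (not executed here):
#   crontab -l 2>/dev/null \
#     | ensure_cron_line '*/2 * * * * /sbin/ntpdate -u controller.nice.com &>/dev/null' \
#     | crontab -
```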

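Enable IP forwarding

Edit /etc/sysctl.conf and add the following parameters. The network node routes traffic for instances, so forwarding is switched on and reverse-path filtering is switched off:

    net.ipv4.ip_forward=1
    net.ipv4.conf.all.rp_filter=0
    net.ipv4.conf.default.rp_filter=0

Apply the parameters. The original does not show the command for this step; `sysctl -p` reloads /etc/sysctl.conf.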
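The sysctl step can be scripted so that re-running it does not leave duplicate lines in the file. A minimal sketch, assuming GNU sed and grep; the function name `set_sysctl` and its optional file argument are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set_sysctl KEY VALUE [FILE]
# Rewrites an existing "KEY = ..." line in FILE (default /etc/sysctl.conf)
# or appends "KEY=VALUE" if the key is not set yet.
set_sysctl() {
    local key="$1" value="$2" file="${3:-/etc/sysctl.conf}" pat
    # Escape the dots in the key so they match literally in the regex.
    pat=$(printf '%s' "$key" | sed 's/\./\\./g')
    if grep -q "^[[:space:]]*$pat[[:space:]]*=" "$file" 2>/dev/null; then
        sed -i "s|^[[:space:]]*$pat[[:space:]]*=.*|$key=$value|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Usage as root on the network node (not executed here):
#   set_sysctl net.ipv4.ip_forward 1
#   set_sysctl net.ipv4.conf.all.rp_filter 0
#   set_sysctl net.ipv4.conf.default.rp_filter 0
#   sysctl -p
```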

Install the Networking components (network node):

    yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch

Configure the common Networking components (network node)

Edit /etc/neutron/neutron.conf.

In the [database] section, comment out any connection option; the network node does not access the database directly.

In the [DEFAULT] section, configure RabbitMQ message queue access:

    [DEFAULT]
    ...
    rpc_backend=rabbit
    rabbit_host=controller.nice.com
    rabbit_password=guest

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

    [DEFAULT]
    ...
    auth_strategy=keystone
    ...
    [keystone_authtoken]
    ...
    auth_uri=http://controller.nice.com:5000/v2.0
    identity_uri=http://controller.nice.com:35357
    admin_tenant_name=service
    admin_user=neutron
    admin_password=neutron
    ...

In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:

    [DEFAULT]
    ...
    core_plugin=ml2
    service_plugins=router
    allow_overlapping_ips=True
    ...
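These edits are all of the form "set this key in this section", which can be scripted rather than typed by hand. The sketch below uses only awk; the function name `ini_set` is hypothetical, and it assumes section headers are written exactly as `[name]` at the start of a line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ini_set FILE SECTION KEY VALUE
# Replaces KEY inside [SECTION] if it is set there, otherwise adds it at the
# end of that section; appends the section if the file does not have it.
# Commented-out lines such as "#key=..." are left as they are.
ini_set() {
    local file="$1" section="$2" key="$3" value="$4" out
    out=$(mktemp)
    awk -v section="$section" -v key="$key" -v value="$value" '
        function emit_pending() {
            if (in_sec && !done) { print key "=" value; done = 1 }
        }
        /^\[.*\]/ {
            emit_pending()                      # leaving the target section
            in_sec = ($0 == ("[" section "]"))
            if (in_sec) seen = 1
            print; next
        }
        in_sec && !done && ($0 ~ ("^[ \t]*" key "[ \t]*=")) {
            print key "=" value; done = 1; next
        }
        { print }
        END {
            emit_pending()
            if (!seen) { print "[" section "]"; print key "=" value }
        }
    ' "$file" > "$out" && cat "$out" > "$file"
    rm -f "$out"
}

# Usage as root (not executed here):
#   ini_set /etc/neutron/neutron.conf DEFAULT rpc_backend rabbit
#   ini_set /etc/neutron/neutron.conf DEFAULT core_plugin ml2
#   ini_set /etc/neutron/neutron.conf keystone_authtoken admin_user neutron
```

Writing the result back with `cat` rather than `mv` keeps the ownership and permissions of the original file.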

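Configure the Modular Layer 2 (ML2) plug-in (network node)

Edit /etc/neutron/plugins/ml2/ml2_conf.ini.

In the [ml2] section, enable the flat and generic routing encapsulation (GRE) network type drivers, GRE tenant networks, and the OVS mechanism driver:

    [ml2]
    ...
    type_drivers=flat,gre
    tenant_network_types=gre
    mechanism_drivers=openvswitch

In the [ml2_type_flat] section, configure the external network (the option name is flat_networks, plural):

    [ml2_type_flat]
    ...
    flat_networks=external

In the [ml2_type_gre] section, configure the tunnel identifier range:

    [ml2_type_gre]
    ...
    tunnel_id_ranges=1:1000

In the [securitygroup] section, enable security groups, enable ipset, and configure the OVS iptables firewall driver:

    [securitygroup]
    ...
    enable_security_group=True
    enable_ipset=True
    firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

In the [ovs] section, configure the Open vSwitch (OVS) agent. If the section does not exist, append it to the end of the file. local_ip is this node's address on the instance (tunnel) network, 172.16.0.6 here; bridge_mappings maps the external network to the br-ex bridge:

    [ovs]
    ...
    local_ip=172.16.0.6
    tunnel_type=gre
    enable_tunneling=True
    bridge_mappings=external:br-ex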
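Option names in this file are easy to mistype, and a misspelled name is typically not reported as an error, so it is worth reading the values back after editing. A minimal sketch; the function name `ini_get` is hypothetical, and it assumes section headers are written exactly as `[name]`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ini_get FILE SECTION KEY
# Prints the value of KEY inside [SECTION] and returns 0, or prints nothing
# and returns 1 if the key is not set in that section.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^\[.*\]/ { in_sec = ($0 == ("[" section "]")); next }
        in_sec && ($0 ~ ("^[ \t]*" key "[ \t]*=")) {
            sub(/^[^=]*=[ \t]*/, "")   # drop everything up to the "="
            print; found = 1; exit
        }
        END { exit found ? 0 : 1 }
    ' "$1"
}

# Usage on the network node (not executed here):
#   ini_get /etc/neutron/plugins/ml2/ml2_conf.ini ovs bridge_mappings \
#     || echo "bridge_mappings is not set in [ovs]" >&2
```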

Configure the Layer-3 (L3) agent (network node)

Edit /etc/neutron/l3_agent.ini. In the [DEFAULT] section, configure the interface driver, enable network namespaces, and set the external network bridge:

    [DEFAULT]
    ...
    interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver
    use_namespaces=True
    external_network_bridge=br-ex

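Configure the DHCP agent (network node)

Edit /etc/neutron/dhcp_agent.ini. In the [DEFAULT] section, configure the drivers and enable namespaces:

    [DEFAULT]
    ...
    interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver
    dhcp_driver=neutron.agent.linux.dhcp.Dnsmasq
    use_namespaces=True

(Note: the following may be necessary in VMware virtual machines.) Configure a DHCP option that lowers the instance MTU to 1454 bytes, which leaves room for the GRE encapsulation and improves network performance.

Edit /etc/neutron/dhcp_agent.ini. In the [DEFAULT] section, point dnsmasq at a custom configuration file:

    [DEFAULT]
    ...
    dnsmasq_config_file=/etc/neutron/dnsmasq-neutron.conf

Create and edit /etc/neutron/dnsmasq-neutron.conf. Enable the DHCP MTU option (option 26) and set it to 1454 bytes:

    dhcp-option-force=26,1454
    user=neutron
    group=neutron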

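Stop any existing dnsmasq processes (for example with `pkill dnsmasq`).

Configure the metadata agent (network node)

The original does not name the file for the next three steps. The options shown are metadata agent options, which the Juno install guide places in /etc/neutron/metadata_agent.ini.

In the [DEFAULT] section, configure the access parameters:

    [DEFAULT]
    ...
    auth_url=http://controller.nice.com:5000/v2.0
    auth_region=regionOne
    admin_tenant_name=service
    admin_user=neutron
    admin_password=neutron

In the [DEFAULT] section, configure the metadata host:

    [DEFAULT]
    ...
    nova_metadata_ip=controller.nice.com

In the [DEFAULT] section, configure the metadata proxy shared secret:

    [DEFAULT]
    ...
    metadata_proxy_shared_secret=woshianhao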

On the controller node, edit /etc/nova/nova.conf. In the [neutron] section, enable the metadata proxy and set the same shared secret as on the network node:

    [neutron]
    ...
    service_metadata_proxy=True
    metadata_proxy_shared_secret=woshianhao
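The value `woshianhao` is only an example; what matters is that nova.conf on the controller and the metadata agent on the network node carry the same string. For anything beyond a test lab a random value is preferable. A minimal sketch of generating one from /dev/urandom; the function name `gen_secret` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: gen_secret [NBYTES]
# Prints NBYTES random bytes (default 16) as lowercase hex on one line.
gen_secret() {
    head -c "${1:-16}" /dev/urandom | od -An -tx1 | tr -d ' \n'
    echo
}

# Usage (not executed here): generate once, then paste the same value into
# metadata_proxy_shared_secret on both the controller and the network node.
#   gen_secret
```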

On the controller node, restart the Compute API service:

    systemctl restart openstack-nova-api.service

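Configure the Open vSwitch (OVS) service (network node)

Start the OVS service and enable it at boot:

    systemctl enable openvswitch.service
    systemctl start openvswitch.service

Add the external bridge, br-ex. The original does not show the command for this step; with Open vSwitch it is normally `ovs-vsctl add-br br-ex`.

Add a port to the external bridge that connects to the external physical network, using the external interface configured earlier:

    ovs-vsctl add-port br-ex eno50332184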

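Finalize the installation (network node)

The Networking service initialization scripts expect /etc/neutron/plugin.ini to be a symbolic link to the ML2 configuration file. Create the link, back up the OVS agent unit file, and change the unit file to reference plugin.ini:

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    cp /usr/lib/systemd/system/neutron-openvswitch-agent.service /usr/lib/systemd/system/neutron-openvswitch-agent.service.orig
    sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /usr/lib/systemd/system/neutron-openvswitch-agent.service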
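The sed expression uses a comma as its delimiter so that the slashes in the path need no escaping. The sketch below applies the same expression to a sample line to show its effect; the sample `ExecStart` line is an assumption for illustration, and the unit file shipped with the package may carry different arguments.

```shell
#!/usr/bin/env bash
# Sample line (assumed for illustration, not copied from the real unit file).
sample='ExecStart=/usr/bin/neutron-openvswitch-agent --config-file /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini'

# Same substitution as in the step above, applied to stdin instead of in place.
rewritten=$(printf '%s\n' "$sample" \
    | sed 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g')

printf '%s\n' "$rewritten"
# prints: ExecStart=/usr/bin/neutron-openvswitch-agent --config-file /etc/neutron/plugin.ini
```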

Start the Networking services and enable them at boot:

    systemctl enable neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-ovs-cleanup.service
    systemctl start neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-ovs-cleanup.service

Note that the official install guide enables neutron-ovs-cleanup.service but advises against starting it explicitly.

Verification (on the controller node)

Source the admin credentials script (`source admin-openrc.sh`), then list the Neutron agents (`neutron agent-list`) to confirm that they started successfully. All four agents on network.nice.com should show `:-)` in the alive column:

    +--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+
    | id                                   | agent_type         | host             | alive | admin_state_up | binary                    |
    +--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+
    | 61a22180-6f83-492e-b164-6d9d8e30a8e1 | Open vSwitch agent | network.nice.com | :-)   | True           | neutron-openvswitch-agent |
    | a8d3ab3d-0164-4d20-a398-bd3a294afb33 | DHCP agent         | network.nice.com | :-)   | True           | neutron-dhcp-agent        |
    | b81a7972-76ed-4e34-a560-e9d2d9211858 | Metadata agent     | network.nice.com | :-)   | True           | neutron-metadata-agent    |
    | cb6ca35c-7f17-4571-bae7-c82d87905ecb | L3 agent           | network.nice.com | :-)   | True           | neutron-l3-agent          |
    +--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+
    [root@controller ~]#
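The agent check can be turned into a pass/fail test. A minimal sketch, assuming the six-column table layout shown above with the alive flag in the fourth column; the function name `all_agents_alive` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads agent-list table output on stdin and succeeds
# only if at least one agent row exists and every row shows ":-)" as alive.
all_agents_alive() {
    awk -F'|' '
        # Data rows carry a UUID in the first column; header and borders do not.
        $2 ~ /^ *[0-9a-f]+-[0-9a-f-]+ *$/ {
            rows++
            alive = $5
            gsub(/ /, "", alive)
            if (alive != ":-)") { bad++; print "agent not alive:" $3 }
        }
        END { exit (rows == 0 || bad > 0) ? 1 : 0 }
    '
}

# Usage on the controller (not executed here):
#   neutron agent-list | all_agents_alive && echo "all agents alive"
```

An empty listing is treated as a failure, since no rows usually means the agents never registered.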

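Install and configure the compute1 node

Configure prerequisites (compute1 node)

Edit /etc/sysctl.conf so that it contains the following parameters:

    net.ipv4.conf.all.rp_filter=0
    net.ipv4.conf.default.rp_filter=0

Apply the /etc/sysctl.conf settings. The original does not show the command for this step; `sysctl -p` reloads the file.

Install the Networking components (compute1 node):

    yum install openstack-neutron-ml2 openstack-neutron-openvswitch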

Configure the common Networking components (compute1 node)

Edit /etc/neutron/neutron.conf.

In the [database] section, comment out any connection option; the compute1 node does not access the database directly.

In the [DEFAULT] section, configure RabbitMQ message queue access:

    [DEFAULT]
    ...
    rpc_backend=rabbit
    rabbit_host=controller.nice.com
    rabbit_password=guest

In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:

    [DEFAULT]
    ...
    auth_strategy=keystone
    ...
    [keystone_authtoken]
    ...
    auth_uri=http://controller.nice.com:5000/v2.0
    identity_uri=http://controller.nice.com:35357
    admin_tenant_name=service
    admin_user=neutron
    admin_password=neutron
    ...

In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:

    [DEFAULT]
    ...
    core_plugin=ml2
    service_plugins=router
    allow_overlapping_ips=True
    ...

Configure the Modular Layer 2 (ML2) plug-in (compute1 node)

Edit /etc/neutron/plugins/ml2/ml2_conf.ini.

In the [ml2] section, enable the flat and generic routing encapsulation (GRE) network type drivers, GRE tenant networks, and the OVS mechanism driver:

    [ml2]
    ...
    type_drivers=flat,gre
    tenant_network_types=gre
    mechanism_drivers=openvswitch

In the [ml2_type_gre] section, configure the tunnel identifier range:

    [ml2_type_gre]
    ...
    tunnel_id_ranges=1:1000

In the [securitygroup] section, enable security groups, enable ipset, and configure the OVS iptables firewall driver:

    [securitygroup]
    ...
    enable_security_group=True
    enable_ipset=True
    firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

In the [ovs] section, configure the Open vSwitch (OVS) agent. If the section does not exist, append it to the end of the file. local_ip is this node's address on the instance (tunnel) network, 172.16.0.10 here:

    [ovs]
    ...
    local_ip=172.16.0.10
    tunnel_type=gre
    enable_tunneling=True

Start the Open vSwitch (OVS) service (compute1 node)

Start the service and enable it at boot:

    systemctl enable openvswitch.service
    systemctl start openvswitch.service

Configure the Compute service to use Neutron (compute1 node)

Edit /etc/nova/nova.conf.

In the [DEFAULT] section, configure the APIs and drivers. The Nova firewall is set to the no-op driver because security groups are handled by Neutron:

    [DEFAULT]
    ...
    network_api_class=nova.network.neutronv2.api.API
    security_group_api=neutron
    linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver
    firewall_driver=nova.virt.firewall.NoopFirewallDriver

In the [neutron] section, configure the access parameters:

    [neutron]
    ...
    url=http://controller.nice.com:9696
    auth_strategy=keystone
    admin_auth_url=http://controller.nice.com:35357/v2.0
    admin_tenant_name=service
    admin_username=neutron
    admin_password=neutron

Finalize the installation (compute1 node)

Create the symbolic link expected by the Networking service initialization scripts, back up the OVS agent unit file, and change the unit file to reference plugin.ini:

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    cp /usr/lib/systemd/system/neutron-openvswitch-agent.service /usr/lib/systemd/system/neutron-openvswitch-agent.service.orig
    sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /usr/lib/systemd/system/neutron-openvswitch-agent.service

Restart the Compute service:

    systemctl restart openstack-nova-compute.service

Start the OVS agent and enable it at boot:

    systemctl enable neutron-openvswitch-agent.service
    systemctl start neutron-openvswitch-agent.service