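Thoughts

My Tencent Cloud server recently expired. Should I renew it?

A 4C/8G lightweight server costs about 150 yuan per month, roughly 1,800 yuan per year. All that buys is a hassle-free service, and after a year I own nothing…

The alternative is to spend the money on a budget machine built from salvaged parts. These are the second-hand prices I found on Taobao: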

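| Part | Spec | Price | Notes |
|---|---|---|---|
| CPU | G4960T/4C | 35 yuan | |
| Memory | 8G | 70 yuan | |
| Motherboard | compatible | 160 yuan | |
| Disk | 128G | 50 yuan | M.2, Samsung |
| Disk | 500G | 170 yuan | SSD |
| Disk | 1T | 100 yuan | HDD |
| Cooler | compatible | 50 yuan | new |
| Case | compatible | 160 yuan | new |
| PSU | 650W | | idle spare |
| Graphics card | 1060 | | idle spare |
| Cables/screws | compatible | 20 yuan | |
| Total | - | 815 yuan | |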

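Normal load is very low. Assuming a power draw of 100 W, one day costs 0.1 kW × 24 h × 0.6 yuan/kWh ≈ 1.44 yuan, which is about 525 yuan per year.

Measured idle draw is actually under 40 W, so the electricity bill should be at most about 300 yuan per year. (The 1060 graphics card alone accounts for about 20 W; with the card removed the whole machine draws about 20 W.)

After a year I still own the hardware. In the extreme case, even if I sell it second-hand, it will not have depreciated much.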
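The estimate above can be reproduced with a small helper. This is only a sketch: the function name `yearly_power_cost` is made up for illustration, and the 0.6 yuan/kWh price is the figure used in the calculation above.

```shell
# Yearly electricity cost in yuan for a constant power draw.
# $1 = average draw in watts, $2 = price in yuan per kWh.
yearly_power_cost() {
    awk -v watts="$1" -v price="$2" \
        'BEGIN { printf "%.2f\n", watts / 1000 * 24 * 365 * price }'
}

yearly_power_cost 100 0.6   # pessimistic 100 W estimate
yearly_power_cost 40 0.6    # measured idle draw of roughly 40 W
```

Even with the pessimistic 100 W figure, hardware plus one year of electricity (815 + about 525 yuan) stays below the 1,800 yuan yearly rent.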

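So I decided to leave the cloud and switch to a physical machine.

If you need NAT traversal, a 99-yuan-per-year VPS works well as a relay.

The overall network layout then looks like this:

    terminal ------- Tencent Cloud VPS ------- home server
           public network         NAT traversal

So let's get to it. In the blink of an eye:

The parts were bought and brought home.

The machine was assembled.

Ubuntu 22 was installed.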

Switch the package mirror to the Tsinghua (TUNA) source

Edit /etc/apt/sources.list. The release codename in every line must match the installed system (check with lsb_release -cs): focal is Ubuntu 20.04, jammy is Ubuntu 22.04. The listing below uses focal.

    # Source mirrors are commented out by default to speed up apt update; uncomment them if needed
    deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
    # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal main restricted universe multiverse
    deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
    # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-updates main restricted universe multiverse
    deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse
    # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-backports main restricted universe multiverse

    # The security update source below covers both the official source and the mirror; switch by editing the comments if needed
    deb http://security.ubuntu.com/ubuntu/ focal-security main restricted universe multiverse
    # deb-src http://security.ubuntu.com/ubuntu/ focal-security main restricted universe multiverse

    # Pre-release source, not recommended
    # deb https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
    # # deb-src https://mirrors.tuna.tsinghua.edu.cn/ubuntu/ focal-proposed main restricted universe multiverse
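Because the codename has to match the installed release, it can help to generate the active lines instead of copying them. The following is a minimal sketch; the function name `tuna_sources_list` is hypothetical and only the four uncommented `deb` lines from the listing above are produced.

```shell
# Print a TUNA-mirror sources.list for the given Ubuntu codename.
# $1 = release codename, e.g. "focal" (20.04) or "jammy" (22.04).
tuna_sources_list() {
    codename="$1"
    mirror="https://mirrors.tuna.tsinghua.edu.cn/ubuntu/"
    for suite in "$codename" "$codename-updates" "$codename-backports"; do
        echo "deb $mirror $suite main restricted universe multiverse"
    done
    echo "deb http://security.ubuntu.com/ubuntu/ $codename-security main restricted universe multiverse"
}

# Preview for the running system; fall back to "focal" if lsb_release is missing.
tuna_sources_list "$(lsb_release -cs 2>/dev/null || echo focal)"
```

To apply it, back up /etc/apt/sources.list, write the output into that file as root, and run apt update.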

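With the package sources updated, let's start deploying the server.

Disk and directory planning

I currently have two data disks attached:

A 500G SSD, used as the application and data disk.

A 160G mechanical disk, used for logs, test applications, temporary cold data, the maven repository, the node repository and so on.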

Download resource planning

    Disk /dev/sdb: 476.96 GiB, 512110190592 bytes, 1000215216 sectors
    Disk model: ONDA A-48 512GB
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xd36f32b1

    Device     Boot Start        End    Sectors  Size Id Type
    /dev/sdb1  *     4096 1000214527 1000210432  477G  7 HPFS/NTFS/exFAT


    Disk /dev/sdc: 149.5 GiB, 160041885696 bytes, 312581808 sectors
    Disk model: ST9160314AS
    Units: sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disklabel type: dos
    Disk identifier: 0xab77bb4d

    Device     Boot Start       End   Sectors  Size Id Type
    /dev/sdc1  *       63 312576704 312576642  149G  7 HPFS/NTFS/exFAT

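500G NFS configuration

The 500G disk serves as NFS storage, so that both the home PC and Linux machines can access it.

Format the disk and mount it on a directory

    # create the mount point
    mkdir -p /mnt/NfsShareFolder
    # format the disk
    mkfs.ext4 /dev/sdb1
    # mount the disk
    mount /dev/sdb1 /mnt/NfsShareFolder
    # open up the permissions (chmod, not chown; done after mounting so that
    # it applies to the new filesystem rather than the empty mount point)
    chmod 777 /mnt/NfsShareFolder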

    root@lqz-mdz:~# mkfs.ext4 /dev/sdb1
    mke2fs 1.45.5 (07-Jan-2020)
    /dev/sdb1 contains a ntfs file system labelled '系统'
    Proceed anyway? (y,N) y
    Discarding device blocks: done
    Creating filesystem with 125026304 4k blocks and 31260672 inodes
    Filesystem UUID: 67688c10-7997-41b7-9d1d-000b06737028
    Superblock backups stored on blocks:
            32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
            4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,
            102400000

    Allocating group tables: done
    Writing inode tables: done
    Creating journal (262144 blocks): done
    Writing superblocks and filesystem accounting information: done

    root@lqz-mdz:~# mount /dev/sdb1 /mnt/NfsShareFolder
    root@lqz-mdz:~# df -h
    Filesystem                 Size  Used Avail Use% Mounted on
    udev                       3.8G     0  3.8G   0% /dev
    tmpfs                      781M  1.9M  779M   1% /run
    /dev/mapper/vgubuntu-root  116G  6.6G  103G   7% /
    tmpfs                      3.9G     0  3.9G   0% /dev/shm
    tmpfs                      5.0M  4.0K  5.0M   1% /run/lock
    tmpfs                      3.9G     0  3.9G   0% /sys/fs/cgroup
    /dev/loop0                  64M   64M     0 100% /snap/core20/1828
    /dev/loop1                 128K  128K     0 100% /snap/bare/5
    /dev/loop2                 347M  347M     0 100% /snap/gnome-3-38-2004/119
    /dev/loop3                  50M   50M     0 100% /snap/snapd/18357
    /dev/loop4                  46M   46M     0 100% /snap/snap-store/638
    /dev/loop5                  92M   92M     0 100% /snap/gtk-common-themes/1535
    /dev/sda1                  511M  6.1M  505M   2% /boot/efi
    tmpfs                      781M   24K  781M   1% /run/user/126
    tmpfs                      781M  8.0K  781M   1% /run/user/1000
    /dev/sdb1                  469G   28K  445G   1% /mnt/NfsShareFolder

Mount the disk automatically at boot

    lqz@lqz-mdz:~$ blkid /dev/sdb1
    /dev/sdb1: UUID="67688c10-7997-41b7-9d1d-000b06737028" TYPE="ext4" PARTUUID="d36f32b1-01"
    lqz@lqz-mdz:~$

    root@lqz-mdz:~# vi /etc/fstab
    # /etc/fstab: static file system information.
    #
    # Use 'blkid' to print the universally unique identifier for a
    # device; this may be used with UUID= as a more robust way to name devices
    # that works even if disks are added and removed. See fstab(5).
    #
    # <file system> <mount point>   <type>  <options>       <dump>  <pass>
    /dev/mapper/vgubuntu-root /               ext4    errors=remount-ro 0       1
    # /boot/efi was on /dev/sda1 during installation
    UUID=75E5-6D16  /boot/efi       vfat    umask=0077      0       1
    /dev/mapper/vgubuntu-swap_1 none            swap    sw              0       0
    # 500g ssd
    UUID=67688c10-7997-41b7-9d1d-000b06737028 /mnt/NfsShareFolder ext4 defaults 0 2

    # Test and verify: mount -a mounts every filesystem listed in fstab
    root@lqz-mdz:~# umount /mnt/NfsShareFolder
    root@lqz-mdz:~# df -h
    Filesystem                 Size  Used Avail Use% Mounted on
    udev                       3.8G     0  3.8G   0% /dev
    tmpfs                      781M  1.9M  779M   1% /run
    /dev/mapper/vgubuntu-root  116G  6.6G  103G   7% /
    tmpfs                      3.9G     0  3.9G   0% /dev/shm
    tmpfs                      5.0M  4.0K  5.0M   1% /run/lock
    tmpfs                      3.9G     0  3.9G   0% /sys/fs/cgroup
    /dev/loop0                  64M   64M     0 100% /snap/core20/1828
    /dev/loop1                 128K  128K     0 100% /snap/bare/5
    /dev/loop2                 347M  347M     0 100% /snap/gnome-3-38-2004/119
    /dev/loop3                  50M   50M     0 100% /snap/snapd/18357
    /dev/loop4                  46M   46M     0 100% /snap/snap-store/638
    /dev/loop5                  92M   92M     0 100% /snap/gtk-common-themes/1535
    /dev/sda1                  511M  6.1M  505M   2% /boot/efi
    tmpfs                      781M   24K  781M   1% /run/user/126
    tmpfs                      781M  8.0K  781M   1% /run/user/1000
    root@lqz-mdz:~# mount -a
    root@lqz-mdz:~# df -h
    Filesystem                 Size  Used Avail Use% Mounted on
    udev                       3.8G     0  3.8G   0% /dev
    tmpfs                      781M  1.9M  779M   1% /run
    /dev/mapper/vgubuntu-root  116G  6.6G  103G   7% /
    tmpfs                      3.9G     0  3.9G   0% /dev/shm
    tmpfs                      5.0M  4.0K  5.0M   1% /run/lock
    tmpfs                      3.9G     0  3.9G   0% /sys/fs/cgroup
    /dev/loop0                  64M   64M     0 100% /snap/core20/1828
    /dev/loop1                 128K  128K     0 100% /snap/bare/5
    /dev/loop2                 347M  347M     0 100% /snap/gnome-3-38-2004/119
    /dev/loop3                  50M   50M     0 100% /snap/snapd/18357
    /dev/loop4                  46M   46M     0 100% /snap/snap-store/638
    /dev/loop5                  92M   92M     0 100% /snap/gtk-common-themes/1535
    /dev/sda1                  511M  6.1M  505M   2% /boot/efi
    tmpfs                      781M   24K  781M   1% /run/user/126
    tmpfs                      781M  8.0K  781M   1% /run/user/1000
    /dev/sdb1                  469G   28K  445G   1% /mnt/NfsShareFolder
    root@lqz-mdz:~#

Install the NFS service

    apt install nfs-kernel-server

Edit /etc/exports to grant access (replace the subnet with that of your own LAN)

    /mnt/NfsShareFolder 192.168.1.0/24(rw,sync,no_subtree_check)

    root@lqz-mdz:~# systemctl status nfs-server
    ● nfs-server.service - NFS server and services
         Loaded: loaded (/lib/systemd/system/nfs-server.service; enabled; vendor preset: enabled)
         Active: active (exited) since Sat 2024-06-15 21:29:33 CST; 48s ago
       Main PID: 3665 (code=exited, status=0/SUCCESS)
          Tasks: 0 (limit: 9266)
         Memory: 0B
         CGroup: /system.slice/nfs-server.service

    Jun 15 21:29:32 lqz-mdz systemd[1]: Starting NFS server and services...
    Jun 15 21:29:33 lqz-mdz systemd[1]: Finished NFS server and services.
    root@lqz-mdz:~#

Re-export all filesystems defined for NFS

    root@lqz-mdz:~# exportfs -arv
    exporting 192.168.1.0/24:/mnt/NfsShareFolder
    root@lqz-mdz:~#

Check that the shared directory is visible

    root@lqz-mdz:~# showmount -e 192.168.3.120
    Export list for 192.168.3.120:
    /mnt/NfsShareFolder 192.168.3.0/24
    root@lqz-mdz:~#

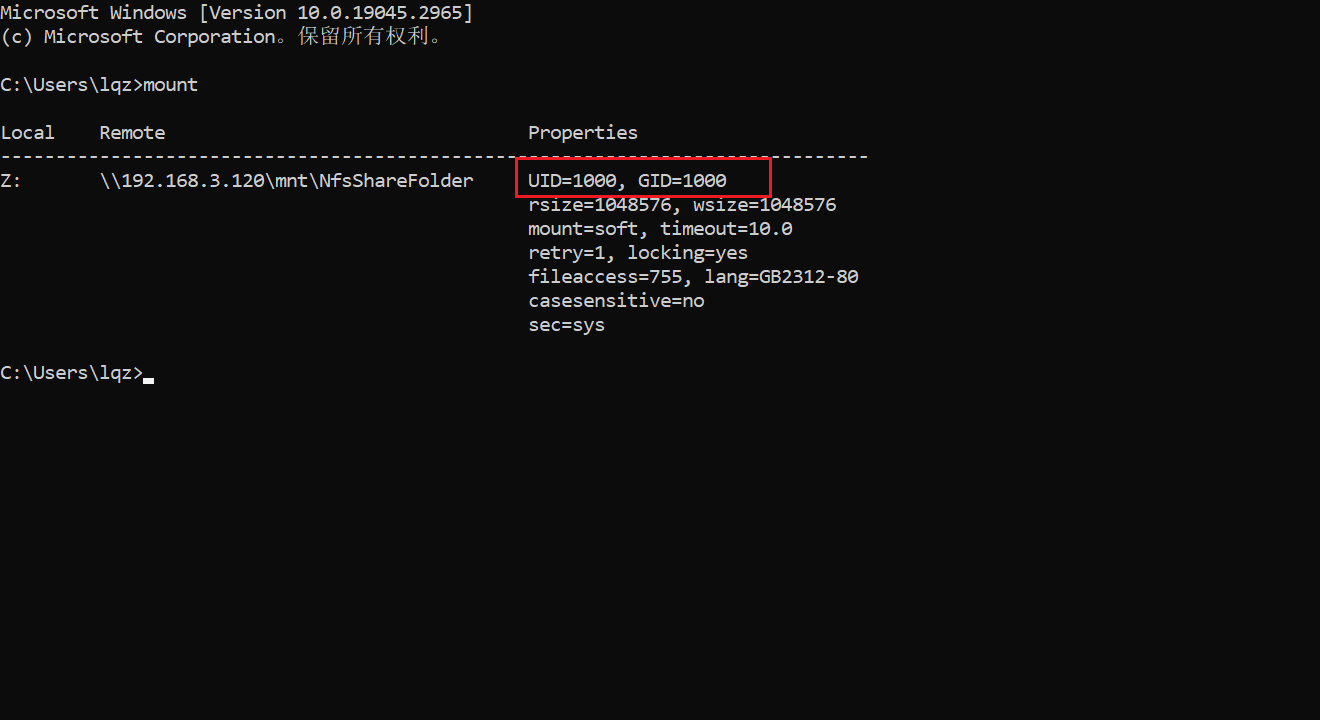

Verify from Windows

Map a network drive with the following address:

    \\192.168.1.120\mnt\NfsShareFolder
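Linux clients can mount the same export. The sketch below wraps the steps in a function; the name `mount_nfs_share` and the local mount point `/mnt/nfs` are assumptions for illustration, and on Ubuntu the client needs the nfs-common package.

```shell
# Mount an NFS export on a Linux client and show the result.
# $1 = server address, $2 = exported path, $3 = local mount point.
mount_nfs_share() {
    sudo mkdir -p "$3" &&
    sudo mount -t nfs "$1:$2" "$3" &&
    df -h "$3"
}

# Example call, commented out because it needs root and a reachable server:
# mount_nfs_share 192.168.3.120 /mnt/NfsShareFolder /mnt/nfs
```

The client address has to fall inside the subnet listed in /etc/exports, otherwise the server refuses the mount.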

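Problems encountered while using NFS

Command for batch-exporting images from another server. It prints one docker save command per image, with every "/" in the repository name replaced by "_" in the file name:

    docker images | grep -v REPOSITORY | sort -k1 | awk '{ n=split($1,attr_1,"/"); image=attr_1[1]; for(i=2;i<=n;i++){image=image "_" attr_1[i]}; print "docker save " $1 ":" $2 " -o " image "_" $2 ".tar"}'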
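A more readable variant of the same idea, plus the matching import side, might look like the sketch below. The function names are hypothetical. Unlike the awk one-liner, this version also turns ":" into "_" in the file name, which only matters for registries that carry a port number.

```shell
# Turn "repository:tag" lines on stdin into "docker save" commands.
# "/" and ":" are replaced by "_" to get a usable file name.
gen_save_cmds() {
    while IFS= read -r ref; do
        [ -n "$ref" ] || continue
        file=$(printf '%s' "$ref" | tr '/:' '__').tar
        echo "docker save $ref -o $file"
    done
}

# Print one "docker load" command per .tar file in directory $1.
gen_load_cmds() {
    for f in "$1"/*.tar; do
        if [ -e "$f" ]; then
            echo "docker load -i $f"
        fi
    done
}

printf '%s\n' 'nginx:1.23.2-alpine' 'wurstmeister/kafka:latest' | gen_save_cmds
```

On the source server the input would come from `docker images --format '{{.Repository}}:{{.Tag}}'`. Both functions only print commands, so the list can be reviewed before piping it to sh.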

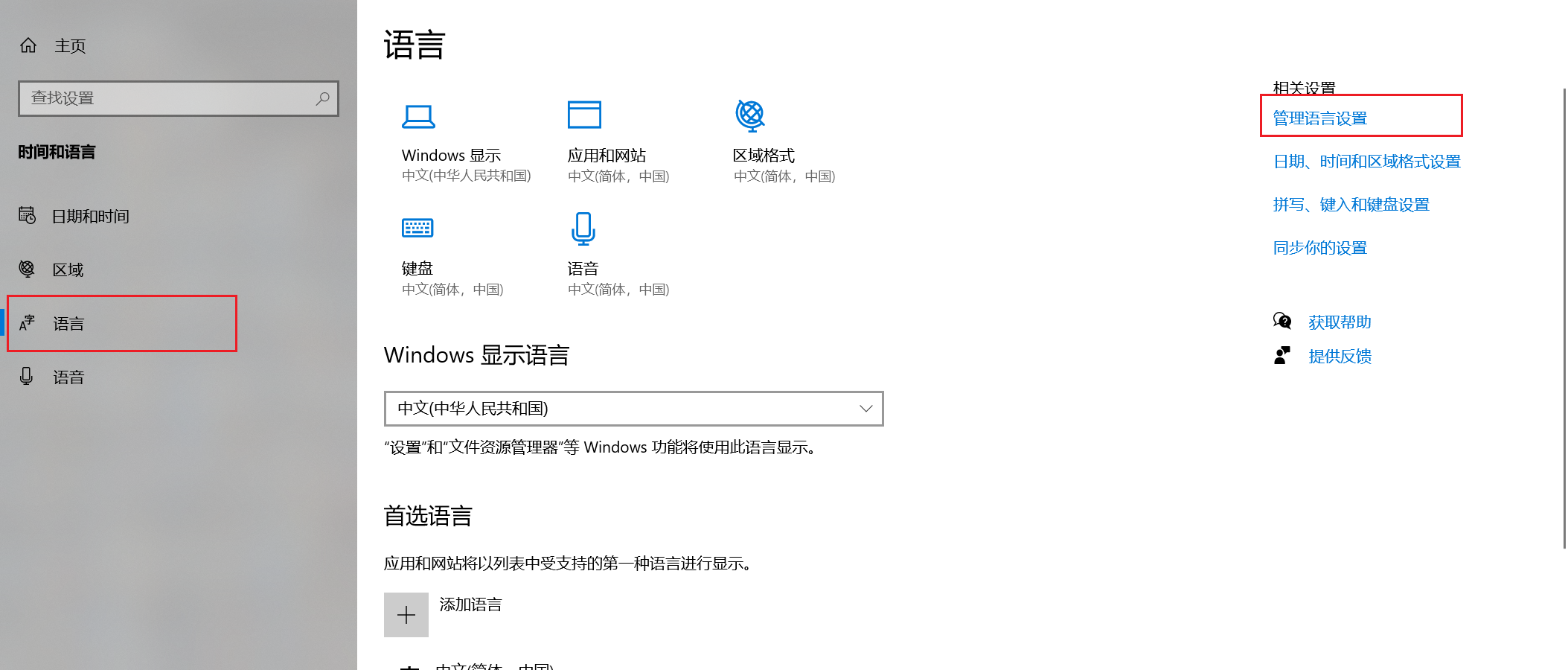

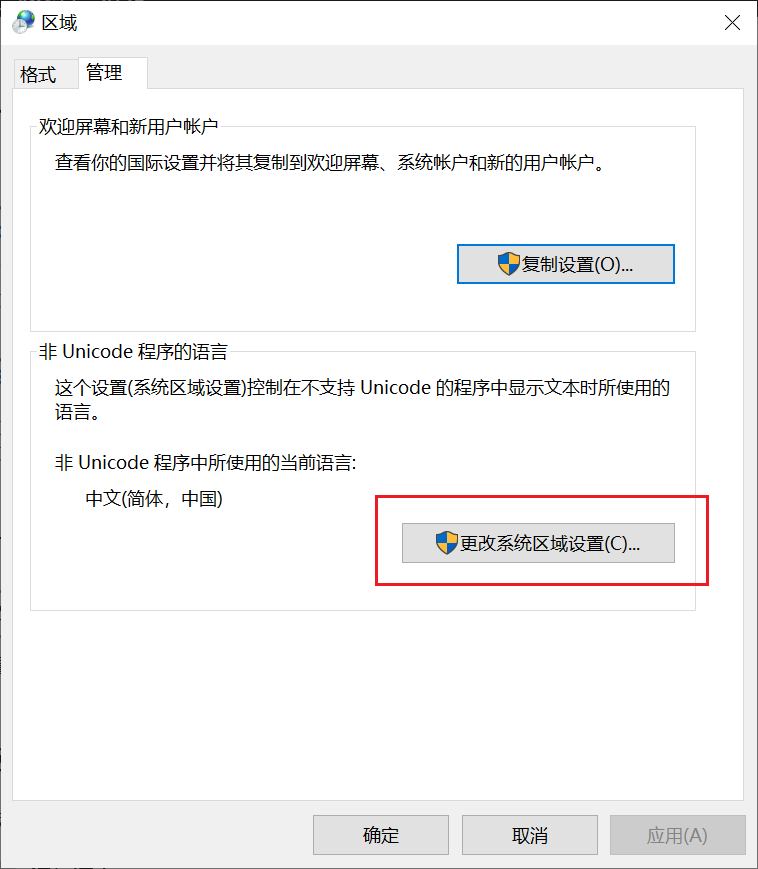

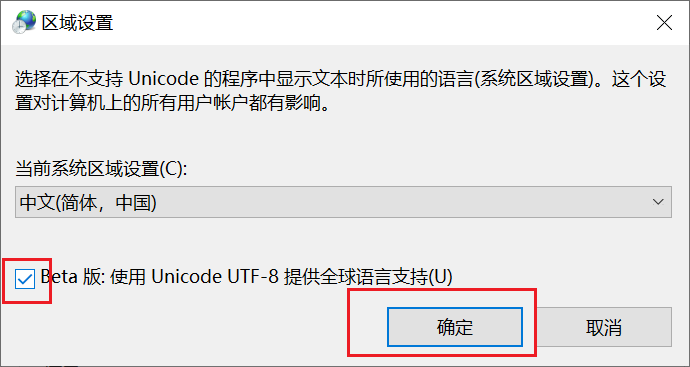

Enable UTF-8 support on Windows

Open Settings.

Error when syncing with git over NFS

    fatal: detected dubious ownership in repository at '//192.168.3.120/mnt/NfsShareFolder/app/gitlab_jenkins/nginx/myhtml/picture/MyPicture'
    To add an exception for this directory, call:

            git config --global --add safe.directory '%(prefix)///192.168.3.120/mnt/NfsShareFolder/app/gitlab_jenkins/nginx/myhtml/picture/MyPicture'
    Set the environment variable GIT_TEST_DEBUG_UNSAFE_DIRECTORIES=true and run
    again for more information.

Follow the hint and run the command on Windows to add the directory to git's trusted directories:

    git config --global --add safe.directory '%(prefix)///192.168.3.120/mnt/NfsShareFolder/app/gitlab_jenkins/nginx/myhtml/picture/MyPicture'

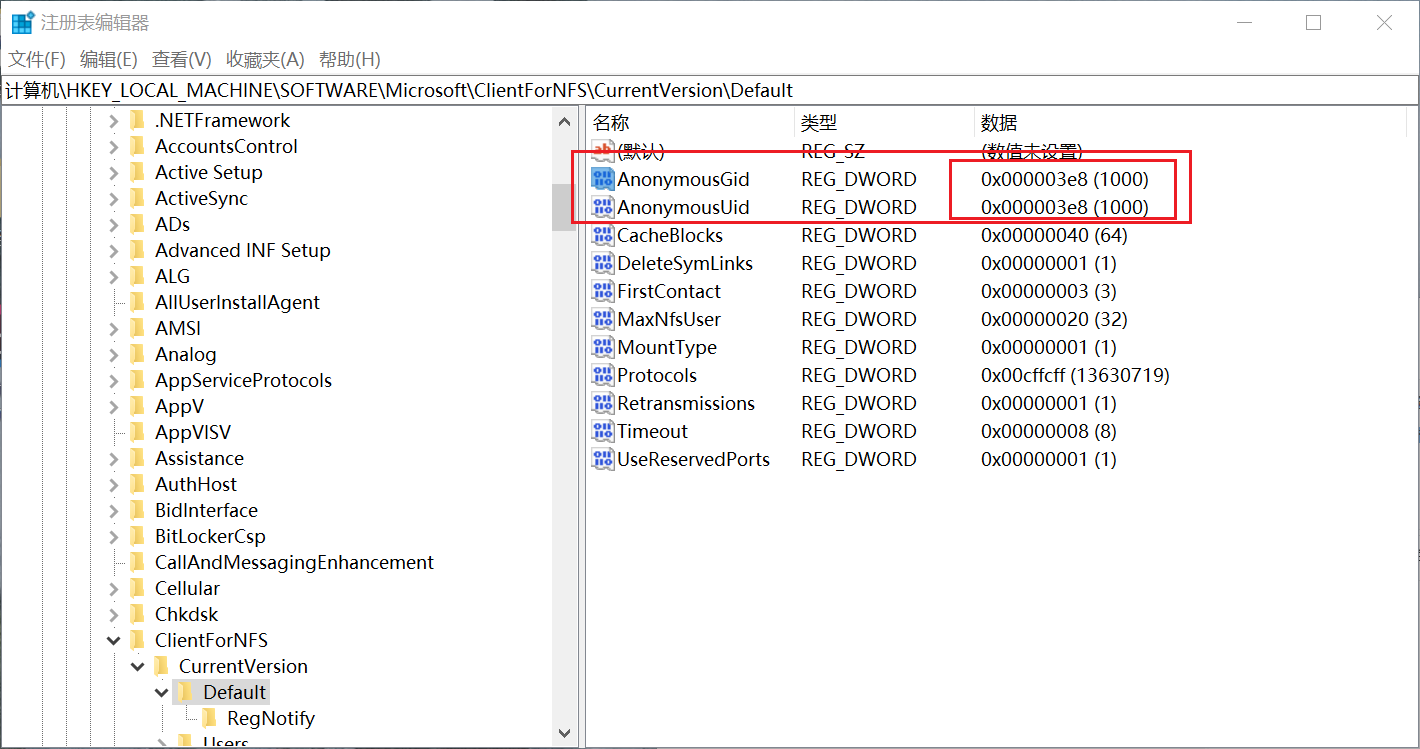

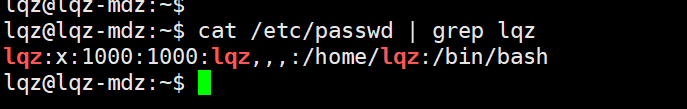

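Open the registry editor and go to:

    HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\ClientForNFS\CurrentVersion\Default

Add two entries there: AnonymousUid and AnonymousGid. They are typically set to the UID and GID of the user that owns the exported directory on the server.

Reboot the Windows host after making the change.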

160G mechanical disk configuration

Format, create the storage and mount the disk

The commands are simple, so everything is done in one go:

    # create the PV
    pvcreate /dev/sdc1
    # create the VG
    vgcreate vg_data1 /dev/sdc1
    # create the LV
    lvcreate -l 100%FREE -n lv_data1 vg_data1
    # format
    mkfs.ext4 /dev/vg_data1/lv_data1
    # create the directory
    mkdir /data1
    # mount the disk
    mount /dev/vg_data1/lv_data1 /data1

Edit /etc/fstab

    /dev/vg_data1/lv_data1 /data1 ext4 defaults 0 2

Test
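The test can follow the same pattern as for the NFS disk: unmount, let `mount -a` re-mount everything from /etc/fstab, and look at the result. A small sketch, with the hypothetical function name `check_fstab_mount`:

```shell
# Unmount, re-mount everything from /etc/fstab, then show the mount point.
# $1 = mount point to check. Run as root.
check_fstab_mount() {
    umount "$1" &&
    mount -a &&
    df -h "$1"
}

# Example, commented out because it touches real mounts:
# check_fstab_mount /data1
```

If the last step does not list the expected device, fix the fstab entry before rebooting.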

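Directory planning

    # /data1 is the data disk.
    # Note: later sections refer to paths under /data (for example /data/app);
    # this assumes /data points to the same location.
    # With enough resources you could also separate static and dynamic data: put static directories on NFS
    # so that you do not have to deploy so many copies of the software packages, and keep dynamic data on each node's local disk.
    # Such a split has to be planned in advance, and you need to know which paths can be layered and reused.
    # That is fairly hard, so it is not considered for now. Everything stays on the local disk.
    mkdir -p /data1/app/  # applications and instance-related data
    mkdir -p /data1/pkg/  # installation packages of the applications
    # mkdir -p /data1/docker/
    mkdir -p /data1/k8s/  # k8s-related components, if k8s is used
    mkdir -p /data1/log/  # general log directory
    mkdir -p /data1/tmp/  # general temporary file directory
    mkdir -p /data1/bak/  # general backup directory
    mkdir -p /data1/DS/   # general DataStore storage directory
    chown -R lqz:lqz /data1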

Done

    root@lqz-mdz:~# df -h
    Filesystem                     Size  Used Avail Use% Mounted on
    udev                           3.8G     0  3.8G   0% /dev
    tmpfs                          781M  1.9M  779M   1% /run
    /dev/mapper/vgubuntu-root      116G  7.5G  102G   7% /
    tmpfs                          3.9G     0  3.9G   0% /dev/shm
    tmpfs                          5.0M  4.0K  5.0M   1% /run/lock
    tmpfs                          3.9G     0  3.9G   0% /sys/fs/cgroup
    /dev/loop0                      64M   64M     0 100% /snap/core20/1828
    /dev/loop2                     347M  347M     0 100% /snap/gnome-3-38-2004/119
    /dev/loop1                     128K  128K     0 100% /snap/bare/5
    /dev/loop3                      92M   92M     0 100% /snap/gtk-common-themes/1535
    /dev/loop4                      46M   46M     0 100% /snap/snap-store/638
    /dev/loop5                      50M   50M     0 100% /snap/snapd/18357
    /dev/sda1                      511M  6.1M  505M   2% /boot/efi
    /dev/sdb1                      469G   28K  445G   1% /mnt/NfsShareFolder
    tmpfs                          781M   24K  781M   1% /run/user/126
    /dev/loop6                      39M   39M     0 100% /snap/snapd/21759
    tmpfs                          781M  8.0K  781M   1% /run/user/1000
    /dev/loop7                      64M   64M     0 100% /snap/core20/2318
    /dev/loop8                     350M  350M     0 100% /snap/gnome-3-38-2004/143
    /dev/mapper/vg_data1-lv_data1  146G   28K  139G   1% /data1
    root@lqz-mdz:~#

Installing what Ubuntu needs

Utility packages

    apt install net-tools lrzsz gzip curl vim git ntp openssh-server

Install WireGuard and configure the link to Tencent Cloud for NAT traversal (details omitted)

    root@lqz-mdz:/etc/wireguard# wg
    interface: txy
      public key: wunHIX[redacted]
      private key: (hidden)
      listening port: [redacted]

    peer: CQv85w1skfa3C[redacted]
      endpoint: 123.[redacted].[redacted].[redacted]:[redacted]
      allowed ips: 100.[redacted].[redacted].0/24
      latest handshake: 40 seconds ago
      transfer: 184 B received, 680 B sent
      persistent keepalive: every 15 seconds
    root@lqz-mdz:/etc/wireguard#

The grub menu waits too long at boot (30s)

Edit /etc/default/grub:

    GRUB_RECORDFAIL_TIMEOUT=5

Regenerate the grub configuration file with update-grub.
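The edit can also be scripted so that it is repeatable. The sketch below is one way to do it; the function name `set_recordfail_timeout` is made up, and it relies on GNU sed's `-i` option as shipped with Ubuntu.

```shell
# Set GRUB_RECORDFAIL_TIMEOUT in a grub defaults file.
# $1 = file (normally /etc/default/grub), $2 = timeout in seconds.
set_recordfail_timeout() {
    if grep -q '^GRUB_RECORDFAIL_TIMEOUT=' "$1"; then
        # the key already exists: replace its value in place
        sed -i "s/^GRUB_RECORDFAIL_TIMEOUT=.*/GRUB_RECORDFAIL_TIMEOUT=$2/" "$1"
    else
        # the key is missing: append it
        echo "GRUB_RECORDFAIL_TIMEOUT=$2" >> "$1"
    fi
}

# On the server (as root), followed by regenerating grub.cfg:
# set_recordfail_timeout /etc/default/grub 5 && update-grub
```

Running it twice leaves a single entry in the file, so it is safe to reuse in a setup script.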

Reboot and try it out.

Utility packages

    apt install net-tools lrzsz gzip curl vim git ntp

Docker service

Turn off swap

    swapoff -a
    sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

    root@lqz-mdz:/etc# free -h
                  total        used        free      shared  buff/cache   available
    Mem:          7.6Gi       574Mi       4.5Gi        38Mi       2.5Gi       6.8Gi
    Swap:            0B          0B          0B
    root@lqz-mdz:/etc# cat /etc/fstab
    # /etc/fstab: static file system information.
    #
    # Use 'blkid' to print the universally unique identifier for a
    # device; this may be used with UUID= as a more robust way to name devices
    # that works even if disks are added and removed. See fstab(5).
    #
    # <file system> <mount point>   <type>  <options>       <dump>  <pass>
    /dev/mapper/vgubuntu-root /               ext4    errors=remount-ro 0       1
    # /boot/efi was on /dev/sda1 during installation
    UUID=75E5-6D16  /boot/efi       vfat    umask=0077      0       1
    #/dev/mapper/vgubuntu-swap_1 none            swap    sw              0       0
    # 500g ssd
    UUID=67688c10-7997-41b7-9d1d-000b06737028 /mnt/NfsShareFolder ext4 defaults 0 2
    # 160G
    /dev/vg_data1/lv_data1 /data1 ext4 defaults 0 2
    root@lqz-mdz:/etc#

Docker installation

    apt remove docker docker.io containerd runc
    apt install curl
    curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
    add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
    apt install docker-ce docker-ce-cli containerd.io

    root@lqz-mdz:~# docker version
    Client: Docker Engine - Community
     Version:           26.1.4
     API version:       1.45
     Go version:        go1.21.11
     Git commit:        5650f9b
     Built:             Wed Jun  5 11:29:19 2024
     OS/Arch:           linux/amd64
     Context:           default

    Server: Docker Engine - Community
     Engine:
      Version:          26.1.4
      API version:      1.45 (minimum version 1.24)
      Go version:       go1.21.11
      Git commit:       de5c9cf
      Built:            Wed Jun  5 11:29:19 2024
      OS/Arch:          linux/amd64
      Experimental:     false
     containerd:
      Version:          1.6.33
      GitCommit:        d2d58213f83a351ca8f528a95fbd145f5654e957
     runc:
      Version:          1.1.12
      GitCommit:        v1.1.12-0-g51d5e94
     docker-init:
      Version:          0.19.0
      GitCommit:        de40ad0
    root@lqz-mdz:~#

Give the user permission to run docker
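The usual way to do this is to add the user to the `docker` group that the docker-ce package creates. A minimal sketch; the function name `allow_docker_for` is hypothetical and `lqz` is the user seen in the shell prompts of this article.

```shell
# Add a user to the "docker" group so that docker works without sudo.
# $1 = user name.
allow_docker_for() {
    sudo usermod -aG docker "$1"
}

# Example, commented out because it changes group membership:
# allow_docker_for lqz   # then log out and back in, or run: newgrp docker
```

Membership in this group is effectively root access to the host, so it should only go to trusted accounts.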

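Change docker's default storage location

    # stop docker (the socket unit first, so that it cannot re-activate the service)
    systemctl stop docker.socket
    systemctl stop docker

Stopping with systemctl stop docker alone is not enough, because the service is woken up again through its socket. That is why the docker.socket unit is stopped as well:

    root@lqz-mdz:~# systemctl stop docker
    Warning: Stopping docker.service, but it can still be activated by:
      docker.socket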

Before the change (the ExecStart line of the docker service unit):

    ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

After the change:

    ExecStart=/usr/bin/dockerd --data-root /data/docker -H tcp://0.0.0.0:2375 -H fd:// --containerd=/run/containerd/containerd.sock
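Instead of editing the packaged unit file, the same ExecStart can be placed in a systemd drop-in, which survives package upgrades. This is a sketch of that alternative and not the method used above; the function name `write_docker_override` is hypothetical, and the data root is passed in as a parameter because it has to match the directory the docker data is moved to.

```shell
# Write a systemd drop-in that overrides dockerd's ExecStart.
# $1 = drop-in directory, $2 = docker data root.
write_docker_override() {
    mkdir -p "$1" || return 1
    # The empty "ExecStart=" clears the packaged value before setting a new one.
    cat > "$1/override.conf" <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd --data-root $2 -H tcp://0.0.0.0:2375 -H fd:// --containerd=/run/containerd/containerd.sock
EOF
}

# Example (as root), commented out because it changes the service definition:
# write_docker_override /etc/systemd/system/docker.service.d /data1/docker
```

Port 2375 is unauthenticated, so it should only be reachable from the trusted LAN.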

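Move the existing data. The target must be the same directory that --data-root points to:

    mv /var/lib/docker /data1/docker

Registry mirrors go into /etc/docker/daemon.json:

    {
      "registry-mirrors": [
        "https://hub-mirror.c.163.com",
        "https://dockerproxy.com",
        "https://docker.io"
      ]
    }

Restart docker

    systemctl daemon-reload
    systemctl start docker.socket
    # start the service itself too, since the TCP listener is not socket-activated
    systemctl start docker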

Verify the remote port

    docker -H tcp://192.168.1.120:2375 version

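Download and install docker-compose

    sudo curl -L "https://github.com/docker/compose/releases/download/v2.23.3/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
    # the downloaded binary has to be made executable
    sudo chmod +x /usr/local/bin/docker-compose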
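If the download returns a 404, one likely cause is the capitalised OS name from `uname -s`. As far as I know the Compose v2 release assets use lowercase names such as `docker-compose-linux-x86_64`, so treat that as an assumption and check the release page. A small sketch with the hypothetical helper `compose_url`:

```shell
# Build the download URL for a standalone Docker Compose v2 binary.
# $1 = version tag, $2 = OS name, $3 = CPU architecture.
compose_url() {
    # assumption: asset names use the lowercase OS name
    os=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
    echo "https://github.com/docker/compose/releases/download/$1/docker-compose-$os-$3"
}

compose_url v2.23.3 "$(uname -s)" "$(uname -m)"
```

The printed URL can be passed to curl -L in place of the hand-written one.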

After uploading the binary, verify that it works:

    root@lqz-mdz:~# docker-compose version
    Docker Compose version v2.17.2

Java applications

Maven mirror source

JDK installation

Download the JDK package (omitted).

    lqz@lqz-mdz:/data/app/jdk$ pwd
    /data/app/jdk
    lqz@lqz-mdz:/data/app/jdk$ ls -l
    total 8
    drwxr-xr-x 7 root root 4096 May  3  2023 jdk11.0.19
    drwxr-xr-x 7 root root 4096 Apr  2  2019 jdk1.8.0_212
    lqz@lqz-mdz:/data/app/jdk$

I installed two JDK versions. The mainstream seems to be moving to JDK 17 now, but most projects are still on JDK 8.

Maven installation

Download the Maven package.

    lqz@lqz-mdz:/data/app$ ls -l
    total 16
    drwxr-xr-x 6 lqz lqz 4096 May 14  2023 maven3.6.2
    lqz@lqz-mdz:/data/app$

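Node applications

npm mirror source

Install the nvm environment

    curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.7/install.sh | bash

Verify that nvm was installed successfully (nvm --version), then install Node.js versions:

    nvm install node      # install the latest version
    nvm install 14.17.0   # install a specific version

By default nvm is installed under the home directory. You can of course move the nvm directory elsewhere:

    lqz@lqz-mdz:/data/app$ ls -al
    total 32
    drwxrwxr-x 8 lqz lqz 4096 May 14  2023 .nvm
    lqz@lqz-mdz:/data/app$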
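One way to get nvm into a directory such as /data/app/.nvm is to set `NVM_DIR` before running the official installer from the nvm-sh/nvm repository, which honours that variable but expects the directory to exist. The sketch below assumes the release tag v0.39.7 and uses the made-up function name `install_nvm_to`; pick whatever release is current.

```shell
# Install nvm into a custom directory instead of ~/.nvm.
# $1 = target directory (created here, since the installer expects it to exist).
install_nvm_to() {
    mkdir -p "$1" &&
    curl -fsSL https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.7/install.sh |
        NVM_DIR="$1" bash
}

# Example, commented out because it downloads and runs the installer:
# install_nvm_to /data/app/.nvm
```

The shell profile then needs `export NVM_DIR=...` pointing at the same directory before `nvm.sh` is sourced.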

Python applications

Python mirror source

miniconda

    wget https://mirrors.tuna.tsinghua.edu.cn/anaconda/miniconda/Miniconda3-py312_24.4.0-0-Linux-x86_64.sh

Make the script executable and run it.
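This step can be done non-interactively with the installer's `-b` (batch mode) and `-p` (install prefix) options. A sketch with the hypothetical wrapper `install_miniconda`; the prefix in the example matches the directory listing that follows.

```shell
# Make the Miniconda installer executable and run it in batch mode.
# $1 = installer script, $2 = install prefix.
install_miniconda() {
    chmod +x "$1" &&
    "$1" -b -p "$2"
}

# Example, commented out because it performs a real installation:
# install_miniconda ./Miniconda3-py312_24.4.0-0-Linux-x86_64.sh /data/app/python/miniconda3
```

Batch mode skips the prompts, including the one that would add the conda initialisation block to the shell profile.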

    (base) lqz@lqz-mdz:/data/app/python$ ls -l
    total 4
    drwxrwxr-x 19 lqz lqz 4096 Jun 16 22:38 miniconda3
    (base) lqz@lqz-mdz:/data/app/python$

Add the environment variables

    (base) lqz@lqz-mdz:/data/app/python$ cat ~/.bash_conda
    # >>> conda initialize >>>
    # !! Contents within this block are managed by 'conda init' !!
    __conda_setup="$('/data/app/python/miniconda3/bin/conda' 'shell.bash' 'hook' 2> /dev/null)"
    if [ $? -eq 0 ]; then
        eval "$__conda_setup"
    else
        if [ -f "/data/app/python/miniconda3/etc/profile.d/conda.sh" ]; then
            . "/data/app/python/miniconda3/etc/profile.d/conda.sh"
        else
            export PATH="/data/app/python/miniconda3/bin:$PATH"
        fi
    fi
    unset __conda_setup
    # <<< conda initialize <<<

Switch to domestic mirror sources (including pip)

    (base) lqz@lqz-mdz:/data/app/python$ ls -al
    total 16
    drwxrwxr-x  3 lqz lqz 4096 Jun 16 22:42 .
    drwxr-xr-x  9 lqz lqz 4096 Jun 16 22:37 ..
    -rw-rw-r--  1 lqz lqz  777 Jun 16 22:42 .condarc
    drwxrwxr-x 19 lqz lqz 4096 Jun 16 22:38 miniconda3
    (base) lqz@lqz-mdz:/data/app/python$
    (base) lqz@lqz-mdz:/data/app/python$ ls -ld ~/.condarc
    lrwxrwxrwx 1 lqz lqz 25 Jun 16 22:42 /home/lqz/.condarc -> /data/app/python/.condarc
    (base) lqz@lqz-mdz:/data/app/python$

    (base) lqz@lqz-mdz:/data/app/python$ cat .condarc
    channels:
      - defaults
    show_channel_urls: true
    default_channels:
      - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main
      - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/r
      - https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/msys2
    custom_channels:
      conda-forge: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      msys2: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      bioconda: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      menpo: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      pytorch: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      pytorch-lts: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      simpleitk: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud
      deepmodeling: https://mirrors.tuna.tsinghua.edu.cn/anaconda/cloud/
    (base) lqz@lqz-mdz:/data/app/python$

Point pip at a domestic source

    pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

These files can of course all be kept together on the application disk, with symlinks left in the home directory:

    (base) lqz@lqz-mdz:/data/app/python$ ls -ld ~/.config/pip
    lrwxrwxrwx 1 lqz lqz 20 Jun 16 22:51 /home/lqz/.config/pip -> /data/app/python/pip
    (base) lqz@lqz-mdz:/data/app/python$ ls -ld ~/.conda
    lrwxrwxrwx 1 lqz lqz 23 Jun 16 22:47 /home/lqz/.conda -> /data/app/python/.conda
    (base) lqz@lqz-mdz:/data/app/python$ ls -ld ~/.condarc
    lrwxrwxrwx 1 lqz lqz 25 Jun 16 22:42 /home/lqz/.condarc -> /data/app/python/.condarc
    (base) lqz@lqz-mdz:/data/app/python$
    (base) lqz@lqz-mdz:/data/app/python$ ls -al
    total 24
    drwxrwxr-x  5 lqz lqz 4096 Jun 16 22:51 .
    drwxr-xr-x  9 lqz lqz 4096 Jun 16 22:37 ..
    drwxrwxr-x  2 lqz lqz 4096 Jun 16 22:45 .conda
    -rw-rw-r--  1 lqz lqz  777 Jun 16 22:42 .condarc
    drwxrwxr-x 19 lqz lqz 4096 Jun 16 22:38 miniconda3
    drwxrwxr-x  2 lqz lqz 4096 Jun 16 22:50 pip
    (base) lqz@lqz-mdz:/data/app/python$
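The symlinks in the listing above can be created with a move followed by `ln -s`. A minimal sketch; the helper name `relocate_with_symlink` is made up for illustration.

```shell
# Move a file or directory to a new location and leave a symlink behind.
# $1 = current path (e.g. ~/.condarc), $2 = new path on the data disk.
relocate_with_symlink() {
    mkdir -p "$(dirname "$2")" &&
    mv "$1" "$2" &&
    ln -s "$2" "$1"
}

# Examples, commented out because they move real configuration:
# relocate_with_symlink ~/.condarc    /data/app/python/.condarc
# relocate_with_symlink ~/.config/pip /data/app/python/pip
```

Tools keep reading the original path, while the data itself lives on the application disk.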

Not used yet. I will fill this in when it is needed.

Environment variables

The environment variables I configured are meant for the gitlab-runner container, so the directories are the ones mapped inside the container.

If you need to run things on the host, adjust the directories yourself.

    lqz@lqz-mdz:/data/app/gitlab_jenkins/gitlab-runner/env_profiles$ cat gitlab-runner.sh
    export MAVEN_HOME=/home/gitlab-runner/maven/maven3.6.2
    export JAVA_HOME=/home/gitlab-runner/jdk/jdk1.8.0_212
    export PATH=$PATH:$MAVEN_HOME/bin:$JAVA_HOME/bin

    export NVM_DIR="/home/gitlab-runner/.nvm"
    [ -s "$NVM_DIR/nvm.sh" ] && \. "$NVM_DIR/nvm.sh"

    export NVM_NODEJS_ORG_MIRROR=https://npmmirror.com/mirrors/node/
    export NVM_NPM_ORG_MIRROR=http://npm.taobao.org/mirrors/npm
    export NVM_IOJS_ORG_MIRROR=http://npm.taobao.org/mirrors/iojs

Installing with docker

CI/CD components

gitlab_jenkins

The configuration I use is below, for reference only.

Depending on the structure you need, decide for yourself which important directories to persist to disk.

    version: '3.0'
    networks:
      docker_gitlab_jenkins_net:
        driver: bridge
        ipam:
          config:
            - subnet: 172.0.0.0/24
    services:
      gitlab:
        image: 'xiaochouyou/gitlab-ce-zh:11.1.4'
        container_name: "gitlab_n"
        restart: always
        privileged: true
        hostname: 'gitlab'
        networks:
          docker_gitlab_jenkins_net:
            ipv4_address: 172.0.0.3
        environment:
          TZ: 'Asia/Shanghai'
          GITLAB_OMNIBUS_CONFIG: |
            external_url 'http://gitlab.testserv.cn:38080'
            gitlab_rails['time_zone'] = 'Asia/Shanghai'
            gitlab_rails['smtp_enable'] = true
            gitlab_rails['gitlab_shell_ssh_port'] = 38022
        ports:
          - '38080:38080'
          - '38443:443'
          - '38022:38022'
        volumes:
          - /etc/timezone:/etc/timezone:ro
          - /etc/localtime:/etc/localtime:ro
          - /data/app/gitlab_jenkins/gitlab/config:/etc/gitlab
          - /data/app/gitlab_jenkins/gitlab/data:/var/opt/gitlab
          - /data/app/gitlab_jenkins/gitlab/logs:/var/log/gitlab
        deploy:
          resources:
            limits:
              cpus: '2'
              memory: 4096M
            reservations:
              cpus: '0.25'
              memory: 512M
      gitlab-runner:
        networks:
          docker_gitlab_jenkins_net:
            ipv4_address: 172.0.0.4
        image: xiaochouyou/gitlab-runner:15.11.0
        restart: always
        container_name: gitlab-runner_n
        privileged: true
        volumes:
          - /etc/timezone:/etc/timezone:ro
          - /etc/localtime:/etc/localtime:ro
          - /usr/bin/docker:/usr/bin/docker
          - /var/run/docker.sock:/var/run/docker.sock
          - /usr/lib/x86_64-linux-gnu/libltdl.so.7:/usr/lib/x86_64-linux-gnu/libltdl.so.7
          - /data/app/gitlab_jenkins/gitlab-runner/config:/etc/gitlab-runner
          - /data/app/gitlab_jenkins/gitlab-runner/env_profiles:/home/gitlab-runner/env_profiles
          - /data/app/gitlab_jenkins/gitlab-runner/.git-credentials:/home/gitlab-runner/.git-credentials
          - /data/app/gitlab_jenkins/nginx/myhtml:/data/html
          - /data/app/jdk:/home/gitlab-runner/jdk
          - /data/app/maven3.6.2:/home/gitlab-runner/maven/maven3.6.2
          - /data/app/.m2:/home/gitlab-runner/.m2
          - /data/app/.nvm:/home/gitlab-runner/.nvm
        deploy:
          resources:
            limits:
              cpus: '2'
              memory: 2048M
            reservations:
              cpus: '0.5'
              memory: 512M
      jenkins:
        networks:
          docker_gitlab_jenkins_net:
            ipv4_address: 172.0.0.5
        image: xiaochouyou/jenkins:lts
        volumes:
          - /etc/timezone:/etc/timezone:ro
          - /etc/localtime:/etc/localtime:ro
          - /var/run/docker.sock:/var/run/docker.sock
          - /usr/bin/docker:/usr/bin/docker
          - /usr/lib/x86_64-linux-gnu/libltdl.so.7:/usr/lib/x86_64-linux-gnu/libltdl.so.7
          - /data/app/gitlab_jenkins/jenkins/data/jenkins/:/var/jenkins_home
          - /data/app/gitlab_jenkins/jenkins/Myblog:/var/jenkins_home/workspace/Myblog
          - /data/app/maven3.6.2:/var/jenkins_home/maven/maven3.6.2
          - /data/app/.m2:/var/jenkins_home/.m2
          - /data/app/.nvm:/var/jenkins_home/.nvm
        ports:
          - "28080:8080"
        expose:
          - "8080"
          - "50000"
        deploy:
          resources:
            limits:
              cpus: '2'
              memory: 1024M
            reservations:
              cpus: '0.5'
              memory: 128M
        privileged: true
        user: root
        restart: always
        container_name: jenkins_n
        environment:
          JAVA_OPTS: '-Xms256m -Xmx512m -XX:PermSize=256M -XX:MaxPermSize=512m -Djava.util.logging.config.file=/var/jenkins_home/log.properties'
      nginx:
        container_name: nginx_n
        image: nginx:1.23.2-alpine
        restart: always
        networks:
          docker_gitlab_jenkins_net:
            ipv4_address: 172.0.0.7
        ports:
          - 8080:8080
        environment:
          - NGINX_PORT=8080
        volumes:
          - /etc/timezone:/etc/timezone:ro
          - /etc/localtime:/etc/localtime:ro
          - /data/app/gitlab_jenkins/nginx/myhtml:/data/html:ro
          - /data/app/gitlab_jenkins/nginx/etc:/etc/nginx:rw
          - /data/app/gitlab_jenkins/nginx/Myblog:/etc/data/Myblog:rw
        deploy:
          resources:
            limits:
              cpus: '0.50'
              memory: 512M
            reservations:
              cpus: '0.10'
              memory: 128M

    version: '3'
    services:
      zookeeper:
        image: wurstmeister/zookeeper
        container_name: zookeeper
        ports:
          - "2181:2181"
        deploy:
          resources:
            limits:
              cpus: '0.50'
              memory: 512M
            reservations:
              cpus: '0.10'
              memory: 128M
      kafka:
        image: wurstmeister/kafka
        depends_on: [ zookeeper ]
        container_name: kafka
        ports:
          - "9092:9092"
        volumes:
          - /data/app/kafka_redis_zk/kafka/config:/opt/kafka_2.13-2.8.1/config
          - /data/app/kafka_redis_zk/kafka/bin:/opt/kafka_2.13-2.8.1/bin
        environment:
          KAFKA_ADVERTISED_HOST_NAME: 192.168.3.120
          KAFKA_CREATE_TOPICS: "test:1:1"
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
        deploy:
          resources:
            limits:
              cpus: '0.50'
              memory: 512M
            reservations:
              cpus: '0.10'
              memory: 128M
      redis-master:
        image: redis:6.2.6-alpine
        container_name: redis_6
        ports:
          - 26379:6379
        volumes:
          - /etc/timezone:/etc/timezone:ro
          - /etc/localtime:/etc/localtime:ro
          - /data/app/kafka_redis_zk/redis/redis.conf:/usr/local/etc/redis/redis.conf:rw
          - /data/app/kafka_redis_zk/redis/data:/data:rw
        deploy:
          resources:
            limits:
              cpus: '0.50'
              memory: 512M
            reservations:
              cpus: '0.10'
              memory: 128M

Reference for common redis settings

Resource usage overview

    CONTAINER ID   NAME              CPU %     MEM USAGE / LIMIT     MEM %     NET I/O           BLOCK I/O         PIDS
    c8f2a2cd42d4   gitlab-runner_n   0.14%     154.5MiB / 2GiB       7.54%     59.2MB / 108MB    85MB / 240MB      11
    d050bbae1083   kafka             0.74%     357.4MiB / 512MiB     69.80%    917kB / 1.86MB    5.43MB / 8.29MB   73
    34c6eba6bbae   zookeeper         0.11%     115.2MiB / 512MiB     22.51%    1.47MB / 875kB    9.05MB / 500kB    23
    185025bcca28   redis_6           0.20%     3.309MiB / 512MiB     0.65%     12.3kB / 0B       4.04MB / 0B       5
    dcc7610dac31   jenkins_n         0.11%     333.8MiB / 1GiB       32.60%    17kB / 1.77kB     36.3MB / 6.83MB   39
    46449e2d5314   gitlab_n          6.63%     2.963GiB / 4GiB       74.08%    109MB / 92.8MB    814MB / 3.06GB    303
    5cf63bfc871e   nginx_n           0.00%     6.09MiB / 512MiB      1.19%     86.2kB / 8.22MB   7.53MB / 0B       5

At idle the CPU is mostly free. Memory is what gets used up, so adding more memory later is worth considering.

Wrapping up for now

The structure between the applications looks roughly like this:

    Tencent Cloud (99 yuan/year) ----->----> always-on host <------<------ personal PC
          |        NAT traversal                |              LAN            |
          |                                     |                             |
          |      ----->---->                    | ---- gitlab                 |
          |      reverse proxy                  |                             |
          |                                     |                             |
          |      -->---> image-hosting folder <----<---                       |
          |                                     |              NFS            |
          |                                     |                             |
          |                                     | ----- other projects <---<----
          |                                     |              http
          |                                     |
          |                                     | ---- nginx
          |                                     |
          |                                     | ---- gitlab-run
          |                                     |
          |                                     | ---- kafka
          |                                     |
          |                                     | ---- zookeeper
          |                                     |
          |                                     | ---- redis
          |                                     |
          |                                     | ......

Utility applications

NAT traversal network configuration

Monitoring

Metric collection

Data display

Alert dispatch